Differentiaali- ja integraalilaskenta

| Site: | MyCourses |

| Course: | MS-A0101 - Differentiaali- ja integraalilaskenta 1 (TFM), Luento-opetus, 13.9.2021-27.10.2021 |

| Book: | Differentiaali- ja integraalilaskenta |


| Date: | Sunday, 30 June 2024, 3:36 PM |

Description

Lecture material for the English-language MOOC course, based on the lectures of this course. It includes interactive JSXGraph figures that are not in the Finnish lecture slides. At this stage only chapters 1, 2, 7 and 9 are available in Finnish.

1. Sequences

Contents

- Basic concepts

- Important sequences

- Convergence and limit

Sequences

This chapter covers the most important concepts related to sequences. In practice we only deal with sequences of real numbers.

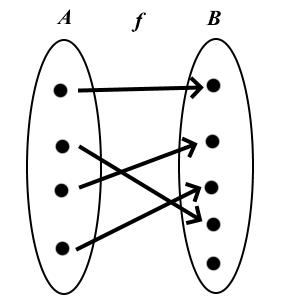

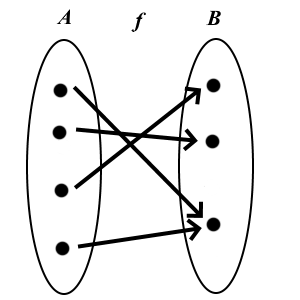

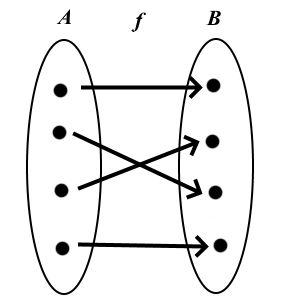

Definition: Sequence

Let \(M\) be a non-empty set. A sequence is a function

\[f:\mathbb{N}\rightarrow M.\]

This is often called a sequence in \(M\).

Note. Because \(\mathbb{N}\) is an ordered set, the terms \(f(n)\) of a sequence inherit a corresponding order. The elements of a set, by contrast, have in general no prescribed order.

Definition: Terms and indices of a sequence

Instead of \(f(n)\), the following notations are also used for sequences:

\[(a_{1}, a_{2}, a_{3}, \ldots) = (a_{n})_{n\in\mathbb{N}} = (a_{n})_{n=1}^{\infty} = (a_{n})_{n}.\]

The elements \(a_{1},a_{2},a_{3},\ldots\in M\) are the terms of the sequence.

Through the function \[\begin{aligned} f:\mathbb{N} \rightarrow & M \\ n \mapsto & a_{n}\end{aligned}\] a unique number \(n\in\mathbb{N}\) is associated with each term of the sequence. It is written as a subscript and called the index of the corresponding term; each term of the sequence can thus be identified by its index.

| \(n\) | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | \(\ldots\) |
|---|---|---|---|---|---|---|---|---|---|---|
| | \(\downarrow\) | \(\downarrow\) | \(\downarrow\) | \(\downarrow\) | \(\downarrow\) | \(\downarrow\) | \(\downarrow\) | \(\downarrow\) | \(\downarrow\) | |
| \(a_{n}\) | \(a_{1}\) | \(a_{2}\) | \(a_{3}\) | \(a_{4}\) | \(a_{5}\) | \(a_{6}\) | \(a_{7}\) | \(a_{8}\) | \(a_{9}\) | \(\ldots\) |

Examples

Example 1: The sequence of natural numbers

The sequence \((a_{n})_{n}\) defined by \(a_{n}:=n,\,n\in \mathbb{N}\), is called the sequence of natural numbers. Its first terms are:

\[a_1=1,\, a_2=2,\, a_3=3, \ldots\]


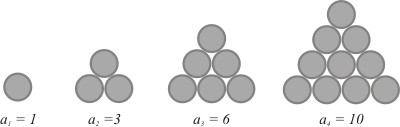

Example 2: The sequence of triangular numbers

Triangular numbers get their name from the following geometric construction: coins are arranged so that they form ever larger equilateral triangles.

Two coins are placed below the first coin, so that the second stage has \(a_2=3\) coins. Next, three new coins are placed below this triangle, giving \(a_3=6\) coins in total.

Continuing in the same way, we see that, for example, the 10th triangular number is obtained by adding up the first 10 natural numbers:

\[a_{10} = 1+2+3+\ldots+9+10.\] The general formula for the terms of the sequence of triangular numbers is \(a_{n} = 1+2+3+\ldots+(n-1)+n\). Triangular numbers are usually denoted by \(T_n\) (T for 'triangle').

This motivates the following definition:

Definition: Sum sequence

Let \((a_n)_n\) be a sequence in a set \(M\) on which addition is defined. We write \[a_1 + a_2 + a_3 + \ldots + a_{n-1} + a_n =: \sum_{k=1}^n a_k.\] The symbol \(\sum\) is the Greek capital letter sigma. The summation index \(k\) runs from the initial value 1 to the final value \(n\).

The sum sequence is thus obtained from the original sequence by adding up its first terms, one more term at each step. Especially in the context of series, the name sequence of partial sums is used.

The general formula for the triangular numbers can therefore be written as

\[T_n = \sum_{k=1}^n k,\] so they form the sum sequence of the sequence of natural numbers.
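The sum sequence can be computed as a running sum. The following is a minimal sketch, not part of the original material; the function name `triangular_numbers` is chosen here for illustration only.

```python
from itertools import accumulate


def triangular_numbers(count):
    """Return [T_1, ..., T_count] as running sums of 1, 2, 3, ..."""
    naturals = range(1, count + 1)       # a_n = n
    return list(accumulate(naturals))    # T_n = a_1 + ... + a_n


T = triangular_numbers(10)
print(T)  # [1, 3, 6, 10, 15, 21, 28, 36, 45, 55]
```

Each entry is obtained from the previous one by adding the next natural number, which is exactly how the coin triangles grow.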

Example 3: The sequence of square numbers

The sequence of square numbers \((q_n)_n\) is defined by \(q_n=n^2\). Its terms can be illustrated by arranging coins in the shape of a square.

One interesting observation is that the sum of two consecutive triangular numbers is always a square number. For example, \(3+1=4\) and \(6+3=9\). In general, using the definitions one can show that for \(n\geq 2\)

\[q_n=T_n + T_{n-1}.\]
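One possible way to verify this identity is sketched below. It assumes the closed form \(T_n = \frac{n(n+1)}{2}\) for the triangular numbers, which is a standard fact but is not derived in this section:

\[\begin{aligned}T_n + T_{n-1} &= \frac{n(n+1)}{2} + \frac{(n-1)n}{2} \\ &= \frac{n\left((n+1)+(n-1)\right)}{2} \\ &= \frac{n\cdot 2n}{2} \\ &= n^2 = q_n.\end{aligned}\]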

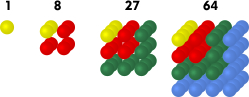

Example 4: The sequence of cubic numbers

Similarly, the sequence of cubic numbers is defined by \[a_n := n^3.\] The first terms of the sequence are then \((1,8,27,64,125,\ldots)\).

Example 5.

Let \((q_n)_n\) with \(q_n := n^2\) be the sequence of square numbers \[(1,4,9,16,25,36,49,64,81,100, \ldots)\] and define the function \(\varphi(n) = 2n\). The sequence \((q_{2n})_n\) is then \[\begin{aligned}(q_{2n})_n &= (q_2,q_4,q_6,q_8,q_{10},\ldots) \\ &= (4,16,36,64,100,\ldots).\end{aligned}\]

Definition: Difference sequence

From the terms of a sequence \((a_{n})_{n}=a_{1},\, a_{2},\, a_{3},\ldots,\, a_{n},\ldots\) one can also form the differences of consecutive terms. The sequence \[(a_{n+1}-a_{n})_{n}:=a_{2}-a_{1}, a_{3}-a_{2},\dots\] is called the first difference sequence of the original sequence \((a_{n})_{n}\).

The first difference sequence of the first difference sequence is the second difference sequence of the original sequence. The \(n\)th difference sequence is defined analogously.

Example 6.

Consider the sequence \((a_n)_n\) with \(a_n := \frac{n^2+n}{2}\), i.e. \[(a_n)_n = (1,3,6,10,15,21,28,36,\ldots).\] Let \((b_n)_n\) be its first difference sequence. Then \[\begin{aligned}(b_n)_n &= (a_2-a_1, a_3-a_2, a_4-a_3,\ldots) \\ &= (2,3,4,5,6,7,8,9,\ldots).\end{aligned}\] The general term of \((b_n)_n\) is \[\begin{aligned}b_n &= a_{n+1}-a_{n} \\ &= \frac{(n+1)^2+(n+1)}{2} - \frac{n^2+n}{2} \\ &= \frac{(n+1)^2+(n+1)-n^2 - n }{2} \\ &= \frac{(n^2+2n+1)+1-n^2}{2} \\ &= \frac{2n+2}{2} \\ &= n + 1.\end{aligned}\]
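The computation in Example 6 can be checked numerically. This is a small sketch under the assumption that a finite list of the first terms is enough for illustration; `first_differences` is a hypothetical helper name.

```python
def first_differences(seq):
    """Return the differences of consecutive terms of a finite list."""
    return [later - earlier for earlier, later in zip(seq, seq[1:])]


a = [(n * n + n) // 2 for n in range(1, 9)]   # 1, 3, 6, 10, 15, 21, 28, 36
b = first_differences(a)                      # first difference sequence
c = first_differences(b)                      # second difference sequence

print(b)  # [2, 3, 4, 5, 6, 7, 8]
print(c)  # [1, 1, 1, 1, 1, 1]
```

The second difference sequence is constant here, which matches the fact that \(b_n = n+1\) grows by 1 at each step.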

Some important sequences

Certain sequences are central to many mathematical models and to their practical applications in other fields, such as the natural sciences and economics. Below we consider three such sequences: the arithmetic sequence, the geometric sequence and the Fibonacci sequence.

Arithmetic sequence

An arithmetic sequence can be defined in several ways:

Definition A: Arithmetic sequence

A sequence \((a_{n})_{n}\) is arithmetic if the difference \(d \in \mathbb{R}\) of consecutive terms is constant, i.e. \[a_{n+1}-a_{n}=d \text{ for all } n\in\mathbb{N}.\]

Remark: The explicit formula of an arithmetic sequence follows directly from Definition A: \[a_{n}=a_{1}+(n-1)\cdot d.\] The terms of an arithmetic sequence can also be computed with the recurrence formula (recursion formula) \[a_{n+1}=a_n + d.\]

Definition B: Arithmetic sequence

A sequence \((a_{n})_{n}\) is arithmetic if its first difference sequence is a constant sequence.

The definition also explains the name of the arithmetic sequence: the middle one of three consecutive terms is the arithmetic mean of the other two; for example

\[a_2 = \frac{a_1+a_3}{2}.\]

Example 1.

The sequence of natural numbers \[(a_n)_n = (1,2,3,4,5,6,7,8,9,\ldots)\] is arithmetic, because the difference of consecutive terms is \(d=1\).

Geometric sequence

The geometric sequence can likewise be characterized in several ways:

Definition: Geometric sequence

A sequence \((a_{n})_{n}\) with nonzero terms is geometric if the ratio of two consecutive terms is always a constant \(q\in\mathbb{R}\), i.e. \[\frac{a_{n+1}}{a_{n}}=q \text{ for all } n\in\mathbb{N}.\]

Remark. The recurrence formula \(a_{n+1} = q\cdot a_n \) for the terms of a geometric sequence and the explicit expression \[a_n=a_1\cdot q^{n-1}\] follow directly from the definition.

Here too the name has a logical background: since \(a_2^2 = a_1\cdot a_3\), the middle one of three consecutive terms is, up to sign, the geometric mean of the other two; for positive terms, for example, \[a_2 = \sqrt{a_1\cdot a_3}.\]

Example 2.

Let \(a\in\mathbb{R}\), \(a\neq 0\), and \(q\neq 0\). The sequence \((a_n)_n\) with \(a_n := aq^{n-1}\), i.e. \[\left( a_1, a_2, a_3, a_4,\ldots \right) = \left( a, aq, aq^2, aq^3,\ldots \right),\] is a geometric sequence. If \(a> 0\) and \(q>1\), the sequence is strictly increasing. If \(a>0\) and \(0<q<1\), it is strictly decreasing. The set of its terms \(\{a,aq,aq^2, aq^3,\ldots\}\) is finite if \(q=1\) (its only element is then \(a\)) or \(q=-1\) (its elements are then \(a\) and \(-a\)); otherwise this set is infinite.
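The explicit formula and the recurrence formula describe the same sequence. The sketch below compares them for one choice of values; the function names and the sample values \(a_1 = 3\), \(q = 2\) are assumptions made only for illustration.

```python
def geometric_explicit(a1, q, count):
    """First `count` terms from the explicit formula a_n = a1 * q**(n-1)."""
    return [a1 * q ** (n - 1) for n in range(1, count + 1)]


def geometric_recursive(a1, q, count):
    """First `count` terms (count >= 1) from the recurrence a_{n+1} = q * a_n."""
    terms = [a1]
    for _ in range(count - 1):
        terms.append(q * terms[-1])
    return terms


terms = geometric_explicit(3, 2, 6)
print(terms)                                 # [3, 6, 12, 24, 48, 96]
print(terms == geometric_recursive(3, 2, 6)) # True
# Geometric-mean property: the square of a middle term equals the product of its neighbours.
print(all(terms[i] ** 2 == terms[i - 1] * terms[i + 1] for i in range(1, 5)))  # True
```

With \(q = -1\) the same functions produce only the two values \(a\) and \(-a\), so the set of terms is finite in that case as well.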

Fibonacci sequence

The Fibonacci sequence is famous for its applications in biology. It appears, among other things, in models of population growth and in the structure of plants. The definition based on a recurrence formula is as follows:

Definition: Fibonacci sequence

Let \(a_0 = a_1 = 1\) and \[a_n := a_{n-2}+a_{n-1}\] for \(n\geq2\). The sequence \((a_n)_n\) is the Fibonacci sequence. Its terms are the Fibonacci numbers.

The sequence is named after the Italian Leonardo Pisano (13th century), known by the Latin name Filius Bonacci. He studied the reproduction of rabbit pairs in an idealized situation in which rabbits do not die and all pairs, old and new, reproduce at regular intervals. This led him to the sequence \[(1,1,2,3,5,8,13,21,34,55,\ldots).\]
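The recurrence translates directly into a loop. This is a minimal sketch; the function name `fibonacci` and the choice to return a finite list are assumptions for illustration.

```python
def fibonacci(count):
    """Return the first `count` Fibonacci numbers a_0, a_1, ..., with a_0 = a_1 = 1."""
    terms = []
    current, following = 1, 1            # a_0 and a_1
    for _ in range(count):
        terms.append(current)
        # a_n = a_{n-2} + a_{n-1}: shift the window one step forward
        current, following = following, current + following
    return terms


print(fibonacci(10))  # [1, 1, 2, 3, 5, 8, 13, 21, 34, 55]
```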

Example 3.

The florets of a sunflower are arranged in two families of spirals that wind outwards from the centre in opposite directions: 55 spirals clockwise and 34 counterclockwise.

The surface of a pineapple behaves in the same way: it has 21 spirals in one direction and 34 in the other. Similar structures are also found in some cacti and in the cones of conifers.

Convergence, divergence and limit

This section deals with the convergence of sequences. We start with the notion of a zero sequence and use it to arrive at the general notion of convergence.

Remark: Absolute value in \(\mathbb{R}\)

The absolute value function \(x \mapsto |x|\) plays a central role in the study of convergence of sequences. Its most important properties are reviewed below.

Definition: Absolute value

The absolute value \(|x|\) of a real number \(x\in\mathbb{R}\) is \[\begin{aligned}|x|:=\begin{cases}x, & \text{if }x\geq0,\\ -x, & \text{if }x<0.\end{cases}\end{aligned}\]

Figure: Graph of the absolute value function

Theorem: Rules for the absolute value

For all real numbers \(x,y\in\mathbb{R}\):

1. \(|x|\geq0\),

2. \(|x|=0\) if and only if \(x=0\),

3. \(|x\cdot y|=|x|\cdot|y|\) (multiplicativity),

4. \(|x+y|\leq|x|+|y|\) (triangle inequality).

Proof.

Parts 1.-3. These follow directly from the definition by considering separately the different signs of \(x\) and \(y\).

Part 4. This part can be proven by squaring both sides or by going through all the cases, as below.

Case 1. Let \(x,y \geq 0\). Then \[\begin{aligned}|x+y|=x+y=|x|+|y|\end{aligned}\] and the claim holds.

Case 2. Next let \(x,y < 0\). Then \[\begin{aligned}|x+y|=-(x+y)=(-x)+ (-y)=|x|+|y|.\end{aligned}\]

Case 3. Finally consider the case \(x\geq 0\) and \(y<0\), which splits into two subcases:

- If \(x \geq -y\), then \(x+y\geq 0\) and so \(|x+y|=x+y\) by the definition. Since \(y<0\), we have \(y<-y\) and hence \(x+y < x-y\). Thus \[\begin{aligned}|x+y| = x+y < x-y = |x|+|y|.\end{aligned}\]

- If \(x < -y\), then \(x+y<0\), and so \(|x+y|=-(x+y)=-x-y\). Since \(x\geq0\), we have \(-x \leq x\) and hence \(-x-y\leq x-y\). Therefore \[\begin{aligned}|x+y| = -x-y \leq x-y = |x|+|y|.\end{aligned}\]

If \(x<0\) and \(y\geq0\), the claim follows in the same way as in Case 3, with the roles of \(x\) and \(y\) interchanged.

\(\square\)

Zero sequence

Definition: Zero sequence

A sequence \((a_{n})_{n}\) is a zero sequence if for every \(\varepsilon>0\) there exists an index \(n_{0}\in\mathbb{N}\) such that \[|a_{n}| < \varepsilon\] for all \(n\geq n_{0},\, n\in\mathbb{N}\). In this case we say that the sequence converges to zero.

Intuitively: the terms of a zero sequence get arbitrarily close to zero once we go far enough along the sequence.

Example 1.

The sequence \((a_n)_n\) defined by \(a_{n}:=\frac{1}{n}\), i.e. \[\left(a_{1},a_{2},a_{3},a_{4},\ldots\right):=\left(\frac{1}{1},\frac{1}{2},\frac{1}{3},\frac{1}{4},\ldots\right),\] is called the harmonic sequence. Its terms are positive for all \(n\in\mathbb{N}\), but as the index \(n\) grows they get closer and closer to zero.

For example, if \(\varepsilon := \frac{1}{5000}\), then with the choice \(n_0 = 5001\) we have \(a_n<\frac{1}{5000}=\varepsilon\) whenever \(n\geq n_0\).

Figure: The harmonic sequence converges to zero

Example 2.

Consider the sequence \[(a_n)_n \text{ with } a_n:=\frac{1}{\sqrt{n}}.\] Let \(\varepsilon := \frac{1}{1000}\). Then with the choice \(n_0=1000001\), every term \(a_n\) with \(n\geq n_0\) satisfies \(a_n < \frac{1}{1000}=\varepsilon\).

Note. To show that a sequence is a zero sequence, one has to consider an arbitrary number \(\varepsilon \in \mathbb{R}\) with \(\varepsilon > 0\). One then tries to choose an index \(n_0\) from which on every \(|a_n|\) is smaller than \(\varepsilon\).

Example 3.

Consider the sequence \((a_n)_n\) with \[a_n := \left( -1 \right)^n \cdot \frac{1}{n^2}.\]

Because of the factor \((-1)^n\), two consecutive terms of the sequence always have opposite signs; such a sequence is more generally called an alternating sequence.

We show that this is a zero sequence. By the definition, for every \(\varepsilon > 0\) there must exist an \(n_0 \in \mathbb{N}\) such that the inequality \[|a_n|< \varepsilon\] holds for all terms \(a_n\) with \(n\geq n_0\).

So let \(\varepsilon > 0\) be arbitrary. Because the inequality \( |a_n|< \varepsilon\) has to hold for every \(\varepsilon>0\), the index \(n_0\) has to depend on \(\varepsilon\). More precisely, the inequality \[|a_{n_0}|=\left| \frac{1}{{n_0}^2} \right|= \frac{1}{{n_0}^2}<\varepsilon\] must hold for the index \(n_0\). Solving for \(n_0\) gives

\[n_0 > \frac{1}{\sqrt{\varepsilon}}.\] Any index \(n_0\) satisfying this condition is a valid choice once \(\varepsilon > 0\) has been fixed, because then \(|a_n| = \frac{1}{n^2}\leq\frac{1}{{n_0}^2}<\varepsilon\) for all \(n\geq n_0\).
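The dependence of \(n_0\) on \(\varepsilon\) can be made concrete. The sketch below uses exact rational arithmetic to avoid rounding issues; the helper `smallest_n0` and the sample value \(\varepsilon = 1/10000\) are assumptions made here for illustration.

```python
from fractions import Fraction
from math import floor, isqrt


def a(n):
    """Term a_n = (-1)**n / n**2 as an exact fraction."""
    return Fraction((-1) ** n, n * n)


def smallest_n0(eps):
    """Smallest natural number n0 with 1/n0**2 < eps, for a positive Fraction eps."""
    # n0**2 > 1/eps holds exactly when n0**2 > floor(1/eps), since n0**2 is an integer.
    return isqrt(floor(1 / eps)) + 1


eps = Fraction(1, 10000)
n0 = smallest_n0(eps)
print(n0)                                                     # 101
print(all(abs(a(n)) < eps for n in range(n0, n0 + 500)))      # True
print(abs(a(n0 - 1)) < eps)                                   # False
```

Any larger index would work equally well as \(n_0\); the definition only asks for existence, not for the smallest possible choice.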

Divergent examples

The following formulas do not define zero sequences:

- \(a_n = (-1)^n\)

- \(a_n = (-1)^n \cdot n\)

Theorem: Properties of zero sequences

Let \((a_n)_n\) and \((b_n)_n\) be sequences. Then:

1. If \((a_n)_n\) is a zero sequence and either \(b_n = a_n\) or \(b_n = -a_n\) for all \(n\in\mathbb{N}\), then \((b_n)_n\) is also a zero sequence.

2. If \((a_n)_n\) is a zero sequence and \(-a_n\leq b_n \leq a_n\) for all \(n\in\mathbb{N}\), then \((b_n)_n\) is also a zero sequence.

3. If \((a_n)_n\) is a zero sequence, then \((c\cdot a_n)_n\), \(c \in \mathbb{R}\), is also a zero sequence.

4. If \((a_n)_n\) and \((b_n)_n\) are zero sequences, then \((a_n + b_n)_n\) is also a zero sequence.

Proof.

Parts 1 and 2. Let \(\varepsilon>0\). Since \((a_n)_n\) is a zero sequence, by the definition there is an index \(n_0 \in \mathbb{N}\) such that \(|a_n|<\varepsilon\) for every \(n\geq n_0\). In both parts \(|b_n|\leq|a_n|\), so \(|b_n|\leq|a_n|<\varepsilon\) for every \(n\geq n_0\); this proves parts 1 and 2.

Part 3. If \(c=0\), the result is trivial. Let \(c\neq0\) and \(\varepsilon > 0\). Applying the definition with \(\frac{\varepsilon}{|c|}\) in place of \(\varepsilon\) gives an index \(n_0\) such that \[\begin{aligned}|a_n|<\frac{\varepsilon}{|c|}\end{aligned}\] for all \(n\geq n_0\). Multiplying by \(|c|\) we get \[\begin{aligned} |c|\cdot|a_n|=|c\cdot a_n|<\varepsilon\end{aligned}\] for all \(n\geq n_0\).

Part 4. Let \(\varepsilon>0\). Because \((a_n)_n\) is a zero sequence, by the definition there is an index \(n_0\in\mathbb{N}\) with \(|a_n|<\frac{\varepsilon}{2}\) for all \(n\geq n_0\). Analogously, for the zero sequence \((b_n)_n\) there is an \(m_0 \in \mathbb{N}\) with \(|b_n|<\frac{\varepsilon}{2}\) for all \(n\geq m_0\).

Then for all \(n \geq \max(n_0,m_0)\) the triangle inequality gives \[\begin{aligned}|a_n + b_n|\leq|a_n|+|b_n|<\frac{\varepsilon}{2}+\frac{\varepsilon}{2} = \varepsilon.\end{aligned}\]

\(\square\)

Convergence and divergence

Zero sequences can be used to study the convergence of sequences more generally:

Definition: Convergence and divergence

A sequence \((a_{n})_{n}\) converges to the limit \(a\in\mathbb{R}\) if for every \(\varepsilon>0\) there exists an index \(n_{0}\in\mathbb{N}\) such that \[|a_{n}-a| < \varepsilon \text{ for all }n\in\mathbb{N}\text{ with }n\geq n_{0}.\]

Equivalently:

A sequence \((a_{n})_{n}\) converges to the limit \(a\in\mathbb{R}\) if \((a_{n}-a)_{n}\) is a zero sequence.

Example 4.

Consider the sequence \((a_n)_n\) with \[a_n=\frac{2n^2+1}{n^2+1}.\] Computing terms of the sequence for large \(n\) suggests that \( a_n\to 2\) as \(n \to \infty\), so the limit of the sequence could be \(a=2\).

For a rigorous proof, we show that for every \(\varepsilon > 0\) there exists an index \(n_0\in\mathbb{N}\) such that every term \(a_n\) with \(n>n_0\) satisfies \[\left| \frac{2n^2+1}{n^2+1} - 2\right| < \varepsilon.\]

First we estimate the left-hand side: \[\begin{aligned}\left|\frac{2n^2+1}{n^2+1}-2\right| =&\left|\frac{2n^2+1-2\cdot\left(n^2+1\right)}{n^2+1}\right| \\ =&\left|\frac{2n^2+1-2n^2-2}{n^2+1}\right| \\ =&\left|-\frac{1}{n^2+1}\right| \\ =&\left|\frac{1}{n^2+1}\right| \\ <&\frac{1}{n}.\end{aligned}\]

Now let \(\varepsilon > 0\) be arbitrary. We choose the index \(n_0\in\mathbb{N}\) such that \[n_0 > \frac{1}{\varepsilon} \text{, or equivalently, } \frac{1}{n_0} < \varepsilon.\] Then for all \(n>n_0\) the estimate above gives \[\left|\frac{2n^2+1}{n^2+1}-2\right| < \frac{1}{n} < \frac{1}{n_0} < \varepsilon.\] This proves the claim, so by definition \(a=2\) is the limit of the sequence.

\(\square\)
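The choice of \(n_0\) in Example 4 can be tested on a finite range of indices. The following sketch is only a numerical sanity check, not a proof; the helper name `index_from_proof` and the value \(\varepsilon = 1/100\) are assumptions for illustration.

```python
from fractions import Fraction
from math import floor


def a(n):
    """Term a_n = (2n^2 + 1) / (n^2 + 1) as an exact fraction."""
    return Fraction(2 * n * n + 1, n * n + 1)


def index_from_proof(eps):
    """An index n0 with n0 > 1/eps, as chosen in the proof above."""
    return floor(1 / eps) + 1


eps = Fraction(1, 100)
n0 = index_from_proof(eps)
# Largest distance |a_n - 2| over a sample of indices n > n0.
worst = max(abs(a(n) - 2) for n in range(n0 + 1, n0 + 1001))
print(n0, worst < eps)  # 101 True
```

Because the estimate \(\frac{1}{n^2+1} < \frac{1}{n}\) is quite generous, the actual distances are far smaller than \(\varepsilon\) for these indices.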

If a sequence converges, it can have only one limit.

Theorem: Uniqueness of the limit

Suppose that a sequence \((a_{n})_{n}\) converges to a limit \(a\in\mathbb{R}\) and to a limit \(b\in\mathbb{R}\).

Then \(a=b\).

Proof. Assume \(a\ne b\) and choose \(\varepsilon:=\frac{1}{3}|a-b|>0.\) Then in particular \([a-\varepsilon,a+\varepsilon]\cap[b-\varepsilon,b+\varepsilon]=\emptyset.\)

Because \((a_{n})_{n}\) converges to \(a\), there is, by the definition of convergence, an index \(n_{0}\in\mathbb{N}\) with \(|a_{n}-a|< \varepsilon\) for \(n\geq n_{0}.\) Furthermore, because \((a_{n})_{n}\) converges to \(b\), there is also an \(\widetilde{n_{0}}\in\mathbb{N}\) with \(|a_{n}-b|< \varepsilon\) for \(n\geq\widetilde{n_{0}}.\) For \(n\geq\max\{n_{0},\widetilde{n_{0}}\}\) we have:

\[\begin{aligned}3\varepsilon\ = &\ |a-b|\\ = &\ |(a-a_{n})+(a_{n}-b)|\\ \leq &\ |a_{n}-a|+|a_{n}-b|\\ < &\ \varepsilon+\varepsilon=2\varepsilon.\end{aligned}\] Consequently we have obtained \(3\varepsilon<2\varepsilon\), which is a contradiction because \(\varepsilon>0\).

Therefore the assumption must be wrong, so \(a=b\).

\(\square\)

Definition: Limit

The limit of a convergent sequence is denoted by \[a_{n}\rightarrow a\text{ or }\lim_{n\rightarrow\infty}a_{n}=a.\] The notation is well defined by the theorem above. If a sequence has no limit, it diverges.

Theorem: Bounded sequence

A convergent sequence \((a_n)_n\) is bounded, i.e. there exists a constant \(C\in\mathbb{R}\) such that \[|a_n| < C\] for all \(n\in\mathbb{N}\).

Proof. Assume that the sequence \((a_n)_n\) has the limit \(a\). By the definition of convergence, for every \(\varepsilon > 0\) there is an index \(n_0\) such that \(|a_n - a|<\varepsilon\) for all \(n\geq n_0\). Choosing \(\varepsilon = 1\) gives, for \(n\geq n_0\),

\[\begin{aligned}|a_n|-|a|&\ \leq |a_n -a| \\ &\ < 1,\end{aligned}\] and therefore also \(|a_n|< |a|+1\).

Thus for all \(n\in \mathbb{N}\): \[|a_n|\leq \max \left\{ |a_1|,|a_2|,\ldots,|a_{n_0}|,|a|+1 \right\}=:r,\] and the claim holds with, for example, \(C:=r+1\).

\(\square\)

Properties of convergent sequences

Theorem: Subsequence

Let \((a_{n})_{n}\) be a convergent sequence with \(a_{n}\rightarrow a\), and let \((a_{\varphi(n)})_{n}\) be a subsequence of \((a_{n})_{n}\). Then \((a_{\varphi(n)})_{n}\rightarrow a\).

In words: every subsequence of a convergent sequence converges to the limit of the original sequence.

Proof. By the definition of a subsequence, the index map \(\varphi\) is strictly increasing, so \(\varphi(n)\geq n\). Let \(\varepsilon>0\). Because \(a_{n}\rightarrow a\), there is an index \(n_{0}\) with \(|a_{n}-a|<\varepsilon\) for \(n\geq n_{0}\). For these indices \(\varphi(n)\geq n\geq n_0\), and therefore \(|a_{\varphi(n)}-a|<\varepsilon\).

\(\square\)

Theorem: Rules of calculation

Let \((a_{n})_{n}\) and \((b_{n})_{n}\) be convergent sequences with \(a_{n}\rightarrow a\) and \(b_{n}\rightarrow b\). Then for all \(\lambda, \mu \in \mathbb{R}\):

1. \(\lambda \cdot (a_n)+\mu \cdot (b_n) \to \lambda \cdot a + \mu \cdot b\)

2. \((a_n)\cdot (b_n) \to a\cdot b\)

In words: sums and products of convergent sequences are convergent sequences.

Proof.

Part 1. Let \(\varepsilon > 0\). We must show that from some index on, \[|\lambda \cdot a_n + \mu \cdot b_n - \lambda \cdot a - \mu \cdot b| < \varepsilon.\] We estimate the left-hand side using the triangle inequality: \[|\lambda (a_n-a)+\mu (b_n - b)| \leq |\lambda|\cdot|a_n-a|+|\mu|\cdot|b_n-b|.\]

We may assume \(\lambda\neq 0\) and \(\mu\neq 0\), since a term with a zero coefficient vanishes. Because \((a_n)_n\) and \((b_n)_n\) converge, there are indices \(n_0\) and \(n_1\) such that \[\begin{aligned}|a_n - a| <\ \varepsilon_1 := &\ \textstyle \frac{\varepsilon}{2|\lambda|} \text{ for all }n\geq n_0\\ |b_n - b| <\ \varepsilon_2 := &\ \textstyle \frac{\varepsilon}{2|\mu|} \text{ for all }n\geq n_1\end{aligned}\]

Therefore \[\begin{aligned}|\lambda|\cdot|a_n-a|+|\mu|\cdot|b_n-b| < &\ |\lambda|\varepsilon_1 + |\mu|\varepsilon_2 \\ = &\ \textstyle{ \frac{\varepsilon}{2} + \frac{\varepsilon}{2} } = \varepsilon\end{aligned}\] for all \(n \geq \max \{n_0,n_1\}\). Hence the sequence \[\left( \lambda \left( a_n - a \right) + \mu \left( b_n - b \right) \right)_n\] is a zero sequence, and the claim is shown.

Part 2. Let \(\varepsilon > 0\). We have to show that there is an index \(n_0\) such that for all \(n > n_0\) \[|a_n b_n - a b| < \varepsilon.\] The left-hand side can be estimated as follows: \[\begin{aligned} |a_n b_n - a b| =&\ |a_n b_n - a b_n + a b_n - ab| \\ \leq &\ |b_n|\cdot|a_n-a| + |a|\cdot|b_n - b|.\end{aligned}\] We choose a number \(B>0\) such that \(|b_n| < B\) for all \(n\) and \(|a| < B\). Such a \(B\) exists because convergent sequences are bounded (see the theorem above). We can then use the estimate \[\begin{aligned}|b_n|\cdot|a_n-a| + |a|\cdot|b_n - b| \leq&\ B \cdot \left(|a_n - a| + |b_n - b| \right).\end{aligned}\] Since both sequences converge, there is an index \(n_0\) such that for all \(n>n_0\) we have \(|a_n - a|<\frac{\varepsilon}{2\cdot B}\) and \(|b_n - b|<\frac{\varepsilon}{2\cdot B}\). Putting everything together gives the desired inequality.

\(\square\)

2. Series

Convergence


From a sequence \((a_k)\) we can form its partial sums by setting \[s_n =a_1+a_2+\dots+a_n.\]

If the sequence of partial sums \((s_n)\) has a limit \(s\in \mathbb{R}\), then the series formed from the numbers \((a_k)\) converges and its sum is \(s\). In this case we write \[ a_1+a_2+\dots =\sum_{k=1}^{\infty} a_k = \lim_{n\to\infty}\underbrace{\sum_{k=1}^{n} a_k}_{=s_{n}} = s. \]

Indexing

It is advisable to index the partial sums in the same way as the original sequence \((a_k)\); for example, the partial sums of the sequence \((a_k)_{k=0}^{\infty}\) are \(s_0= a_0, s_1=a_0+a_1\), and so on.

The index of a series can be shifted without changing the series itself: \[\sum_{k=1}^{\infty} a_k =\sum_{k=0}^{\infty} a_{k+1} = \sum_{k=2}^{\infty} a_{k-1}.\]

A concrete example: \[\sum_{k=1}^{\infty} \frac{1}{k^2}=1+\frac{1}{4}+\frac{1}{9}+\dots= \sum_{k=0}^{\infty} \frac{1}{(k+1)^2}\]
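That an index shift leaves the partial sums unchanged can be checked on the concrete example. This is a small sketch with exact fractions; the helper name `partial_sums` and the cut-off \(n = 6\) are assumptions for illustration.

```python
from fractions import Fraction
from itertools import accumulate


def partial_sums(terms):
    """Return the list of partial sums s_1, s_2, ... of a finite list of terms."""
    return list(accumulate(terms))


n = 6
original = [Fraction(1, k * k) for k in range(1, n + 1)]      # terms for k = 1, ..., n
shifted = [Fraction(1, (k + 1) ** 2) for k in range(0, n)]    # terms for k = 0, ..., n-1

print(partial_sums(original) == partial_sums(shifted))  # True
```

Both lists contain the same terms in the same order, so the shift only changes the labels of the terms, not the series.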


Divergence of a series

A series that does not converge is divergent. This can happen in three different ways:

- the partial sums of the series grow without bound towards infinity

- the partial sums of the series decrease without bound towards minus infinity

- the sequence of partial sums oscillates so that it has no limit.

For a divergent series the notation \(\displaystyle\sum_{k=1}^{\infty} a_k\) does not really mean anything (it is not a real number). It can then be interpreted as denoting the sequence of partial sums, which is always well defined.

Basic results

Geometric series

The geometric series \[\sum_{k=0}^{\infty} aq^k\] converges if \(|q|<1\) (or \(a=0\)), and then its sum is \(\frac{a}{1-q}\). If \(|q|\ge 1\) and \(a\neq 0\), the series diverges.

Proof. For \(q\neq 1\) the partial sums satisfy

\[\sum_{k=0}^{n} aq^k =\frac{a(1-q^{n+1})}{1-q},\] from which the claim follows. For \(q=1\) the partial sums are \((n+1)a\).

\(\square\)

More generally \[\sum_{k=i}^{\infty} aq^k = \frac{aq^i}{1-q} = \frac{\text{first term of the series}}{1-q},\text{ if } |q|<1.\]

Example 1.

Find the sum of the series \[\sum_{k=1}^{\infty}\frac{3}{4^{k+1}}.\]

Solution. Since \[\frac{3}{4^{k+1}} = \frac{3}{4}\cdot \left( \frac{1}{4}\right)^k,\] this is a geometric series. Its sum is \[\frac{3}{4}\cdot \frac{1/4}{1-1/4} = \frac{1}{4}.\]
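The result of Example 1 can be cross-checked with exact partial sums. The sketch below is illustrative only; the function name `partial_sum` is an assumption.

```python
from fractions import Fraction


def partial_sum(n):
    """s_n = sum_{k=1}^{n} 3 / 4**(k+1), computed exactly."""
    return sum(Fraction(3, 4 ** (k + 1)) for k in range(1, n + 1))


for n in (1, 2, 5, 10):
    gap = Fraction(1, 4) - partial_sum(n)      # distance to the claimed sum 1/4
    print(n, partial_sum(n), gap == Fraction(1, 4 ** (n + 1)))
```

For these values the gap to \(\frac{1}{4}\) equals \(\frac{1}{4^{n+1}}\), which is consistent with the partial-sum formula of the geometric series and shrinks to zero as \(n\) grows.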

Rules of calculation

Properties of convergent series:

- \(\displaystyle{\sum_{k=1}^{\infty} (a_k+b_k) = \sum_{k=1}^{\infty} a_k + \sum_{k=1}^{\infty} b_k}\)

- \(\displaystyle{\sum_{k=1}^{\infty} (c\, a_k) = c\sum_{k=1}^{\infty} a_k}\), where \(c\in \mathbb{R}\) is a constant

Proof. These follow from the corresponding results for limits of sequences.

\(\square\)

Remark: Series have no product rule analogous to that of sequences, because already for sums of two terms we have, in general, \[(a_1+a_2)(b_1+b_2) \neq a_1b_1 +a_2b_2.\] The correct form of the product rule is the Cauchy product of series, which also takes the cross terms into account.

See, for example, https://en.wikipedia.org/wiki/Cauchy_product

Theorem 1.

If the series \(\displaystyle{\sum_{k=1}^{\infty} a_k}\) converges, then \[\displaystyle{\lim_{k\to \infty} a_k =0}.\]

Equivalently (contrapositive): if the terms \(a_k\) do not converge to zero, i.e. \[\displaystyle{\lim_{k\to \infty} a_k \neq 0}\] or the limit does not exist, then the series \(\displaystyle{\sum_{k=1}^{\infty} a_k}\) diverges.

Proof.

If the sum of the series is \(s\), then \(a_k=s_k-s_{k-1}\to s-s=0\).

\(\square\)

Remark: The property \(\lim_{k\to \infty} a_k = 0\) cannot be used to show that a series converges; cf. the following examples. This is one of the most common errors of reasoning with series!

Example

Investigate the convergence of the series \[\sum_{k=1}^{\infty} \frac{k}{k+1} = \frac{1}{2}+\frac{2}{3}+\frac{3}{4}+\dots\]

Solution. The limit of the general term of the series is \[\lim_{k\to\infty}\frac{k}{k+1} = 1.\] Since the limit is not zero, the series diverges.

Harmonic series

The harmonic series \[\sum_{k=1}^{\infty} \frac{1}{k} = 1+\frac{1}{2}+\frac{1}{3}+\dots\] diverges, even though the limit of the general term \(a_k=1/k\) is zero.

This classical result was first proved in the 14th century by Nicole Oresme, and many other arguments have been found since. Two different arguments are given here as examples.

i) Elementary proof. Assume that the series converges and try to derive a contradiction. So let \(s\in\mathbb{R}\) be the sum of the harmonic series: \(s = \sum_{k=1}^{\infty}1/k\). Then

\[s = \left(\color{#4334eb}{1} + \color{#eb7134}{\frac{1}{2}}\right) + \left(\color{#4334eb}{\frac{1}{3}} + \color{#eb7134}{\frac{1}{4}}\right) + \left(\color{#4334eb}{\frac{1}{5}} + \color{#eb7134}{\frac{1}{6}}\right) + \dots = \sum_{k=1}^{\infty}\left(\color{#4334eb}{\frac{1}{2k-1}} + \color{#eb7134}{\frac{1}{2k}}\right).\] Clearly

\[\color{#4334eb}{\frac{1}{2k-1}} > \color{#eb7134}{\frac{1}{2k}} > 0 \text{ for all }k\ge 1~\Rightarrow~\sum_{k=1}^{\infty}\color{#4334eb}{\frac{1}{2k-1}} > \sum_{k=1}^{\infty}\color{#eb7134}{\frac{1}{2k}} = \frac{s}{2},\] so \[\begin{aligned}s &= \sum_{k=1}^{\infty}\color{#4334eb}{\frac{1}{2k-1}} + \sum_{k=1}^{\infty}\color{#eb7134}{\frac{1}{2k}} = \sum_{k=1}^{\infty}\color{#4334eb}{\frac{1}{2k-1}} + \frac{1}{2}\underbrace{\sum_{k=1}^{\infty}\frac{1}{k}}_{=s} \\ &= \sum_{k=1}^{\infty}\color{#4334eb}{\frac{1}{2k-1}} + \frac{s}{2} > \sum_{k=1}^{\infty}\color{#eb7134}{\frac{1}{2k}} + \frac{s}{2} = \frac{s}{2} + \frac{s}{2} = s.\end{aligned}\] We have thus arrived at the inequality \(s>s\), which is a contradiction. The original assumption of convergence is therefore false, so the series diverges.

\(\square\)

ii) Proof using an integral: The graph of the function \(f(x)=1/(x+1)\) lies below the histogram whose bars have heights \(1/k\), so comparing areas gives

\[\sum_{k=1}^{n} \frac{1}{k} \ge \int_0^n\frac{dx}{x+1} =\ln(n+1)\to\infty \] as \(n\to\infty\).

\(\square\)
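The bound from the integral argument can be observed numerically. The following sketch uses floating-point arithmetic and a handful of sample values of \(n\) chosen here for illustration; it only illustrates the inequality and does not prove it.

```python
from math import log


def harmonic_partial_sum(n):
    """Partial sum 1 + 1/2 + ... + 1/n in floating-point arithmetic."""
    return sum(1.0 / k for k in range(1, n + 1))


for n in (1, 10, 100, 1000, 10000):
    s_n = harmonic_partial_sum(n)
    print(n, s_n, log(n + 1), s_n >= log(n + 1))
```

The partial sums grow very slowly, roughly like a logarithm, which is why the divergence is not visible from the first few terms.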

Positive series

Computing the sum of a series is often difficult, and frequently even impossible if an explicit expression for the sum is required. For many applications an approximate value of the sum suffices, but before that one should find out whether the series converges or diverges.

A series \(\displaystyle{\sum_{k=1}^{\infty} p_k}\) is positive (or has positive terms) if \(p_k > 0\) for all \(k\).

The convergence of positive series is very clear-cut:

Theorem 2.

A positive series converges if and only if its sequence of partial sums is bounded from above.

Reason: The sequence of partial sums is increasing, and an increasing sequence converges exactly when it is bounded from above.

Example

Show that the partial sums of the superharmonic series \[\sum_{k=1}^{\infty}\frac{1}{k^2}\] satisfy \(s_n<2\) for all \(n\), so the series converges.

Solution. The solution is based on the inequality \[\frac{1}{k^2} < \frac{1}{k(k-1)} = \frac{1}{k-1}-\frac{1}{k}\] for \(k\ge 2\), because it implies \[\sum_{k=1}^n\frac{1}{k^2} < 1+ \sum_{k=2}^n\frac{1}{k(k-1)} =2-\frac{1}{n}< 2\] for all \(n\ge 2\). For \(n=1\) we have \(s_1=1<2\).

This argument can also be carried out using an integral.

Leonhard Euler found in 1735 that the sum of the series is \(\pi^2/6\). His argument was based on comparing the series and product expansions of the sine function.
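Both the bound \(s_n < 2 - \frac{1}{n}\) and the value \(\pi^2/6\) can be compared with computed partial sums. This is a sketch for illustration; the function name `s` mirrors the notation \(s_n\) above, and the sample indices are arbitrary.

```python
from fractions import Fraction
from math import pi


def s(n):
    """Exact partial sum s_n = 1 + 1/4 + ... + 1/n**2."""
    return sum(Fraction(1, k * k) for k in range(1, n + 1))


for n in (2, 10, 100):
    bound = 2 - Fraction(1, n)
    print(n, float(s(n)), float(bound), s(n) < bound)

print(pi ** 2 / 6)  # value of the full sum according to Euler's result
```

The partial sums stay below the telescoping bound and approach \(\pi^2/6\) from below, with a remaining gap smaller than \(\frac{1}{n}\).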

Absolute convergence

Definition

A series \(\displaystyle{\sum_{k=1}^{\infty} a_k}\) converges absolutely if the positive series \(\sum_{k=1}^{\infty} |a_k|\) converges.

Theorem 3.

An absolutely convergent series converges (in the usual sense) and \[\left| \sum_{k=1}^{\infty} a_k \right| \le \sum_{k=1}^{\infty} |a_k|.\]

This is a special case of the majorant principle, which is discussed further below.

Proof.

Assume that \(\sum_k |a_k|\) converges. Consider separately the positive and negative parts of the series \(\sum_k a_k\): let \[b_k=\max (a_k,0)\ge 0 \text{ and } c_k=-\min (a_k,0)\ge 0.\] Since \(b_k,c_k\le |a_k|\), the positive series \(\sum b_k\) and \(\sum c_k\) converge by Theorem 2. Moreover \(a_k=b_k-c_k\), so \(\sum a_k\) converges as the difference of two convergent series.

\(\square\)

Example

Investigate the convergence of the alternating series (the signs alternate between + and -) \[\sum_{k=1}^{\infty}\frac{(-1)^{k+1}}{k^2}=1-\frac{1}{4}+\frac{1}{9}-\dots\]

Solution. Since \[\displaystyle{\left| \frac{(-1)^{k+1}}{k^2}\right| =\frac{1}{k^2}}\] and the superharmonic series \[\sum_{k=1}^{\infty}\frac{1}{k^2}\] converges, the series under study converges absolutely, and therefore also in the usual sense.

Alternating harmonic series

Absolute convergence is, however, not the same thing as ordinary convergence:

Example

The alternating harmonic series \[\sum_{k=1}^{\infty}\frac{(-1)^{k+1}}{k} = 1-\frac{1}{2}+\frac{1}{3}-\frac{1}{4}+\dots\] converges, but not absolutely.

(Idea) Plotting the sequence of partial sums \((s_n)\) shows that the partial sums with even and odd indices, \(s_{2n}\) and \(s_{2n+1}\), are monotone and converge to the same limit.

The sum of the series is \(\ln 2\), which can be found by integrating the sum formula of the geometric series.
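The behaviour described in the idea can be observed numerically. The sketch below uses floating-point arithmetic; the function name and the sample indices are assumptions for illustration, and the output is a numerical observation rather than a proof.

```python
from math import log


def alternating_partial_sum(n):
    """Partial sum s_n = 1 - 1/2 + 1/3 - ... up to the n-th term."""
    return sum((-1) ** (k + 1) / k for k in range(1, n + 1))


for n in (1, 5, 50, 500):
    even = alternating_partial_sum(2 * n)        # s_{2n}, increasing in n
    odd = alternating_partial_sum(2 * n + 1)     # s_{2n+1}, decreasing in n
    print(n, even, odd, even < log(2) < odd)
```

In these samples the even-indexed partial sums lie below \(\ln 2\) and the odd-indexed ones above it, and the two values move towards each other as \(n\) grows.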

Figure note: the points are joined by line segments for clarity.

Convergence tests

Comparison tests

The preceding considerations generalize as follows:

Theorem 4.

(Majorant principle) If \(|a_k|\le p_k\) for all \(k\) and \(\sum_{k=1}^{\infty} p_k\) converges, then \(\sum_{k=1}^{\infty} a_k\) also converges.

(Minorant principle) If \(0\le p_k \le a_k\) for all \(k\) and \(\sum p_k\) diverges, then \(\sum a_k\) also diverges.

Proof of the majorant principle. Since \[a_k=|a_k|-(|a_k|-a_k)\] and \[0\le |a_k|-a_k \le 2|a_k|,\] the series \(\sum a_k\) converges as the difference of two convergent series. Here we use the earlier Theorem 2 for positive series; this is not circular reasoning!

Proof of the minorant principle. The assumptions imply that the partial sums of the series \(\sum a_k\) grow without bound, so the series diverges.

\(\square\)

Example

Investigate the convergence of the series \[\sum_{k=1}^{\infty} \frac{1}{1+k^3} \ \text{ and }\ \sum_{k=1}^{\infty} \frac{1}{\sqrt{k}}.\]

Solution. Since \[0<\frac{1}{1+k^3} < \frac{1}{k^3}\le \frac{1}{k^2}\] for all \(k\in \mathbb{N}\), the first series converges by the majorant principle.

On the other hand \[\displaystyle{\frac{1}{\sqrt{k}}\ge \frac{1}{k}}\] for all \(k\in\mathbb{N}\), so the second series has the divergent harmonic series as a minorant and therefore diverges.

Ratio test

In practice, one of the best ways to study convergence is the ratio test, in which the behaviour of consecutive terms of the series is compared with a suitable geometric series:

Theorem 5a.

Suppose that there is a constant \(0< Q < 1\) such that \[ \left| \frac{a_{k+1}}{a_k} \right| \le Q\] for all indices \(k\ge k_0\).

Then the series \(\sum a_k\) converges (and its "rate of convergence" is of the same order as that of the geometric series \(\sum Q^k\), or even better).

Since the initial part of a series does not affect convergence (although it does, of course, affect the sum!), we may assume that the inequality holds for all indices \(k\).

It follows that \[|a_{k}|\le Q|a_{k-1}|\le Q^2|a_{k-2}|\le \dots\le Q^k|a_0|,\] so the series has a geometric majorant, and it converges.

\(\square\)

Limit form of the ratio test

Theorem 5b.

Suppose that the limit \[\lim_{k\to \infty} \left| \frac{a_{k+1}}{a_k} \right| = q\] exists. Then the series \(\sum a_k\) \[ \begin{cases}\text{converges,} & \text{ if } 0\le q< 1,\\ \text{diverges,} & \text{ if } q > 1,\\ \text{may converge or diverge,} & \text{ if } q=1. \end{cases} \]

(Idea) For a geometric series, the ratio of two consecutive terms is exactly \(q\). According to the ratio test, the convergence of other series can (often) be studied by the same principle, when the ratio \(q\) is replaced by this limit.

In the case \(q<1\), choose \(\varepsilon =(1-q)/2>0\) in the definition of the limit. Then, for all indices \(k\ge k_{\varepsilon}\), we have \[ |a_{k+1}/a_k| < q + \varepsilon = (q+1)/2 = Q < 1, \] and the claim follows from Theorem 4.

In the case \(q>1\), the general term of the series does not tend to zero, so the series diverges.

The last case \(q=1\) carries no information (and there is nothing to prove).

This case occurs both for the harmonic series (\(a_k=1/k\), diverges!) and for the over-harmonic series (\(a_k=1/k^2\), converges!). In these cases convergence has to be studied by other methods, as was done earlier.

\(\square\)

Example

Does the series \[\sum_{k=1}^{\infty}\frac{(-1)^{k+1}k}{2^k}= \frac{1}{2}-\frac{2}{4}+\frac{3}{8}-\dots\] converge?

Solution. Here \(a_k=(-1)^{k+1}k/2^k\), so \[ \left| \frac{a_{k+1}}{a_k}\right| = \left| \frac{(-1)^{k+2}(k+1)/2^{k+1}}{(-1)^{k+1}k/2^k}\right| =\frac{k+1}{2k} =\frac{1}{2}+\frac{1}{2k}\to \frac{1}{2} < 1, \] as \(k\to\infty\). By the ratio test, the series converges.
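The ratio computation can be checked numerically. The sketch below is an illustration added here, not part of the original notes; the function name `a` simply mirrors \(a_k\). The closed-form value \(2/9\) used for comparison is also an addition: it comes from the standard identity \(\sum_{k\ge 1} kx^k = x/(1-x)^2\) evaluated at \(x=-1/2\).

```python
def a(k):
    """k-th term of the series sum (-1)^(k+1) * k / 2^k."""
    return (-1) ** (k + 1) * k / 2**k

# Ratios |a_{k+1} / a_k| = (k + 1) / (2k) approach 1/2.
ratios = [abs(a(k + 1) / a(k)) for k in (1, 10, 100, 500)]
print(ratios)

# Partial sum of the first 59 terms, compared with 2/9.
partial = sum(a(k) for k in range(1, 60))
print(partial, 2 / 9)
```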

3. Continuity

In this section we define a limit of a function \(f\colon S\to \mathbb{R}\) at a point \(x_0\). It is assumed that the reader is already familiar with limit of a sequence, the real line and the general concept of a function of one real variable.

Limit of a function

Let \(S\) be a subset of the real numbers, and assume that \(x_0\) is a point for which there is a sequence \((x_k)\) of points of \(S\) such that \(x_k\to x_0\) as \(k\to \infty\). Here the set \(S\) is often the set of all real numbers, but sometimes an interval (open or closed).

Example 1.

Note that it is not necessary for \(x_0\) to be in \(S\). For example, the sequence \(x_k = 1/k\to 0\) as \(k\to \infty\) in \(S=]0,2[\), and \(x_k\in S\) for all \(k=1,2,\ldots\) but \(0\) is not in \(S\).

Limit of a function

We consider a function \(f\) defined in the set \(S\). Then we define the limit of the function \(f\colon S\to \mathbb{R}\) at \(x_0\) as follows.

Definition 1: Limit of a function

Suppose that \(S\subset \mathbb{R}\) and \(f\colon S\to \mathbb{R}\) is a function. Then we say that \(f\) has a limit \(y_{0}\) at \(x_{0}\), and write \[\lim_{x \to x_{0}}f(x)=y_{0},\] if \(f(x_{k})\to y_{0}\) as \(k\to \infty\) for every sequence \((x_{k})\) in \(S\setminus\{x_0\}\) such that \(x_{k}\to x_{0}\) as \(k\to \infty\).

Example 2.

The function \(f\colon \mathbb{R} \to \mathbb{R}\) defined by \(f(x)=x^2\) has a limit \(0\) at the point \(x=0\).

Function \(y=x^2\).

Example 3.

The function \(g\colon\mathbb{R}\to \mathbb{R}\) defined by \[g(x)= \left\{\begin{array}{rl}0 & \text{ for }x<0, \\ 1 & \text{ for }x\ge 0.\end{array}\right.\] does not have a limit at the point \(x=0\). To prove this formally, take the sequences \((x_k)\), \((y_k)\) defined by \(x_k=1/k\) and \(y_k=-1/k\) for \(k=1,2,\ldots\). Both sequences lie in \(\mathbb{R}\setminus\{0\}\) and converge to \(0\), but \(g(x_k)=1\) and \(g(y_k)=0\) for every \(k\), so the sequences \((g(x_k))\) and \((g(y_k))\) have different limits.

Function \[g(x)= \left\{\begin{array}{rl}0 & \text{ for }x<0, \\ 1 & \text{ for }x\ge 0.\end{array}\right.\]

Example 4.

The function \(f(x)=x \sin(1/x)\), \(x>0\) does have the limit \(0\) at \(0\).

Function \(y=x\sin(1/x)\) for \(x>0\).

Example 5.

The function \(g(x)= \sin(1/x)\), \(x>0\) does not have a limit at \(0\).

Function \(y=\sin(1/x)\) for \(x>0\).
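Examples 4 and 5 can be explored with the sequence definition of the limit. The sketch below is an illustration under assumed choices: the two sequences \(x_k = 1/(k\pi)\) and \(y_k = 1/(\pi/2 + 2k\pi)\) are picked here and do not appear in the original text. Both tend to \(0\), yet \(\sin(1/x_k)=0\) while \(\sin(1/y_k)=1\), so \(\sin(1/x)\) cannot have a limit at \(0\). For \(x\sin(1/x)\) the bound \(|x\sin(1/x)|\le |x|\) forces the values to \(0\) along every such sequence.

```python
import math

def f(x):
    return x * math.sin(1 / x)   # Example 4

def g(x):
    return math.sin(1 / x)       # Example 5

indices = (1, 10, 100, 1000)
xs = [1 / (k * math.pi) for k in indices]                    # sin(1/x_k) = sin(k*pi) = 0
ys = [1 / (math.pi / 2 + 2 * k * math.pi) for k in indices]  # sin(1/y_k) = 1

for x, y in zip(xs, ys):
    # g takes values near 0 and near 1; f is squeezed towards 0.
    print(g(x), g(y), f(x), f(y))
```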

One-sided limits

An important property of limits is that they are always unique. That is, if \(\lim_{x\to x_0} f(x)=a\) and \(\lim_{x\to x_0} f(x)=b\), then \(a=b\). Although a function may have only one limit at a given point, it is sometimes useful to study the behavior of the function when \(x_k\) approaches the point \(x_0\) from the left or the right side. These limits are called the left and the right limit of the function \(f\) at \(x_0\), respectively.

Definition 2: One-sided limits

Suppose \(S\) is a set in \(\mathbb{R}\) and \(f\) is a function defined on the set \(S\setminus\{x_0\}\). Then we say that \(f\) has a left limit \(y_{0}\) at \(x_{0}\), and write \[\lim_{x \to x_{0}-}f(x)=y_{0},\] if \(f(x_{k})\to y_{0}\) as \(k\to \infty\) for every sequence \((x_{k})\) in the set \(S\cap ]-\infty,x_0[ =\{ x\in S : x < x_0 \}\) such that \(x_{k}\to x_{0}\) as \(k\to \infty\).

Similarly, we say that \(f\) has a right limit \(y_{0}\) at \(x_{0}\), and write \[\lim_{x \to x_{0}+}f(x)=y_{0},\] if \(f(x_{k})\to y_{0}\) as \(k\to \infty\) for every sequence \((x_{k})\) in the set \(S\cap ]x_0,\infty[ =\{ x\in S : x_0 < x \}\) such that \(x_{k}\to x_{0}\) as \(k\to \infty\).

Theorem 1: Limit of a function

A function \(f\colon S\to \mathbb{R}\) has a limit \(y_0\) at the point \(x_0\) if and only if \[\lim_{x \to x_{0}-}f(x)= \lim_{x \to x_{0}+}f(x)=y_{0}.\]

Example 6.

The sign function \[\mathrm{sgn}(x)= \frac{x}{|x|}\] is defined on \(S= \mathbb{R}\setminus \{0\}\). Its left and right limits at \(0\) are \[\lim_{x\to 0-} \mathrm{sgn}(x)= -1,\qquad \lim_{x\to 0+} \mathrm{sgn}(x)= 1.\] However, the function \(\mathrm{sgn}(x)\) does not have a limit at \(0\).

Function \(y = \frac{x}{|x|}\).

Example 7.

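The function \(f\colon \mathbb{R}\setminus \{0\} \to \mathbb{R}\), \[f(x) = \frac{1}{x},\] does not have one-sided limits at \(0\), because its values grow without bound as \(x\) approaches \(0\) from either side.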

Limit rules

The following limit rules are immediately obtained from the definition and basic algebra of real numbers.

Theorem 2: Limit rules

Let \(c\in \mathbb{R}, \lim_{x\to x_{0}} f(x)=a\) and \(\lim_{x\to x_{0}} g(x)=b.\) Then

- \(\lim_{x\to x_{0}} (cf)(x)=ca\),

- \(\lim_{x\to x_{0}} (f+g)(x)=a+b\),

- \(\lim_{x\to x_{0}} (fg)(x)=ab\),

- \(\lim_{x\to x_{0}} (f/g)(x)=a/b \ (\text{if} \ b \neq 0)\).

Example 8.

Finding limits by calculating \(f(x_0)\):

a) \[\lim_{x\to 2}(5x-3)=10-3=7.\]

b) \[\lim_{x\to -2}\frac{3x+2}{x+5} = \frac{-6+2}{-2+5}=-\frac{4}{3}.\]

c) \[\lim_{x\to 2} \frac{x^2-4}{x-2} = \lim_{x\to 2} \frac{(x+2)(x-2)}{x-2} = \lim_{x\to 2}(x+2) = 4.\]

Limits and continuity

In this section, we define continuity of a function. The intuitive idea behind continuity is that the graph of a continuous function is a connected curve. However, this is not sufficient as a mathematical definition for several reasons. For example, by using this definition, one cannot easily decide if \(\tan(x)\) is a continuous function or not.

For continuity of a function \(f\) at a given point \(x_0\), it is required that:

\(f(x_0)\) is defined,

\(\lim_{x \to x_0} f(x)\) exists (and is finite),

\(\lim_{x \to x_0} f(x) = f(x_0)\).

In other words:

Definition 2: Continuity

A function \(f\colon S\to \mathbb{R}\) is continuous at a point \(x_{0}\in S\), if \[\lim_{x\to x_{0}}f(x)=f(x_{0}).\] A function \(f\colon S\to \mathbb{R}\) is continuous, if it is continuous at every point \(x_{0}\in S\).

Example 1.

Let \(c\in \mathbb{R}\). Functions \(f,g,h\) defined by \(f(x)=c\), \(g(x)=x\), \(h(x)=|x|\) are continuous at every point \(x\in \mathbb{R}\).

Why? If \(x_{k}\to x_{0}\), then \(f(x_{k})=c\) and \(\lim_{k\to \infty}f(x_k)= c=f(x_{0})\). For \(g\), we have \(g(x_{k})=x_{k}\) and hence, \(\lim_{k\to\infty} g(x_k)=x_{0}=g(x_{0})\). Similarly, \(h(x_{k})=|x_{k}|\) and \(\lim_{k\to\infty}h(x_k)= |x_{0}|=h(x_{0})\).

Continuous functions \(y=c\), \(y=x\) and \(y=|x|\).

Example 2.

Let \(x_{0}\in \mathbb{R}\). We define a function \(f\colon\mathbb{R}\to \mathbb{R}\) by \[f(x)= \left\{\begin{array}{rl}2 & \text{ for }x \lt x_{0}, \\ 3 & \text{ for }x\geq x_{0}.\end{array}\right.\] Then \[\lim_{x \to x_{0}^{-}}f(x)=2,\text{ and } \lim_{x \to x_{0}^{+}}f(x)=3.\] Therefore \(f\) is not continuous at the point \(x_{0}\).

Some basic properties of continuous functions of one real variable are given next. From the limit rules (Theorem 2) we obtain:

Theorem 3.

The sum, the product and the difference of continuous functions are continuous. Then, in particular, polynomials are continuous functions. If \(f\) and \(g\) are polynomials and \(g(x_{0})\neq 0\), then \(f/g\) is continuous at a point \(x_{0}\).

A composition of continuous functions is continuous if it is defined:

Theorem 4.

Let \(f\colon \mathbb{R}\to\mathbb{R}\) and \(g\colon \mathbb{R}\to \mathbb{R}\). Suppose that \(f\) is continuous at a point \(x_{0}\) and \(g\) is continuous at \(f(x_{0})\). Then \(g\circ f\colon \mathbb{R}\to \mathbb{R}\) is continuous at a point \(x_{0}\).

Note. If \(f\) is continuous, then \(|f|\) is continuous.

Why?

Write \(g(x):=|x|\). Then \((g\circ f)(x)=|f(x)|\).

Note. If \(f\) and \(g\) are continuous, then \(\max (f,g)\) and \(\min (f,g)\) are continuous. (Here \(\max (f,g)(x):=\max \{f(x),g(x)\}\).)

Why?

Write \[\begin{cases}(a+b)+|a-b|=2\max(a,b), \\ (a+b)-|a-b|=2\min(a,b). \end{cases} \]

\[\text{Function }f(x)= \left\{\begin{array}{rl}2 & \text{ for }x\lt x_{0}, \\ 3 & \text{ for }x\geq x_{0}. \end{array}\right.\]

Delta-epsilon definition

The so-called \((\varepsilon,\delta)\)-definition for continuity is given next. The basic idea behind this test is that, for a function \(f\) continuous at \(x_0\), the values of \(f(x)\) should get closer to \(f(x_0)\) as \(x\) gets closer to \(x_0\).

This is the standard definition of continuity in mathematics, because it also works for more general classes of functions than the ones in this course, but it is not used in high-school mathematics. This important definition will be studied in-depth in Analysis 1 / Mathematics 1.

\((\varepsilon,\delta)\)-test:

Theorem 5: \((\varepsilon,\delta)\)-definition

Let \(f: S\to \mathbb{R}\). Then the following conditions are equivalent:

- \(\lim_{x\to x_0} f(x)= y_0\),

- For all \(\varepsilon> 0\) there exists \(\delta >0\) such that \(|f(x) - y_0| <\varepsilon\) for all \(x\in S\) with \(0 < |x-x_0| < \delta\).

Example 3.

From Theorem 3 we already know that the function \(f: \mathbb{R} \to \mathbb{R}\) defined by \(f(x) = 4x\) is continuous. We can also use the \((\varepsilon,\delta)\)-definition to prove this.

Proof. Let \(x_0 \in \mathbb{R}\) and \(\varepsilon > 0\). Now \[|f(x) - f(x_0)| = |4x - 4x_0| = 4|x - x_0| < \varepsilon,\] when \[|x - x_0| < \delta \text{, where } \delta = \frac{\varepsilon}{4}.\]

So for all \(\varepsilon > 0\) there exists \(\delta > 0\) such that if \(|x - x_0| < \delta\), then \(|f(x) - f(x_0)| < \varepsilon\) for all \(x \in \mathbb{R}\). Thus by Theorem 5 \(\lim_{x \to x_0} f(x) = f(x_0)\) for all \(x_0 \in \mathbb{R}\) and by definition this means that the function \(f: \mathbb{R} \to \mathbb{R}\) is continuous.

\(\square\)

Interactivity. \((\varepsilon, \delta)\) in example 3.
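The choice \(\delta = \varepsilon/4\) in Example 3 can be tested on sample points. The sketch below is only a spot check under assumed values (the point \(x_0 = 3/7\), the three values of \(\varepsilon\) and the helper name `delta_for` are chosen here for illustration); it uses exact rational arithmetic so that rounding does not interfere with the strict inequalities. A finite sample does not replace the proof.

```python
from fractions import Fraction
import random

def f(x):
    return 4 * x

def delta_for(eps):
    """The delta chosen in the proof of Example 3."""
    return eps / 4

random.seed(0)
x0 = Fraction(3, 7)  # arbitrary sample point
ok = True
for eps in (Fraction(1), Fraction(1, 10), Fraction(1, 1000)):
    d = delta_for(eps)
    for _ in range(1000):
        # Random rational t with -1 < t < 1, so that |x - x0| < d.
        t = Fraction(random.randint(-999, 999), 1000)
        x = x0 + t * d
        ok = ok and abs(f(x) - f(x0)) < eps
print(ok)
```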

Example 4.

Let \(x_{0}\in \mathbb{R}\). We define a function \(f\colon\mathbb{R}\to \mathbb{R}\) by \[f(x)= \left\{\begin{array}{rl}2 & \text{ for }x \lt x_{0}, \\ 3 & \text{ for }x \geq x_{0}.\end{array}\right.\] In Example 2 we saw that this function is not continuous at the point \(x_0\). To prove this using the \((\varepsilon,\delta)\)-test, we need to find some \(\varepsilon > 0\) such that for every \(\delta > 0\) there is a point \(x_\delta \in \mathbb{R}\) with \(|x_\delta - x_0| < \delta\) but \(|f(x_\delta) - f(x_0)| \ge \varepsilon\).

Proof. Let \(\delta > 0\) and \(\varepsilon = 1/2\). By choosing \(x_\delta = x_0 - \delta /2\), we have

\[0 < |x_\delta-x_0| = \left|x_0 - \frac{\delta}{2} - x_0\right| = \frac{\delta}{2} < \delta,\]

and

\[|f(x_\delta) - f(x_0)| = |2 - 3| = 1 > \varepsilon.\]

Therefore by Theorem 5 \(f\) is not continuous at the point \(x_{0}\).

\(\square\)

Interactivity. \((\varepsilon, \delta)\) in example 4.

Properties of continuous functions

This section contains some fundamental properties of continuous functions. We start with the Intermediate Value Theorem for continuous functions, also known as Bolzano's Theorem. This theorem states that a function that is continuous on a given (closed) real interval attains all values between its values at the endpoints of the interval. Intuitively, this follows from the fact that the graph of a continuous function defined on a real interval is an unbroken curve.

Theorem 6: Intermediate Value Theorem

If \(f\colon [a,b]\to \mathbb{R}\) is continuous and \(f(a) \lt s \lt f(b)\), then there is at least one \(c\in ]a,b[\) such that \(f(c)=s\).

The Intermediate Value Theorem.

Example 1.

Let function \(f:\mathbb{R} \to \mathbb{R}\), where \[f(x) = x^5 - 3x - 1.\] Show that there is at least one \(c \in \mathbb{R}\) such that \(f(c) = 0\).

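Solution. As a polynomial function, \(f\) is continuous. Because \[f(1) = 1^5 - 3 \cdot 1 - 1 = -3 < 0\] and \[f(-1) = (-1)^5 - 3 \cdot (-1) - 1 = 1 > 0,\] the value \(0\) lies between \(f(1)\) and \(f(-1)\). By the Intermediate Value Theorem (applied to \(-f\) on \([-1,1]\), since the theorem is stated for \(f(a) \lt s \lt f(b)\)) there is at least one \(c \in ]-1, 1[\) such that \(f(c) = 0\).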

Function \(f(x) = x^5 - 3x - 1\).
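The Intermediate Value Theorem only guarantees that a zero exists, but the same sign-change idea can be used to locate one. The following is a minimal bisection sketch, which is a standard technique brought in here for illustration and not part of the original example; the function name `bisect` and the tolerance are assumptions.

```python
def f(x):
    return x**5 - 3 * x - 1

def bisect(func, a, b, tol=1e-12):
    """Halve [a, b] repeatedly, keeping a sign change of func inside."""
    fa, fb = func(a), func(b)
    if fa * fb > 0:
        raise ValueError("func(a) and func(b) must have opposite signs")
    while b - a > tol:
        m = (a + b) / 2
        fm = func(m)
        if fm == 0:
            return m
        if fa * fm < 0:
            b, fb = m, fm   # the sign change is in [a, m]
        else:
            a, fa = m, fm   # the sign change is in [m, b]
    return (a + b) / 2

c = bisect(f, -1.0, 1.0)
print(c, f(c))
```

Each step keeps an interval on which \(f\) changes sign, so the theorem applies again on the smaller interval.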

Example 2.

Let \(f(x)=x^3-x=x(x^2-1)=x(x-1)(x+1)\).

By the Intermediate Value Theorem we have \(f(x)<0\) for \(x<-1\) or \(0 \lt x \lt 1\). Similarly, \(f(x)>0\) for \(-1 \lt x \lt 0\) or \(1 \lt x\), because:

- \(f(x)=0\) if and only if \(x=0\) or \(x=\pm 1\), and

- \(f(-2)<0, f(-1/2)>0, f(1/2)<0\) and \(f(2)>0\).

Function \(f(x) = x^3 - x\).

Next we prove that a continuous function defined on a closed real interval is necessarily bounded. For this result, it is important that the interval is closed. A counter example for an open interval is given after the next theorem.

Theorem 7.

Let \(f\colon [a,b]\to \mathbb{R}\) be continuous. Then \(f\) is bounded.

Note. If \(f\colon ]a,b[\to \mathbb{R}\) is continuous, it can be unbounded.

Example 4.

Let \(f\colon ]0,1]\to \mathbb{R}\), where \(f(x)=1/x\). Now \[\lim_{x\to 0+}f(x)=\infty.\]

Theorem 8.

Let \(f\colon [a,b]\to \mathbb{R}\) be continuous. Then there exist points \(c,d\in [a,b]\) such that \(f(c)\leq f(x)\leq f(d)\) for all \(x\in [a,b]\), i.e. \(f(c)\) is minimum and \(f(d)\) is maximum of \(f\) on the interval \([a,b]\).

Function \(f(x) = 1/x\) for \(x > 0\).

Example 5.

Let \(f:[-1,2] \to \mathbb{R}\), where \[f(x) = -x^3 - x + 3.\] The domain of the function is \([-1,2]\). To determine the range of the function, we first notice that the function is decreasing. We will now show this.

Let \(x_1 < x_2\). Then \[x_{1}^3 < x_{2}^3\] and \[-x_{1}^3 > -x_{2}^3.\]

Because \(x_1 < x_2\), we also have \(-x_1 > -x_2\). Adding the two inequalities gives \[-x_1^3-x_1 > -x_2^3 -x_2\] and hence \[-x_1^3-x_1 +3 > -x_2^3 -x_2 +3.\] Thus, if \(x_1 < x_2\) then \(f(x_1) > f(x_2)\), which means that the function \(f\) is decreasing.

We know that a decreasing function has its minimum value at the right endpoint of the interval. Thus, the minimum value of \(f:[-1,2] \to \mathbb{R}\) is \[f(2) = -2^3 - 2 + 3 = -7.\] Similarly, a decreasing function has its maximum value at the left endpoint of the interval, and so the maximum value of \(f:[-1,2] \to \mathbb{R}\) is \[f(-1) = -(-1)^3 - (-1) + 3 = 5.\]

As a polynomial function, \(f\) is continuous and it therefore takes all the values between its minimum and maximum values. Hence, the range of \(f\) is \([-7, 5]\).

Function \(-x^3 - x + 3\) for \([-1, 2]\).
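The conclusions of Example 5 can be checked on a grid of sample points. This is a rough numerical sketch; the grid size of 1000 subintervals is an arbitrary choice made here, and sampling does not prove monotonicity.

```python
def f(x):
    return -x**3 - x + 3

n = 1000
xs = [-1 + 3 * i / n for i in range(n + 1)]  # grid on [-1, 2]
vals = [f(x) for x in xs]

# Each sampled value is strictly smaller than the previous one.
decreasing = all(u > v for u, v in zip(vals, vals[1:]))
print(decreasing, min(vals), max(vals))
```

On this grid the largest value occurs at the left endpoint and the smallest at the right endpoint, in line with the range \([-7, 5]\).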

Example 6.

Suppose that \(f\) is a polynomial. Then \(f\) is continuous on \(\mathbb{R}\) and, by Theorem 7, \(f\) is bounded on every closed interval \([a,b]\), \(a \lt b\). Furthermore, by Theorem 8, \(f\) must have minimum and maximum values on \([a,b]\).

Note. Theorem 8 is connected to the Intermediate Value Theorem in the following way:

If \(f\colon [a,b]\to \mathbb{R}\) is continuous, then there exist points \(x_1,x_2\in [a,b]\) such that \(f([a,b])=[f(x_1),f(x_2)]\).

4. Derivative

Derivative

The definition of the derivative of a function is given next. We start with an example illustrating the idea behind the formal definition.

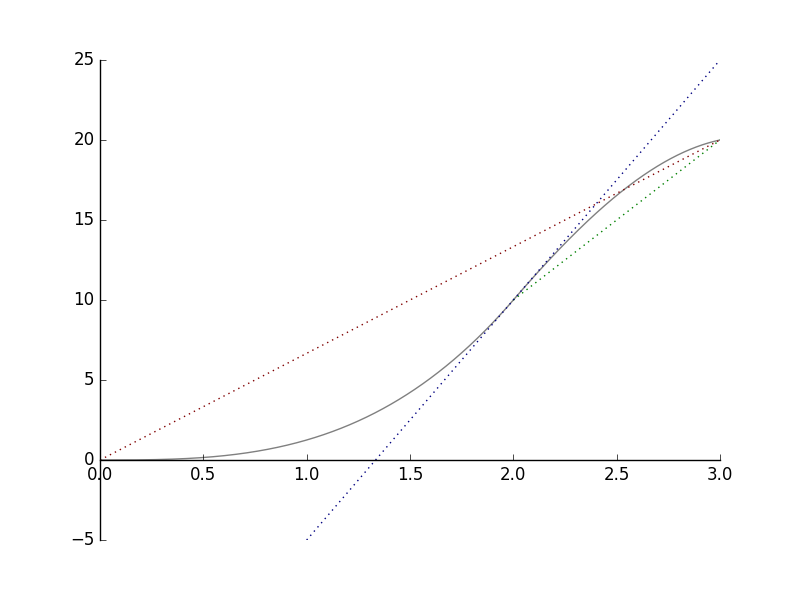

Example 0.

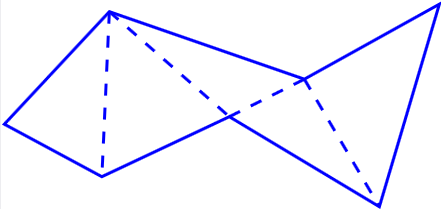

The graph below shows how far a cyclist gets from his starting point.

a) Look at the red line. We can see that in three hours, the cyclist moved \(20\) km. The average speed of the whole trip is \(20/3 \approx 6.7\) km/h.

b) Now look at the green line. We can see that during the third hour the cyclist moved \(10\) km further. That makes the average speed of that time interval \(10\) km/h.

Notice that the slope of the red line is \(20/3 \approx 6.7\) and that the slope of the green line is \(10\). These are the same values as the corresponding average speeds.

c) Look at the blue line. It is the tangent of the curve at the point \(x=2\) h. Using the same principle as with average speeds, we conclude that two hours after departure, the speed of the cyclist was \(30/2\) km/h \(= 15\) km/h.

Now we will proceed to the general definition:

Definition: Derivative

Let \((a,b)\subset \mathbb{R}\). The derivative of function \(f\colon (a,b)\to \mathbb{R}\) at the point \(x_0\in (a,b)\) is \[f'(x_0):=\lim_{h\to 0} \frac{f(x_0+h)-f(x_0)}{h}.\] If \(f'(x_0)\) exists, then \(f\) is said to be differentiable at the point \(x_0\).

Note: Since \(x = x_0+h\), then \(h=x-x_0\), and thus the definition can also be written in the form \[f'(x_0):=\lim_{x\to x_0} \frac{f(x)-f(x_0)}{x-x_0}.\]

The derivative can be denoted in different ways: \[ f'(x_0)=Df(x_0) =\left. \frac{df}{dx}\right|_{x=x_0}, \ \ f'=Df =\frac{df}{dx}. \]

Interpretation. Consider the curve \(y = f(x)\). Now if we draw a line through the points \((x_0,f(x_0))\) and \((x_0+h, f(x_0+h))\), we see that the slope of this line is \[\frac{f(x_0+h)-f(x_0)}{x_0+h-x_0} = \frac{f(x_0+h)-f(x_0)}{h}.\] As \(h \to 0\), the second point approaches \((x_0, f(x_0))\) and the line through the two points tends to a limiting line through \((x_0, f(x_0))\). This limiting line is the tangent of the curve \(y=f(x)\) at the point \((x_0,f(x_0))\) and its slope is \[\lim_{h\to 0} \frac{f(x_0+h)-f(x_0)}{h},\] which is the derivative of the function \(f\) at \( x_0\). Hence, the tangent is given by the equation \[y=f(x_0)+f'(x_0)(x-x_0).\]

Interactivity. Move the point of intersection and observe changes on the tangent line of the curve.

Example 1.

Let \(f\colon \mathbb{R} \to \mathbb{R}\) be the function \(f(x) = x^3 + 1\). The derivative of \(f\) at \(x_0 = 1\) is \[\begin{aligned}f'(1) &=\lim_{h \to 0} \frac{f(1+h)-f(1)}{h} \\ &=\lim_{h \to 0} \frac{(1+h)^3 + 1 - 1^3 - 1}{h} \\ &=\lim_{h \to 0} \frac{1+3h+3h^2+h^3-1}{h} \\ &=\lim_{h \to 0} \frac{h(3+3h+h^2)}{h} \\ &=\lim_{h \to 0} 3+3h+h^2 \\ &= 3. \end{aligned}\]

Function \( x^3 + 1\) and its tangent at the point \(1\).
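The limit in Example 1 can be followed step by step with exact arithmetic. The sketch below is an added illustration (the helper name `difference_quotient` and the sample values of \(h\) are chosen here); it evaluates the difference quotient at \(x_0 = 1\) and compares it with the simplified expression \(3+3h+h^2\) from the computation above.

```python
from fractions import Fraction

def f(x):
    return x**3 + 1

def difference_quotient(func, x0, h):
    """Slope of the line through (x0, func(x0)) and (x0 + h, func(x0 + h))."""
    return (func(x0 + h) - func(x0)) / h

for h in (Fraction(1, 10), Fraction(1, 1000), Fraction(-1, 1000)):
    q = difference_quotient(f, Fraction(1), h)
    # q agrees exactly with 3 + 3h + h^2 and approaches 3 as h shrinks.
    print(h, q, q == 3 + 3 * h + h**2)
```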

Example 2.

Let \(f\colon \mathbb{R} \to \mathbb{R}\) be the function \(f(x)=ax+b\). We find the derivative of \(f(x)\).

Immediately from the definition we get: \[\begin{aligned}f'(x) &=\lim_{h\to 0} \frac{f(x+h)-f(x)}{h} \\ &=\lim_{h\to 0} \frac{[a(x+h)+b]-[ax+b]}{h} \\ &=\lim_{h\to 0} a \\ &=a.\end{aligned}\]

Here \(a\) is the slope of the tangent line. Note that the derivative at \(x\) does not depend on \(x\) because \(y=ax+b\) is the equation of a line.

Note. When \(a=0\), we get \(f(x) = b\) and \(f'(x) = 0\). The derivative of a constant function is zero.

Example 3.

Let \(g\colon \mathbb{R} \to \mathbb{R}\) be the function \(g(x)=|x|\). Does \(g\) have a derivative at \(0\)?

Now \[g'(x_0)= \begin{cases}+1 & \text{when $x_{0}>0$} \\ -1 & \text{when $x_{0}<0$}\end{cases}\]

The graph \(y=g(x)\) has no tangent at the point \(x_0=0\): \[\frac{g(0+h)-g(0)}{h}= \frac{|0+h|-|0|}{h}=\frac{|h|}{h}=\begin{cases}+1 & \text{for $h>0$}, \\ -1 & \text{for $h<0$}.\end{cases}\] Thus \(g'(0)\) does not exist.

Conclusion. The function \(g\) is not differentiable at the point \(0\).

Remark. Let \(f\colon (a,b)\to \mathbb{R}\). If \(f'(x)\) exists for every \(x\in (a,b)\) then we get a function \(f'\colon (a,b)\to \mathbb{R}\). We write:

| (1) | \(f(x)\) | = \(f^{(0)}(x)\), | |

| (2) | \(f'(x)\) | = \(f^{(1)}(x)\) | = \(\frac{d}{dx}f(x)\), |

| (3) | \(f''(x)\) | = \(f^{(2)}(x)\) | = \(\frac{d^2}{dx^2}f(x)\), |

| (4) | \(f'''(x)\) | = \(f^{(3)}(x)\) | = \(\frac{d^3}{dx^3}f(x)\), |

| ... |

Here \(f''(x)\) is called the second derivative of \(f\) at \(x\), \(f^{(3)}\) is the third derivative, and so on.

We introduce the notation \begin{eqnarray} C^n\bigl( ]a,b[\bigr) =\{ f\colon \, ]a,b[\, \to \mathbb{R} & \mid & f \text{ is } n \text{ times differentiable on the interval } ]a,b[ \nonumber \\ & & \text{ and } f^{(n)} \text{ is continuous}\}. \nonumber \end{eqnarray} These functions are said to be n times continuously differentiable.

Function \(|x|\).

Example 4.

The distance moved by a cyclist (or a car) is given by \(s(t)\). Then the speed at the moment \(t\) is \(s'(t)\) and the acceleration is \(s''(t)\).

Linearization and differential

Properties of derivative

Next we give some useful properties of the derivative. These properties allow us to find derivatives for some familiar classes of functions such as polynomials and rational functions.

Continuity and derivative

If \(f\) is differentiable at the point \(x_0\), then \(f\) is continuous at the point \(x_0\): \[ \lim_{h\to 0} f(x_0+h) = f(x_0).\] Why? Because if \(f\) is differentiable at \(x_0\), then we get \[f(x_0+h) = f(x_0)+h\frac{f(x_0+h)-f(x_0)}{h} \rightarrow f(x_0)+0\cdot f'(x_0)=f(x_0),\] as \(h \to 0\).

Note. If a function is continuous at the point \(x_0\), it doesn't have to be differentiable at that point. For example, the function \(g(x) = |x|\) is continuous, but not differentiable at the point \(0\).

Differentiation Rules

Next we will give some important rules which are often applied in practical problems concerning determination of the derivative of a given function.

Suppose that \(f\) and \(g\) are differentiable at \(x\).

A Constant Multiplier

\[(cf)'(x) = cf'(x),\ c \in \mathbb{R}\]

Suppose that \(f\) is differentiable at \(x\). We determine: \[(cf)'(x),\] where \(c\in \mathbb{R}\) is a constant.

\[\begin{aligned}\frac{(cf)(x+h)-(cf)(x)}{h} \ & \ = \ \frac{cf(x+h)-cf(x)}{h} \\ & \ = \ c \ \frac{f(x+h)-f(x)}{h}\end{aligned}\]

As \(h\to 0\), we get \[c \ \frac{f(x+h)-f(x)}{h} \to c f'(x).\]

\(\square\)

The Sum Rule

\[(f+g)'(x) = f'(x) + g'(x)\]

Suppose that \(f\) and \(g\) are differentiable at \(x\). We determine \[(f+g)'(x).\]

By the definition: \[\begin{aligned}\frac{(f+g)(x+h)-(f+g)(x)}{h} \ & \ = \ \frac{[f(x+h)+g(x+h)]-[f(x)+g(x)]}{h} \\ & \ = \ \frac{f(x+h)-f(x)}{h}+\frac{g(x+h)-g(x)}{h}\end{aligned}\]

When \(h\to 0\), we get \[\frac{f(x+h)-f(x)}{h}+\frac{g(x+h)-g(x)}{h}\to \ f'(x)+g'(x)\]

\(\square\)

The Product Rule

\[(fg)'(x) = f'(x)g(x) + f(x)g'(x)\]

Suppose that \(f\) and \(g\) are differentiable at \(x\). We determine \[(fg)'(x).\] \[\begin{aligned}\frac{(fg)(x+h)-(fg)(x)}{h} & = \frac{f(x+h)g(x+h)-f(x)g(x)}{h} \\ & = \frac{f(x+h)g(x+h)-f(x)g(x+h)+f(x)g(x+h)-f(x)g(x)}{h} \\ & = \frac{f(x+h)-f(x)}{h}\ g(x+h)+f(x)\ \frac{g(x+h)-g(x)}{h}\end{aligned}\]

When \(h\to 0\), we have \(g(x+h)\to g(x)\), because \(g\) is differentiable and hence continuous at \(x\), and we get \[\frac{f(x+h)-f(x)}{h}g(x+h)+f(x)\frac{g(x+h)-g(x)}{h}\to f'(x)g(x)+f(x)g'(x).\]

\(\square\)

The Power Rule

\[\frac{d}{dx} x^n = nx^{n-1} \text{, } n \in \mathbb{Z}\]

For \( n\ge 1\) we repeatedly apply the product rule, and obtain \[\begin{aligned}\frac{d}{dx}x^n \ & = \frac{d}{dx}(x\cdot x^{n-1}) \\ & = \left(\frac{d}{dx}x\right)x^{n-1}+x\frac{d}{dx}x^{n-1} \\ & \stackrel{dx/dx=1}{=} x^{n-1}+x\frac{d}{dx}x^{n-1} \\ & = x^{n-1}+x\left( x^{n-2}+x\frac{d}{dx}x^{n-2}\right) \\ & = \ldots \\ & = \sum_{k=0}^{n-1} x^{n-1} \\ & = nx^{n-1}.\end{aligned}\]

The case of negative \( n\) is obtained from this and the product rule applied to the identity \( x^n \cdot x^{-n} = 1\).

From the power rule we obtain a formula for the derivative of a polynomial. Let \[P(x)=a_n x^{n}+a_{n-1}x^{n-1}+\ldots+ a_1 x + a_0,\] where \(n\in \mathbb{N}\). Then \[\frac{d}{dx}P(x)=na_nx^{n-1}+(n-1)a_{n-1}x^{n-2}+\ldots +2 a_2 x+a_1.\]

\(\square\)

The Reciprocal Rule

\[\Big(\frac{1}{f}\Big)'(x) = - \frac{f'(x)}{f(x)^2} \text{, } f(x) \neq 0\]

Suppose that \(f\) is differentiable at \(x\) and \(f(x)\neq 0\). We determine \[(\frac{1}{f})'(x).\]

From the definition we obtain: \[\begin{aligned}\frac{(1/f)(x+h)-(1/f)(x)}{h} & = \frac{1/f(x+h)-1/f(x)}{h} \\ & = \frac{\frac{f(x)}{f(x)f(x+h)}-\frac{f(x+h)}{f(x)f(x+h)}}{h} \\ & = \frac{f(x)-f(x+h)}{h}\frac{1}{f(x)f(x+h)}\end{aligned}\]

Because \(f\) is differentiable (and hence continuous) at \(x\), we get \[\frac{f(x)-f(x+h)}{h}\frac{1}{f(x)f(x+h)}\to -\frac{f'(x)}{f(x)^2},\] as \(h\to 0\).

\(\square\)

The Quotient Rule

\[(f/g)'(x) = \frac{f'(x)g(x)-f(x)g'(x)}{g(x)^2},\ g(x) \neq 0\]

Suppose that \(f,g\) are differentiable at \(x\) and \(g(x)\neq 0\). Then \[\begin{aligned}(f/g)'(x) & = \Big( f \cdot \frac{1}{g}\Big) '(x) \\ & = f'(x)\frac{1}{g(x)}-f(x)\frac{g'(x)}{g(x)^2} \\ & = \frac{f'(x)g(x)-f(x)g'(x)}{g(x)^2}.\end{aligned}\]

\(\square\)

Interactivity. Vary \(x\) and the constant multiplier and see the effect of constant multiplier rule in practice.

Example 1.

\[\frac{d}{dx}(x^{2006}+5x^3+42)=\frac{d}{dx}x^{2006}+5\frac{d}{dx}x^3+42\frac{d}{dx}1=2006x^{2005}+5\cdot 3x^2.\]

Example 2.

\[\begin{aligned}\frac{d}{dx} [(x^4-2)(2x+1)] &= \frac{d}{dx}(x^4-2) \cdot (2x+1) + (x^4-2) \cdot \frac{d}{dx}(2x + 1) \\ &= 4x^3(2x+1) + 2(x^4-2) \\ &= 8x^4+4x^3+2x^4-4 \\ &= 10x^4+4x^3-4.\end{aligned}\]

Note. We can check the answer by deriving it in another way: \[\frac{d}{dx} [(x^4-2)(2x+1)] = \frac{d}{dx} (2x^5 +x^4 -4x -2) = 10x^4 +4x^3 -4.\]

Function \( (x^4-2)(2x+1) \).

Example 3.

For \(x \neq 0\) we get \[\frac{d}{dx} \frac{3}{x^3} = 3 \cdot \frac{d}{dx} \frac{1}{x^3} = -3 \cdot \frac{\frac{d}{dx} x^3}{(x^3)^2} = -3 \cdot \frac{3x^2}{x^6}= - \frac{9}{x^4}.\]

Note. There is another way of solving the problem above by noticing that \(\frac{1}{x^3} = x^{-3}\) and differentiating it as a power: \[\frac{d}{dx} \ \frac{3}{x^3} = 3 \cdot \frac{d}{dx} x^{-3} = 3 \cdot (-3x^{-4})= - \frac{9}{x^4}\]

Example 4.

\[\begin{aligned}\frac{d}{dx} \frac{x^3}{1+x^2} & = \frac{(\frac{d}{dx}x^3)(1+x^2)-x^3\frac{d}{dx}(1+x^2)}{(1+x^2)^2} \\ & = \frac{3x^2(1+x^2)-x^3(2x)}{(1+x^2)^2} \\ & = \frac{3x^2+x^4}{(1+x^2)^2}.\end{aligned}\]

Function \(x^3 / (1+x^2)\).
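The results of Examples 2, 3 and 4 can be compared with a numerical derivative. The sketch below uses a central difference quotient, which is an approximation technique introduced here for checking purposes and not one of the rules above; the helper name `derivative`, the step size and the sample points are assumptions.

```python
def derivative(func, x, h=1e-6):
    """Central-difference approximation of func'(x)."""
    return (func(x + h) - func(x - h)) / (2 * h)

checks = [
    # (function, derivative formula obtained in the examples)
    (lambda x: (x**4 - 2) * (2 * x + 1), lambda x: 10 * x**4 + 4 * x**3 - 4),
    (lambda x: 3 / x**3, lambda x: -9 / x**4),
    (lambda x: x**3 / (1 + x**2), lambda x: (3 * x**2 + x**4) / (1 + x**2) ** 2),
]

for func, formula in checks:
    for x in (0.5, 1.0, 2.0):
        # The two printed values should agree to several decimal places.
        print(x, derivative(func, x), formula(x))
```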

Rolle's Theorem

If \(f\) is differentiable at a local extremum \(x_0\in \, ]a,b[\), then \(f'(x_0)=0\).

The one-sided limits of the difference quotient have different signs at a local extremum. For example, for a local maximum it holds that \begin{eqnarray} \frac{f(x_0+h)-f(x_0)}{h} = \frac{\text{negative} }{\text{positive}}&\le& 0, \text{ when } h>0, \nonumber \\ \frac{f(x_0+h)-f(x_0)}{h} = \frac{\text{negative}}{\text{negative}}&\ge& 0, \text{ when } h<0 \nonumber \end{eqnarray} and \(|h|\) is so small that \(f(x_0)\) is a maximum on the interval \([x_0-|h|,x_0+|h|]\).

L'Hospital's Rule

There are many different versions of this rule, but we present only the simplest one. Let us assume that \(f(x_0)=g(x_0)=0\) and the functions \(f,g\) are differentiable on some interval \(]x_0-\delta,x_0+\delta[\). If \[ \lim_{x\to x_0}\frac{f'(x)}{g'(x)} \] exists, then \[ \lim_{x\to x_0}\frac{f(x)}{g(x)}=\lim_{x\to x_0}\frac{f'(x)}{g'(x)}. \]

In the special case \(g'(x_0)\neq 0\) the proof is simple: \[ \frac{f(x)}{g(x)}=\frac{f(x)-f(x_0)}{g(x)-g(x_0)} = \frac{\bigl( f(x)-f(x_0)\bigr) /(x-x_0)}{\bigl( g(x)-g(x_0)\bigr) /(x-x_0)} \to \frac{f'(x_0)}{g'(x_0)}. \] In the general case we need the so-called generalized mean value theorem, which states that \[ \frac{f(x)}{g(x)} = \frac{f'(c)}{g'(c)} \] for some \(c\in \, ]x_0,x[\). Here we have the same point \(c\) both in the numerator and the denominator, so we do not even need the continuity of the derivatives!
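A small numerical illustration of the rule follows. The functions \(f(x)=x^3-1\) and \(g(x)=x^2-1\) with \(x_0=1\) are hypothetical examples chosen here, not taken from the text; they satisfy \(f(1)=g(1)=0\) and \(g'(1)=2\neq 0\), so the simple case of the rule applies and both quotients should approach \(3/2\).

```python
def f(x):
    return x**3 - 1   # hypothetical example with f(1) = 0

def g(x):
    return x**2 - 1   # hypothetical example with g(1) = 0

def f_prime(x):
    return 3 * x**2

def g_prime(x):
    return 2 * x

x0 = 1.0
for h in (1e-1, 1e-3, 1e-5):
    x = x0 + h
    # Both columns tend to f'(1) / g'(1) = 3/2 as h shrinks.
    print(h, f(x) / g(x), f_prime(x) / g_prime(x))
```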

Derivatives of Trigonometric Functions

In this section, we give differentiation formulas for trigonometric functions \(\sin\), \(\cos\) and \(\tan\).

Derivative of Sine

\[\sin'(t)=\cos(t)\]

Function \(\sin(x)\) and its derivative function \(\cos(x)\).

Derivative of Cosine

\[\cos'(t)= - \sin(t)\]

This follows in a similar way as the derivative of Sine, but more easily from the identity \(\cos(t)=\sin(\pi/2-t)\) and the Chain rule to be introduced in the following section.

\(\square\)

Function \(\cos(x)\) and its derivative function \(-\sin(x)\).

Derivative of Tangent

\[\tan'(t) = \frac{1}{\cos^2(t)}=1+\tan^2 t.\]

Because \[\tan(t)=\frac{\sin(t)}{\cos(t)},\] from the quotient rule we obtain \[\tan'(t)=\frac{\sin'(t)\cos(t)-\sin(t)\cos'(t)}{\cos^2(t)}=\frac{\cos^2(t)+\sin^2(t)}{\cos^2(t)}=\frac{1}{\cos^2(t)}=1+\tan^2 t.\]

\(\square\)

Function \(\tan(x)\) and its derivative function \(1/\cos^2(x)\).

Example 1.

\[\frac{d}{dx} (3 \sin(x)) = 3 \sin'(x) = 3 \cos(x).\]

Example 2.

\[\frac{d}{dx} \cos^2 (x) = \cos'(x) \cdot \cos(x) + \cos(x) \cdot \cos'(x) = -2\sin(x)\cos(x).\]

Example 3.

\[\begin{aligned} \frac{d}{dx} \frac{\sin(x) + 1}{\cos(x)} &= \frac{d}{dx} \left( \frac{\sin(x)}{\cos(x)} + \frac{1}{\cos(x)} \right) \\ &= \tan'(x) - \frac{\cos'(x)}{\cos^2(x)} \\ &= \frac{1+\sin(x)}{\cos^2 (x)}.\end{aligned}\]
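The trigonometric formulas and the three examples can be compared with a numerical derivative. As before, this is only a sanity check with a central difference quotient; the helper `derivative`, the dictionary `pairs` and the sample points \(t=0.3\) and \(t=1\) are choices made here for illustration.

```python
import math

def derivative(func, x, h=1e-6):
    """Central-difference approximation of func'(x)."""
    return (func(x + h) - func(x - h)) / (2 * h)

pairs = {
    "sin": (math.sin, math.cos),
    "cos": (math.cos, lambda t: -math.sin(t)),
    "tan": (math.tan, lambda t: 1 + math.tan(t) ** 2),
    "cos^2": (lambda t: math.cos(t) ** 2,
              lambda t: -2 * math.sin(t) * math.cos(t)),
    "(sin + 1)/cos": (lambda t: (math.sin(t) + 1) / math.cos(t),
                      lambda t: (1 + math.sin(t)) / math.cos(t) ** 2),
}

for name, (func, formula) in pairs.items():
    for t in (0.3, 1.0):
        print(name, t, derivative(func, t), formula(t))
```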

The Chain Rule

In this section we learn a formula for finding the derivative of a composite function. This important formula is known as the Chain Rule.

The Chain Rule.

Let \(f\colon \mathbb{R}\to \mathbb{R}\), \(g\colon \mathbb{R}\to \mathbb{R}\) and \(f \circ g \colon \mathbb{R}\to \mathbb{R}\).

Let \(g\) be differentiable at the point \(x\) and \(f\) at \(g(x)\). Then

\[\frac{d}{dx}f(g(x))=f'(g(x))g'(x).\]

Consider

\[\begin{aligned}\frac{f(g(x+h))-f(g(x))}{h} &= \frac{f(g(x+h))-f(g(x))}{h} \ \frac{g(x+h)-g(x)}{g(x+h)-g(x)} \\ &= \frac{f(g(x+h))-f(g(x))}{g(x+h)-g(x)} \ \frac{g(x+h)-g(x)}{h}.\end{aligned}\]

Now let us write \(k(h):=g(x+h)-g(x)\). Then \(g(x+h)=g(x)+k(h)\) and we get \[\frac{f(g(x+h))-f(g(x))}{h}=\frac{f(g(x)+k(h))-f(g(x))}{k(h)}\frac{g(x+h)-g(x)}{h}.\]

Problem. What if \(k(h)=0\)? Note that one cannot divide by zero.

Solution. Define \[E(k):= \begin{cases}0, & \text{for $k=0$}, \\ \frac{f(g(x)+k)-f(g(x))}{k}-f'(g(x)), & \text{for $k\neq 0$},\end{cases}\] so that \[\frac{f(g(x+h))-f(g(x))}{h}=[E(k(h))+f'(g(x))]\frac{g(x+h)-g(x)}{h}.\] Now \(k(h)\to 0\) as \(h\to 0\), because \(g\) is continuous at \(x\), and \(E\) is continuous at \(0\), because \(f\) is differentiable at \(g(x)\). Hence \(E(k(h))\to 0\) and we get \[[E(k(h))+f'(g(x))]\frac{g(x+h)-g(x)}{h}\to f'(g(x))g'(x)\] as \(h\to 0\).

\(\square\)

Example 1.

The problem is to differentiate the function \((2x-1)^3\). We take \(f(x) = x^3\) and \(g(x) = 2x-1\) and differentiate the composite function \(f(g(x))\). As \[f'(x) = 3x^2 \text{ and } g'(x) = 2,\] we get \[\frac{d}{dx} (2x-1)^3 = 3(2x-1)^2 \cdot 2 = 6(4x^2-4x+1) = 24x^2-24x+6.\]

Function \((2x-1)^3\) and its derivative function.

Example 2.

We need to differentiate the function \(\sin 3x\). Take \(f(x) = \sin x\) and \(g(x) = 3x\), then differentiate the composite function \(f(g(x))\). \[\frac{d}{dx} \sin 3x = \cos 3x \cdot 3 = 3 \cos 3x.\]

Remark. Let \(h\colon \mathbb{R}\to \mathbb{R}, g\colon \mathbb{R}\to \mathbb{R}\) and \(f\colon \mathbb{R}\to \mathbb{R}\). Now \[\frac{d}{dx}f(g(h(x)))=f'(g(h(x)))\frac{d}{dx}g(h(x))=f'(g(h(x)))g'(h(x))h'(x).\] Similarly, one may obtain even more complex rules for composites of multiple functions.

Function \(\sin 3x\) and its derivative function.

Example 3.

Differentiate the function \(\cos^3 2x\). Take \(f(x) = x^3\), \(g(x) = \cos x\) and \(h(x) = 2x\) and differentiate the composite function \(f(g(h(x)))\). \[\begin{aligned}\frac{d}{dx} \cos^3 2x &= 3(\cos 2x)^2 \cdot \frac{d}{dx} \cos 2x \\ &= 3 \cos^2 2x \cdot (-\sin 2x) \cdot 2 \\ &= -6 \sin 2x \cos^2 2x.\end{aligned}\]

Function \(\cos^3 2x\) and its derivative function.
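The derivatives obtained in Examples 1 to 3 can be checked numerically. The following is a minimal sketch, not part of the original material: it compares each chain-rule result with a symmetric difference quotient at a few sample points. The helper names `central_difference` and `max_deviation`, the step size and the sample points are assumptions chosen for illustration.

```python
import math

def central_difference(func, x, h=1e-6):
    """Approximate func'(x) with a symmetric difference quotient."""
    return (func(x + h) - func(x - h)) / (2 * h)

# (function, derivative obtained with the chain rule) for Examples 1-3
examples = [
    (lambda x: (2 * x - 1) ** 3, lambda x: 6 * (2 * x - 1) ** 2),
    (lambda x: math.sin(3 * x), lambda x: 3 * math.cos(3 * x)),
    (lambda x: math.cos(2 * x) ** 3,
     lambda x: -6 * math.sin(2 * x) * math.cos(2 * x) ** 2),
]

def max_deviation(func, derivative, points):
    """Largest gap between the numerical and the chain-rule derivative."""
    return max(abs(central_difference(func, x) - derivative(x)) for x in points)

sample_points = [-1.0, -0.3, 0.0, 0.5, 1.2]  # arbitrary illustration points
deviations = [max_deviation(f, df, sample_points) for f, df in examples]
print(deviations)
```

Each deviation should be tiny compared with the derivative values themselves; a large deviation would indicate a mistake in applying the chain rule.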

Extremal Value Problems

We will discuss the Mean Value Theorem (the intermediate value theorem for differentiable functions) and its connections to extremal value problems.

Definition: Local Maxima and Minima

A function \(f\colon A\to \mathbb{R}\) has a local maximum at the point \(x_0\in A\), if for some \(h\gt 0\) and for all \(x\in A\) such that \(|x-x_0|\lt h\), we have \(f(x)\leq f(x_0)\).

Similarly, a function \(f\colon A\to \mathbb{R}\) has a local minimum at the point \(x_0\in A\), if for some \(h\gt 0\) and for all \(x\in A\) such that \(|x-x_0|\lt h\), we have \(f(x)\geq f(x_0)\).

A local extremum is a local maximum or a local minimum.

Remark. If \(f\) has a local maximum at an interior point \(x_0\) of \(A\) and \(f'(x_0)\) exists, then \[\begin{cases}f'(x_0) & =\lim_{h\to 0^{+}}\frac{f(x_0+h)-f(x_0)}{h} \leq 0 \\ f'(x_0) & =\lim_{h\to 0^{-}}\frac{f(x_0+h)-f(x_0)}{h} \geq 0.\end{cases}\] Hence \(f'(x_0)=0\). The same argument with reversed inequalities applies to a local minimum.

We get:

Theorem 1.

Let \(x_0\in [a,b]\) be a local extremum point of a continuous function \(f\colon [a,b]\to \mathbb{R}\). Then either

- the derivative \(f'(x_0)\) does not exist (this also includes the cases \(x_0=a\) and \(x_0=b\)), or

- \(f'(x_0)=0\).

Example 1.

Let \(f: \mathbb{R} \to \mathbb{R}\) be defined by \[f(x) = x^3 -3x + 1.\] Then \[f'(x) = 3x^2-3\] and the derivative vanishes at the points \(x_0 = -1\) and \(x_0 = 1\), where \(f\) attains its local maximum and local minimum, respectively: \[f'(-1) = 3 \cdot (-1)^2 - 3 = 0 \text{ and } f'(1) = 3 \cdot 1^2 - 3 = 0.\]

Function \(x^3-3x+1\) and its derivative function \(3x^2-3\).

Finding the global extrema

In practice, when we are looking for the extrema of a given function, we need to check three kinds of points:

- the zeros of the derivative

- the endpoints of the domain of definition (interval)

- points where the function is not differentiable

If we know beforehand that the function has a minimum/maximum, then we start off by finding all the possible local extrema (the points described above), evaluate the function at these points and pick the smallest/greatest of these values.

Example 2.

Let us find the smallest and greatest value of the function \(f\colon [0,2]\to \mathbb{R}\), \(f(x)=x^3-6x\). Since the function is continuous on a closed interval, it has a maximum and a minimum. Since the function is differentiable, it is sufficient to examine the endpoints of the interval and the zeros of the derivative that are contained in the interval.

The zeros of the derivative: \(f'(x)=3x^2-6=0 \Leftrightarrow x=\pm \sqrt{2}\). Since \(-\sqrt{2}\not\in [0,2]\), we only need to evaluate the function at three points, \(f(0)=0\), \(f(\sqrt{2})=-4\sqrt{2}\) and \(f(2)=-4\). From these we can see that the smallest value of the function is \(-4\sqrt{2}\) and the greatest value is \(0\).
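The procedure of Example 2 can be sketched in a few lines of code. This is only an illustration under stated assumptions: the zeros of the derivative are entered by hand (they are not solved for by the program), and the variable names are chosen here for readability.

```python
import math

def f(x):
    return x ** 3 - 6 * x

a, b = 0.0, 2.0
# Zeros of f'(x) = 3x^2 - 6, found by hand in the example
critical_points = [-math.sqrt(2), math.sqrt(2)]

# Candidates: the endpoints plus the critical points inside the interval
candidates = [a, b] + [c for c in critical_points if a < c < b]
values = {x: f(x) for x in candidates}

x_min = min(values, key=values.get)
x_max = max(values, key=values.get)
print("minimum:", x_min, values[x_min])
print("maximum:", x_max, values[x_max])
```

The point \(-\sqrt{2}\) is filtered out automatically because it lies outside \([0,2]\), so only three function values are compared, as in the text.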

Next we will formulate a fundamental result for differentiable functions. The basic idea is that the function can change over an interval only if its rate of change is nonzero at some point of the interval.

Theorem 2.

(The Mean Value Theorem, i.e. the Intermediate Value Theorem for Differentiable Functions). Let \(f\colon [a,b]\to \mathbb{R}\) be continuous in the interval \([a,b]\) and differentiable in the interval \((a,b)\). Then \[f'(x_0)=\frac{f(b)-f(a)}{b-a}\] for some \(x_0\in (a,b).\)

Let \(f\) be continuous in the interval \([a,b]\) and differentiable in the interval \((a,b)\). Let us define \[g(x):=f(x)-\frac{f(b)-f(a)}{b-a}(x-a)-f(a).\]

Now \(g(a)=g(b)=0\), and \(g\) is continuous in the interval \([a,b]\) and differentiable in the interval \((a,b)\). According to Rolle's Theorem, there exists \(c\in(a,b)\) such that \(g'(c)=0\). Hence \[f'(c)=g'(c)+\frac{f(b)-f(a)}{b-a}=\frac{f(b)-f(a)}{b-a},\] so the claim holds with \(x_0=c\).

\(\square\)
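The theorem only states that a suitable point exists. For a concrete function it can be located numerically. The sketch below is an illustration under assumptions that are not in the original text: the test function \(f(x)=x^3\) on \([0,2]\) is hypothetical, and the helper `mean_value_point` uses bisection, which additionally requires that \(f'(x)\) minus the slope of the secant changes sign between the endpoints.

```python
def f(x):
    return x ** 3          # hypothetical test function

def f_prime(x):
    return 3 * x ** 2

def mean_value_point(f, f_prime, a, b, tol=1e-12):
    """Bisection on f'(x) - slope; assumes a sign change on [a, b]."""
    slope = (f(b) - f(a)) / (b - a)
    lo, hi = a, b
    g_lo = f_prime(lo) - slope
    if g_lo * (f_prime(hi) - slope) > 0:
        raise ValueError("no sign change of f' - slope at the endpoints")
    while hi - lo > tol:
        mid = (lo + hi) / 2
        g_mid = f_prime(mid) - slope
        if g_lo * g_mid <= 0:
            hi = mid               # the zero lies in [lo, mid]
        else:
            lo, g_lo = mid, g_mid  # the zero lies in [mid, hi]
    return (lo + hi) / 2

x0 = mean_value_point(f, f_prime, 0.0, 2.0)
print(x0, f_prime(x0))
```

Here the secant slope is \((8-0)/2=4\), so the program should return a point close to \(2/\sqrt{3}\), where \(3x^2=4\).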

This result has an important application:

Theorem 3.

Let \(f\colon (a,b)\to \mathbb{R}\) be a differentiable function. Then

- if \(f'(x)\geq 0\) for all \(x\in (a,b)\), then \(f\) is increasing,

- if \(f'(x)\leq 0\) for all \(x\in (a,b)\), then \(f\) is decreasing.

Suppose that \(a \lt x_1 \lt x_2 \lt b\).

Then by Theorem 2 there exists \(x_0\in (x_1,x_2)\) such that \[f'(x_0)=\frac{f(x_2)-f(x_1)}{x_2-x_1}.\]

It follows that \(f(x_2)-f(x_1)=f'(x_0)(x_2-x_1)\).

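Since \(x_2-x_1\gt 0\), the difference \(f(x_2)-f(x_1)\) has the same sign as \(f'(x_0)\). Hence we may conclude that \(f\) is increasing if \(f'\geq 0\) on \((a,b)\) and decreasing if \(f'\leq 0\) on \((a,b)\).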

Example 3.

For the polynomial \(f(x) = \frac{1}{4} x^4-2x^2-7\) the derivative is \[f'(x) = x^3-4x = x(x^2-4) = 0,\] when \(x=0\), \(x=2\) or \(x=-2\). Now we can draw a table:

| | \(x<-2\) | \(-2 \lt x \lt 0\) | \(0 \lt x \lt 2\) | \(x>2\) |
|---|---|---|---|---|
| \(x\) | \(<0\) | \(<0\) | \(>0\) | \(>0\) |
| \(x^2-4\) | \(>0\) | \(<0\) | \(<0\) | \(>0\) |
| \(f'(x)\) | \(<0\) | \(>0\) | \(<0\) | \(>0\) |
| \(f(x)\) | decr. | incr. | decr. | incr. |

Function \(\frac{1}{4} x^4-2x^2-7\).
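The sign table of Example 3 can be reproduced by evaluating \(f'\) at one test point in each interval. This works because \(f'\) is continuous and has no zeros other than \(-2\), \(0\) and \(2\), so its sign cannot change inside an interval. The test points below are arbitrary choices made for this sketch.

```python
def f_prime(x):
    return x ** 3 - 4 * x

# One test point from each interval between the zeros -2, 0, 2
test_points = {"x < -2": -3.0, "-2 < x < 0": -1.0,
               "0 < x < 2": 1.0, "x > 2": 3.0}

behaviour = {}
for interval, x in test_points.items():
    behaviour[interval] = "incr." if f_prime(x) > 0 else "decr."
    print(f"{interval:>12}: f'({x}) = {f_prime(x):6.1f} -> {behaviour[interval]}")
```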

Example 4.

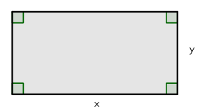

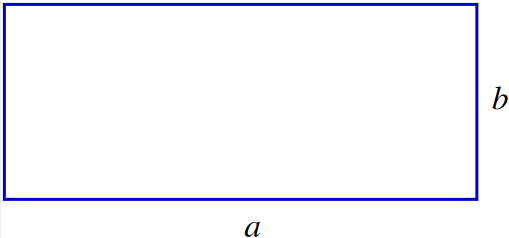

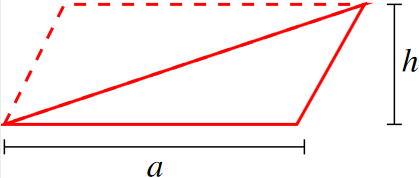

We need to find a rectangle so that its area is \(9\) and it has the least possible perimeter.

Let \(x\ (>0)\) and \(y\ (>0)\) be the sides of the rectangle. Then \(x \cdot y = 9\) and we get \(y=\frac{9}{x}\). Now the perimeter is \[2x+2y = 2x+2 \frac{9}{x} = \frac{2x^2+18}{x}.\] At which point does the function \(f(x) = \frac{2x^2+18}{x}\) attain its minimum value? The function \(f\) is continuous and differentiable when \(x>0\), and using the quotient rule we get \[f'(x) = \frac{4x \cdot x-(2x^2+18) \cdot 1}{x^2} = \frac{2x^2-18}{x^2}.\] Now \(f'(x) = 0\), when \[\begin{aligned}2x^2-18 &= 0 \\ 2x^2 &= 18 \\ x^2 &= 9 \\ x &= \pm 3\end{aligned}\] but we have required that \(x>0\) and are therefore only interested in the case \(x=3\). Let's draw a table:

| | \(0 \lt x \lt 3\) | \(x>3\) |
|---|---|---|
| \(f'(x)\) | \(<0\) | \(>0\) |
| \(f(x)\) | decr. | incr. |

As the function \(f\) is continuous, decreasing for \(0 \lt x \lt 3\) and increasing for \(x>3\), it attains its minimum at the point \(x=3\). Now we calculate the other side of the rectangle: \(y=\frac{9}{x}=\frac{9}{3}=3\).

Thus, the rectangle with the least possible perimeter is actually a square whose sides have length \(3\).

Function \(\frac{2x^2+18}{x}\).
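As a quick plausibility check of Example 4, one can scan a grid of side lengths and pick the one with the smallest perimeter. This is a rough sketch, not a proof: the grid from \(0.5\) to \(10\) with step \(0.1\) and the function name `perimeter` are assumptions made for illustration.

```python
def perimeter(x, area=9.0):
    """Perimeter of a rectangle with one side x and the given area."""
    return 2 * x + 2 * area / x

# Scan the side lengths 0.5, 0.6, ..., 10.0
grid = [k / 10 for k in range(5, 101)]
best_x = min(grid, key=perimeter)
print(best_x, perimeter(best_x))
```

The scan agrees with the calculation above: the smallest perimeter on the grid is found at \(x=3\), where the perimeter equals \(12\).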

Example 5.

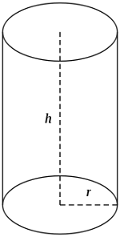

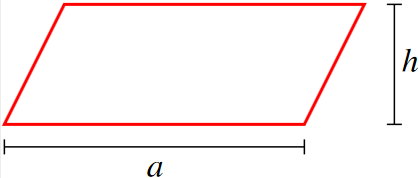

We must make a one litre measure, which is shaped as a right circular cylinder without a lid. The problem is to find the best size of the bottom and the height so that we need the least possible amount of material to make the measure.

Let \(r > 0\) be the radius and \(h > 0\) the height of the cylinder. The volume of the cylinder is \(1\) dm\(^3\) and we can write \(\pi r^2 h = 1\) from which we get \[h = \frac{1}{\pi r^2}.\]

The amount of material needed is the surface area \[A_{\text{bottom}} + A_{\text{side}} = \pi r^2 + 2 \pi r h = \pi r^2 + \frac{2 \pi r}{\pi r^2} = \pi r^2 + \frac{2}{r}.\]

Let function \(f: (0, \infty) \to \mathbb{R}\) be defined by \[f(r) = \pi r^2 + \frac{2}{r}.\] We must find the minimum value for function \(f\), which is continuous and differentiable, when \(r>0\). Using the reciprocal rule, we get \[f'(r) = 2\pi r -2 \cdot \frac{1}{r^2} = \frac{2\pi r^3 - 2}{r^2}.\] Now \(f'(r) = 0\), when \[\begin{aligned}2\pi r^3 - 2 &= 0 \\ 2\pi r^3 &= 2 \\ r^3 &= \frac{1}{\pi} \\ r &= \frac{1}{\sqrt[3]{\pi}}.\end{aligned}\]

Let's draw a table:

| | \(0 \lt r \lt \frac{1}{\sqrt[3]{\pi}}\) | \(r>\frac{1}{\sqrt[3]{\pi}}\) |
|---|---|---|
| \(f'(r)\) | \(<0\) | \(>0\) |
| \(f(r)\) | decr. | incr. |

As the function \(f\) is continuous, we now know that it gets its minimum value at the point \(r= \frac{1}{\sqrt[3]{\pi}} \approx 0.683\). Then \[h = \frac{1}{\pi r^2} = \frac{1}{\pi \left(\frac{1}{\sqrt[3]{\pi}}\right)^2} = \frac{1}{\frac{\pi}{\pi^{2/3}}} = \frac{1}{\sqrt[3]{\pi}} \approx 0.683.\]

This means that the least material is needed for a measure that is approximately \(2 \cdot 0.683\) dm \( = 1.366\) dm \( \approx 13.7\) cm in diameter and \(0.683\) dm \( \approx 6.8\) cm high.

Function \(\pi r^2 + \frac{2}{r}\).
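The result of Example 5 can be verified numerically. The sketch below evaluates the optimal radius and height from the formulas derived above and checks that slightly smaller or larger radii need more material. The names `surface_area`, `r_opt` and `h_opt`, and the choice of a 10 % perturbation, are assumptions for illustration.

```python
import math

def surface_area(r):
    """Bottom plus side area of an open cylinder of volume 1 dm^3."""
    return math.pi * r ** 2 + 2 / r

r_opt = math.pi ** (-1 / 3)          # zero of f'(r) found above
h_opt = 1 / (math.pi * r_opt ** 2)   # height from the volume constraint

print(round(r_opt, 3), round(h_opt, 3))

# Nearby radii should need more material than the optimal one
for r in (0.9 * r_opt, 1.1 * r_opt):
    assert surface_area(r) > surface_area(r_opt)
```

Note that the optimal height equals the optimal radius, which matches the two identical approximations \(0.683\) in the text.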

5. Taylor polynomial

Taylor polynomial

Example

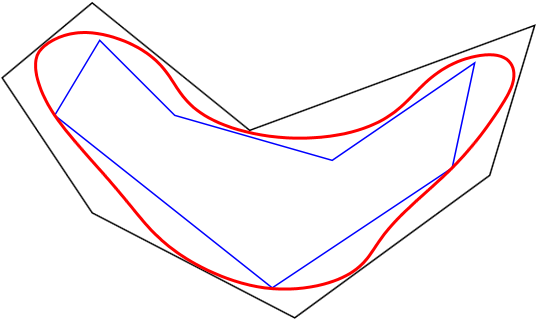

Compare the graph of \(\sin x\) (red) with the graphs of the polynomials \[ x-\frac{x^3}{3!}+\frac{x^5}{5!}-\dots + \frac{(-1)^nx^{2n+1}}{(2n+1)!} \] (blue) for \(n=1,2,3,\dots,12\).

\(\displaystyle\sum_{k=0}^{n}\frac{(-1)^{k}x^{2k+1}}{(2k+1)!}\)

Definition: Taylor polynomial

Let \(f\) be \(n\) times differentiable at the point \(x_{0}\). Then the Taylor polynomial \begin{align} P_n(x)&=P_n(x;x_0)\\ &=f(x_0)+f'(x_0)(x-x_0)+\frac{f''(x_0)}{2!}(x-x_0)^2+ \dots +\frac{f^{(n)}(x_0)}{n!}(x-x_0)^n\\ &=\sum_{k=0}^n\frac{f^{(k)}(x_0)}{k!}(x-x_0)^k \end{align} is the best polynomial approximation of degree at most \(n\) (with respect to the derivatives) for the function \(f\) close to the point \(x_0\).

Note. The special case \(x_0=0\) is often called the Maclaurin polynomial.

If \(f\) is \(n\) times differentiable at \(x_0\), then the Taylor polynomial has the same derivatives at \(x_0\) as the function \(f\), up to the order \(n\) (of the derivative).

The reason (case \(x_0=0\)): Let \[ P_n(x)=c_0+c_1x+c_2x^2+c_3x^3+\dots +c_nx^n, \] so that \begin{align} P_n'(x)&=c_1+2c_2x+3c_3x^2+\dots +nc_nx^{n-1}, \\ P_n''(x)&=2c_2+3\cdot 2 c_3x+\dots +n(n-1)c_nx^{n-2}, \\ P_n'''(x)&=3\cdot 2 c_3+\dots +n(n-1)(n-2)c_nx^{n-3}, \\ &\;\;\vdots \\ P_n^{(k)}(x)&=k!c_k + \text{terms containing } x, \\ &\;\;\vdots \\ P_n^{(n)}(x)&=n!c_n, \\ P_n^{(n+1)}(x)&=0. \end{align}

In this way we obtain the coefficients one by one: \begin{align} c_0= P_n(0)=f(0) &\Rightarrow c_0=f(0) \\ c_1=P_n'(0)=f'(0) &\Rightarrow c_1=f'(0) \\ 2c_2=P_n''(0)=f''(0) &\Rightarrow c_2=\frac{1}{2}f''(0) \\ \vdots & \\ k!c_k=P_n^{(k)}(0)=f^{(k)}(0) &\Rightarrow c_k=\frac{1}{k!}f^{(k)}(0) \\ \vdots &\\ n!c_n=P_n^{(n)}(0)=f^{(n)}(0) &\Rightarrow c_n=\frac{1}{n!}f^{(n)}(0). \end{align} Starting from index \(k=n+1\) we cannot pose any new conditions, since \(P_n^{(n+1)}(x)=0\).
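The coefficient formula \(c_k=f^{(k)}(x_0)/k!\) translates directly into code. The following sketch builds a Taylor polynomial from a list of derivative values; the helper name `taylor_polynomial` and the choice of \(\sin x\) with \(n=7\) as a test case are assumptions made for illustration.

```python
import math

def taylor_polynomial(derivatives_at_x0, x0=0.0):
    """Return P_n as a function, given [f(x0), f'(x0), ..., f^(n)(x0)]."""
    coefficients = [d / math.factorial(k)
                    for k, d in enumerate(derivatives_at_x0)]

    def P(x):
        return sum(c * (x - x0) ** k for k, c in enumerate(coefficients))

    return P

# The derivatives of sin at 0 repeat with period four: 0, 1, 0, -1
sin_derivatives = [[0, 1, 0, -1][k % 4] for k in range(8)]   # n = 7
P7 = taylor_polynomial(sin_derivatives)

print(P7(0.5), math.sin(0.5))
```

The two printed values should agree to several decimal places, since \(x=0.5\) is close to the expansion point \(x_0=0\).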

Taylor's Formula

If the derivative \(f^{(n+1)}\) exists and is continuous on some interval \(I=\, ]x_0-\delta,x_0+\delta[\), then \(f(x)=P_n(x;x_0)+E_n(x)\) for \(x\in I\), and the error term \(E_n(x)\) satisfies \[ E_n(x)=\frac{f^{(n+1)}(c)}{(n+1)!}(x-x_0)^{n+1} \] at some point \(c\in I\) between \(x_0\) and \(x\). If there is a constant \(M\) (independent of \(n\)) such that \(|f^{(n+1)}(x)|\le M\) for all \(x\in I\), then \[ |E_n(x)|\le \frac{M}{(n+1)!}|x-x_0|^{n+1} \to 0 \] as \(n\to\infty\).

The proof is omitted here (it can be done by mathematical induction or by using integrals).

Examples of Maclaurin polynomial approximations: \begin{align} \frac{1}{1-x} &\approx 1+x+x^2+\dots +x^n =\sum_{k=0}^{n}x^k\\ e^x&\approx 1+x+\frac{1}{2!}x^2+\frac{1}{3!}x^3+\dots + \frac{1}{n!}x^n =\sum_{k=0}^{n}\frac{x^k}{k!}\\ \ln (1+x)&\approx x-\frac{1}{2}x^2+\frac{1}{3}x^3-\dots + \frac{(-1)^{n-1}}{n}x^n =\sum_{k=1}^{n}\frac{(-1)^{k-1}}{k}x^k\\ \sin x &\approx x-\frac{1}{3!}x^3+\frac{1}{5!}x^5-\dots +\frac{(-1)^n}{(2n+1)!}x^{2n+1} =\sum_{k=0}^{n}\frac{(-1)^k}{(2k+1)!}x^{2k+1}\\ \cos x &\approx 1-\frac{1}{2!}x^2+\frac{1}{4!}x^4-\dots +\frac{(-1)^n}{(2n)!}x^{2n} =\sum_{k=0}^{n}\frac{(-1)^k}{(2k)!}x^{2k} \end{align}

Example

Which polynomial \(P_n(x)\) approximates the function \(\sin x\) in the interval \([-\pi,\pi]\) so that the absolute value of the error is less than \(10^{-6}\)?

We use Taylor's Formula for \(f(x)=\sin x\) at \(x_0=0\). Then \(|f^{(n+1)}(c)|\le 1\) independently of \(n\) and the point \(c\). Also, in the interval in question, we have \(|x-x_0|=|x|\le \pi\). The requirement will be satisfied (at least) if \[ |E_n(x)|\le \frac{1}{(n+1)!}\pi^{n+1} < 10^{-6}. \] This inequality must be solved by trying different values of \(n\); it is true for \(n\ge 16\).

The required approximation is achieved with \(P_{16}(x)\), which for sine is the same as \(P_{15}(x)\), because the even-degree terms of the Maclaurin polynomial of \(\sin x\) vanish.

Check from graphs: \(P_{13}(x)\) is not enough, so the theoretical bound is sharp!
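The trial-and-error search for \(n\) is easy to automate. The sketch below finds the smallest \(n\) for which the bound \(\pi^{n+1}/(n+1)!\) drops below \(10^{-6}\) and then compares the actual errors of \(P_{13}\) and \(P_{15}\) at the endpoint \(x=\pi\). The helper names `error_bound` and `sin_maclaurin` are assumptions made for illustration, and only the endpoint is examined rather than the whole interval.

```python
import math

def error_bound(n, radius=math.pi, M=1.0):
    """Upper bound M * radius^(n+1) / (n+1)! for |E_n(x)| on [-radius, radius]."""
    return M * radius ** (n + 1) / math.factorial(n + 1)

n = 0
while error_bound(n) >= 1e-6:
    n += 1
print(n, error_bound(n))

def sin_maclaurin(x, degree):
    """Maclaurin polynomial of sin with all terms up to the given degree."""
    return sum((-1) ** k * x ** (2 * k + 1) / math.factorial(2 * k + 1)
               for k in range((degree - 1) // 2 + 1))

# Actual errors at the endpoint x = pi
error_13 = abs(sin_maclaurin(math.pi, 13) - math.sin(math.pi))
error_15 = abs(sin_maclaurin(math.pi, 15) - math.sin(math.pi))
print(error_13, error_15)
```

The loop stops at \(n=16\), in agreement with the text, and the endpoint errors show that \(P_{13}\) misses the tolerance while \(P_{15}\) meets it.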

Taylor polynomial and extreme values

If \(f'(x_0)=0\), then also some higher derivatives may be zero: \[ f'(x_0)=f''(x_0)= \dots = f^{(n)}(x_0) =0,\ f^{(n+1)}(x_0) \neq 0. \] Then the behaviour of \(f\) near \(x=x_0\) is determined by the leading term (after the constant term \(f(x_0)\)) \[ \frac{f^{(n+1)}(x_0)}{(n+1)!}(x-x_0)^{n+1} \] of the Taylor polynomial.

This leads to the following result:

Extreme values

- If \(n\) is even, then \(x_0\) is not an extreme point of \(f\).

- If \(n\) is odd and \(f^{(n+1)}(x_0)>0\), then \(f\) has a local minimum at \(x_0\).

- If \(n\) is odd and \(f^{(n+1)}(x_0)<0\), then \(f\) has a local maximum at \(x_0\).
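The three cases above can be turned into a small classification routine. The sketch below takes the values \(f''(x_0), f'''(x_0), \dots\) at a point where \(f'(x_0)=0\) and looks for the first nonzero one. The function name `classify_critical_point`, the test functions and the exact comparison with zero (which presumes exactly known derivative values) are assumptions made for illustration.

```python
def classify_critical_point(higher_derivatives):
    """Classify x0 from [f''(x0), f'''(x0), ...], assuming f'(x0) = 0.

    The first nonzero entry is f^(n+1)(x0) in the notation above.
    """
    for order, value in enumerate(higher_derivatives, start=2):
        if value != 0:
            n = order - 1
            if n % 2 == 0:
                return "no extremum"
            return "local minimum" if value > 0 else "local maximum"
    return "undetermined"   # every supplied derivative was zero

# Hypothetical test functions at x0 = 0
print(classify_critical_point([0, 6]))        # f(x) = x^3
print(classify_critical_point([0, 0, 24]))    # f(x) = x^4
print(classify_critical_point([-2]))          # f(x) = -x^2
```

For \(x^3\) the first nonzero derivative has odd order, so there is no extremum; for \(x^4\) and \(-x^2\) the order is even and the sign decides between a minimum and a maximum.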

Newton's method