Differential and Integral Calculus

| Site: | MyCourses |

| Course: | MS-A0109 - Differential- och integralkalkyl 1, Föreläsning, 6.9.2022-19.10.2022 |

| Book: | Differential and Integral Calculus |

| Printed by: | Guest user |

| Date: | Wednesday, 19 February 2025, 1:03 AM |

1. Sequences

Basics of sequences

This section contains the most important definitions about sequences. Through these definitions the general notion of sequences will be explained, but then restricted to real number sequences.

Note. Characteristics of the set $\mathbb{N}$ give certain characteristics to the sequence. Because $\mathbb{N}$ is ordered, the terms of the sequence are ordered.

Definition: Terms and Indices

A sequence can be denoted as $(a_n)_n = (a_1, a_2, a_3, \ldots)$ instead of $a(n)$. The numbers $a_1, a_2, a_3, \ldots$ are called the terms of the sequence.

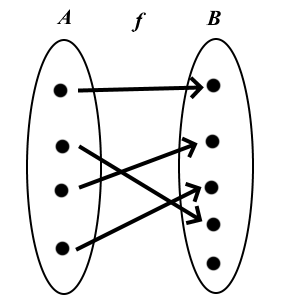

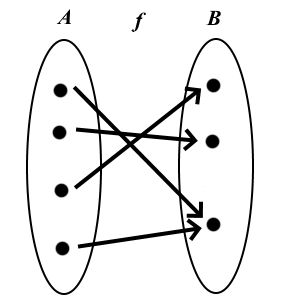

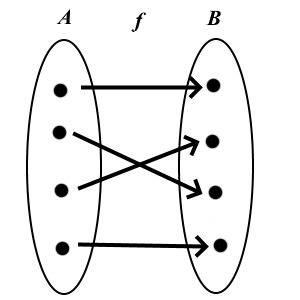

Because of the mapping $n \mapsto a_n$ we can assign a unique number $n \in \mathbb{N}$ to each term. We write this number as a subscript and define it as the index; it follows that we can identify any term of the sequence by its index.

A few easy examples

Example 1: The sequence of natural numbers

The sequence $(a_n)_n$ defined by $a_n = n$ is called the sequence of natural numbers. Its first few terms are: $a_1 = 1,\ a_2 = 2,\ a_3 = 3, \ldots$

This special sequence has the property that every term is the same as its index.

Example 2: The sequence of triangular numbers

Triangular numbers get their name due to the following geometric visualization: Stacking coins to form a triangular shape gives the following diagram:

To the first coin in the first layer we add two coins in a second layer to form the second picture $T_2$. In turn, adding three coins to $T_2$ forms $T_3$. From a mathematical point of view, this sequence is the result of summing natural numbers. To calculate the 10th triangular number we need to add the first 10 natural numbers:

$$T_{10} = 1 + 2 + 3 + \cdots + 10 = 55.$$

In general form the sequence is defined as:

$$T_n = 1 + 2 + 3 + \cdots + n = \sum_{k=1}^{n} k.$$

This motivates the following definition:

Example 3: Sequence of square numbers

The sequence of square numbers $(q_n)_n$ is defined by: $q_n = n^2$. The terms of this sequence can also be illustrated by the addition of coins.

Interestingly, the sum of two consecutive triangular numbers is a square number. So, for example, we have: $1 + 3 = 4$ and $3 + 6 = 9$. In general, writing $T_n = 1 + 2 + \cdots + n$ for the $n$th triangular number, this gives the relationship:

$$q_n = T_{n-1} + T_n \quad \text{for } n \ge 2.$$

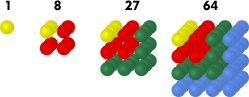
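The relationship between triangular and square numbers can be checked numerically. The following Python sketch is only an illustration; the function names `triangular` and `square` are chosen here and do not come from the course text.

```python
def triangular(n: int) -> int:
    """Return the n-th triangular number 1 + 2 + ... + n."""
    return sum(range(1, n + 1))


def square(n: int) -> int:
    """Return the n-th square number n^2."""
    return n * n


# First ten triangular numbers.
first_triangular = [triangular(n) for n in range(1, 11)]

# Sums of two consecutive triangular numbers, for n = 2, ..., 10.
pair_sums = [triangular(n - 1) + triangular(n) for n in range(2, 11)]

print(first_triangular)
print(pair_sums)
```

Each entry of `pair_sums` can be compared with `square(n)` for the same `n`; a check over finitely many terms is of course no substitute for the general argument.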

Example 4: Sequence of cube numbers

Analogously to the sequence of square numbers, we give the definition of cube numbers as $a_n = n^3$. The first terms of the sequence are: $(1, 8, 27, 64, 125, \ldots)$.

Example 5.

Example 6.

Given a sequence $(a_n)_n$, let $(a'_n)_n$ be its 1st difference sequence. A term of $(a'_n)_n$ has the general form

$$a'_n = a_{n+1} - a_n.$$

Some important sequences

There are a number of sequences that form the basis of many ideas in mathematics and that are also used in other areas (e.g. physics, biology, or financial calculations) to model real situations. We will consider three of these sequences: the arithmetic sequence, the geometric sequence, and the Fibonacci sequence, i.e. the sequence of Fibonacci numbers.

The arithmetic sequence

There are many definitions of the arithmetic sequence:

Definition A: Arithmetic sequence

A sequence $(a_n)_n$ is called an arithmetic sequence when the difference $d \in \mathbb{R}$ between two consecutive terms is constant, thus:

$$a_{n+1} - a_n = d \quad \text{for all } n.$$

Note: The explicit rule of formation follows directly from definition A:

$$a_n = a_1 + (n-1)\,d.$$

For the $n$th term of an arithmetic sequence we also have the recursive formation rule:

$$a_{n+1} = a_n + d.$$

Definition B: Arithmetic sequence

A non-constant sequence $(a_n)_n$ is called an arithmetic sequence (1st order) when its 1st difference sequence is a sequence of constant value.

This rule of formation gives the arithmetic sequence its name: The middle term of any three consecutive terms is the arithmetic mean of the other two, for example:

$$a_2 = \frac{a_1 + a_3}{2}.$$

Example 1.

The sequence of natural numbers $(a_n)_n = (1, 2, 3, \ldots)$ is an arithmetic sequence, because the difference, $d$, between two consecutive terms is always given as $d = 1$.
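The explicit rule $a_n = a_1 + (n-1)d$ and the recursive rule $a_{n+1} = a_n + d$ describe the same sequence. A minimal Python sketch of this, with function names and test values that are assumptions made for the illustration:

```python
def arithmetic_explicit(a1, d, n):
    """n-th term from the explicit rule a_n = a_1 + (n - 1) * d."""
    return a1 + (n - 1) * d


def arithmetic_recursive(a1, d, n):
    """n-th term from the recursive rule a_{n+1} = a_n + d."""
    term = a1
    for _ in range(n - 1):
        term += d
    return term


def is_arithmetic(terms):
    """True if all differences of consecutive terms are equal."""
    diffs = [b - a for a, b in zip(terms, terms[1:])]
    return all(x == diffs[0] for x in diffs)


print(arithmetic_explicit(1, 1, 10))   # natural numbers: a_1 = 1, d = 1
print(is_arithmetic([1, 2, 3, 4, 5]))  # natural numbers
print(is_arithmetic([1, 3, 6, 10]))    # triangular numbers
```

The triangular numbers are a useful contrast: their differences $2, 3, 4, \ldots$ are not constant, so they do not form an arithmetic sequence of 1st order.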

The geometric sequence

The geometric sequence has multiple definitions:

Definition: Geometric sequence

A sequence $(a_n)_n$ is called a geometric sequence when the ratio of any two consecutive terms is always constant $q \in \mathbb{R}$, thus

$$\frac{a_{n+1}}{a_n} = q \quad \text{for all } n.$$

Note. The recursive relationship $a_{n+1} = q \cdot a_n$ of the terms of the geometric sequence and the explicit formula $a_n = a_1 \cdot q^{n-1}$ for the calculation of the $n$th term of a geometric sequence follow directly from the definition.

Again the name and the rule of formation of this sequence are connected: Here, the middle term of three consecutive terms is the geometric mean of the other two (for positive terms), e.g.:

$$a_2 = \sqrt{a_1 \cdot a_3}.$$

Example 2.

Let $a$ and $q$ be fixed positive numbers. The sequence $(a_n)_n$ with $a_n = a q^{n-1}$, i.e.

$$(a_n)_n = (a, aq, aq^2, aq^3, \ldots),$$

is a geometric sequence. If $q \ge 1$ the sequence is monotonically increasing. If $q < 1$ it is strictly decreasing. The corresponding range $\{a, aq, aq^2, \ldots\}$ is finite in the case $q = 1$ (namely, a singleton), otherwise it is infinite.
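The defining properties of a geometric sequence can be illustrated with a few terms. In the following Python sketch the values $a = 3$ and $q = 1/2$ and the helper name `geometric_terms` are arbitrary choices for the example, not part of the text.

```python
import math


def geometric_terms(a, q, count):
    """First `count` terms a, a*q, a*q^2, ... of a geometric sequence."""
    return [a * q ** (n - 1) for n in range(1, count + 1)]


terms = geometric_terms(3.0, 0.5, 8)

# Ratio of consecutive terms: constant and equal to q.
ratios = [b / a for a, b in zip(terms, terms[1:])]

# Each inner term is the geometric mean of its two neighbours.
middle_is_geometric_mean = all(
    math.isclose(terms[i], math.sqrt(terms[i - 1] * terms[i + 1]))
    for i in range(1, len(terms) - 1)
)

# For 0 < q < 1 and a > 0 the sequence is strictly decreasing.
strictly_decreasing = all(b < a for a, b in zip(terms, terms[1:]))

print(terms)
print(ratios)
print(middle_is_geometric_mean, strictly_decreasing)
```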

The Fibonacci sequence

The Fibonacci sequence is famous because it plays a role in many biological processes, for instance in plant growth, and is frequently found in nature. The recursive definition is:

Definition: Fibonacci sequence

Let $a_1 = a_2 = 1$ and let

$$a_n = a_{n-2} + a_{n-1}$$

for $n \ge 3$. The sequence $(a_n)_n$ is then called the Fibonacci sequence. The terms of the sequence are called the Fibonacci numbers.

The sequence is named after the Italian mathematician Leonardo of Pisa (ca. 1200 AD), also known as Fibonacci (son of Bonacci). He considered the size of a rabbit population and discovered the number sequence:

$$(1, 1, 2, 3, 5, 8, 13, 21, 34, 55, \ldots)$$

Example 3.

The structure of sunflower heads can be described by a system of two sets of spirals, which radiate out symmetrically from the centre in opposite directions; there are 55 spirals which run clockwise and 34 which run counter-clockwise.

Pineapples behave very similarly. There we have 21 spirals running in one direction and 34 running in the other. Cauliflower, cacti, and fir cones are also constructed in this manner.
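The recursive definition of the Fibonacci numbers translates directly into a short program. The sketch below assumes that the sequence starts with the two terms $1, 1$; the function name `fibonacci` is chosen for this illustration.

```python
def fibonacci(count):
    """Return the first `count` Fibonacci numbers, starting 1, 1."""
    terms = [1, 1]
    while len(terms) < count:
        # Each new term is the sum of the two preceding terms.
        terms.append(terms[-2] + terms[-1])
    return terms[:count]


print(fibonacci(10))
```

The spiral counts mentioned above, 21, 34 and 55, appear as three consecutive terms in this list.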

Convergence, divergence and limits

The following chapter deals with the convergence of sequences. We will first introduce the idea of zero sequences. After that we will define the concept of general convergence.

Preliminary remark: Absolute value in $\mathbb{R}$

The absolute value function $x \mapsto |x|$ is fundamental in the study of convergence of real number sequences. Therefore we summarise again some of the main characteristics of the absolute value function:

Graph of the absolute value function

Theorem: Calculation Rule for the Absolute Value

Parts 1.-3. Results follow directly from the definition and by dividing it up into separate cases of the different signs of $x$ and $y$.

Part 4. Here we divide the triangle inequality $|x + y| \le |x| + |y|$ into different cases.

Case 1.

First let $x, y \ge 0$. Then it follows that

$$|x + y| = x + y = |x| + |y|$$

and the desired inequality is shown.

Case 2.

Next let $x, y < 0$. Then

$$|x + y| = -(x + y) = (-x) + (-y) = |x| + |y|.$$

Case 3.

Finally we consider the case $x \ge 0$ and $y < 0$. Here we have two subcases:

For $x \ge -y$ we have $x + y \ge 0$ and thus $|x + y| = x + y$ from the definition of absolute value. Because $y < 0$ then $y < -y$ and therefore also $x + y < x - y$. Overall we have:

$$|x + y| = x + y < x - y = |x| + |y|.$$

For $x < -y$ then $x + y < 0$. We have $|x + y| = -(x + y) = -x - y$. Because $x \ge 0$, we have $-x \le x$ and thus $-x - y \le x - y$. Overall we have:

$$|x + y| = -x - y \le x - y = |x| + |y|.$$

Case 4.

The case $x < 0$ and $y \ge 0$ is proved analogously to case 3, with $x$ and $y$ exchanged.

Zero sequences

Definition: Zero sequence

A sequence $(a_n)_n$ is called a zero sequence, if for every $\varepsilon > 0$ there exists an index $n_0 \in \mathbb{N}$ such that

$$|a_n| < \varepsilon$$

for every $n \ge n_0$. In this case we also say that the sequence converges to zero.

Informally: We have a zero sequence, if the terms of the sequence with high enough indices are arbitrarily close to zero.

Example 1.

The sequence $(a_n)_n$ defined by $a_n = \frac{1}{n}$, i.e. $(a_n)_n = \left(1, \frac{1}{2}, \frac{1}{3}, \ldots\right)$, is called the harmonic sequence. Clearly, it is positive for all $n \in \mathbb{N}$, however as $n$ increases the absolute value of each term decreases, getting closer and closer to zero.

Take for example any $\varepsilon > 0$; then choosing an index $n_0 > \frac{1}{\varepsilon}$, it follows that $a_n = \frac{1}{n} \le \frac{1}{n_0} < \varepsilon$ for all $n \ge n_0$.

The harmonic sequence converges to zero
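For the harmonic sequence $a_n = 1/n$ a suitable index $n_0$ can be computed explicitly for any given $\varepsilon > 0$. The following Python sketch uses exact fractions to avoid rounding issues; the function name `harmonic_index` and the value $\varepsilon = 1/1000$ are assumptions made for the example.

```python
import math
from fractions import Fraction


def harmonic_index(eps):
    """Smallest index n0 such that 1/n < eps for all n >= n0."""
    # 1/n < eps is equivalent to n > 1/eps, so take the next integer above 1/eps.
    return math.floor(1 / eps) + 1


eps = Fraction(1, 1000)
n0 = harmonic_index(eps)
print(n0)

# Spot check of the definition on a finite range of indices.
print(all(Fraction(1, n) < eps for n in range(n0, n0 + 1000)))
```

A finite spot check like this cannot replace the proof, but it shows how $n_0$ depends on $\varepsilon$: the smaller $\varepsilon$ is, the larger $n_0$ has to be.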

Example 2.

Consider the sequence

Let $\varepsilon > 0$. We then obtain an index $n_0$ such that $|a_n| < \varepsilon$ for all terms $a_n$ where $n \ge n_0$.

Note. To check whether a sequence is a zero sequence, you must choose an (arbitrary) $\varepsilon \in \mathbb{R}$ where $\varepsilon > 0$. Then search for an index $n_0$, after which all terms $|a_n|$ are smaller than said $\varepsilon$.

Example 3.

We consider a sequence $(a_n)_n$ whose defining formula contains the factor $(-1)^n$. Because of this factor, two consecutive terms have different signs; we call a sequence whose signs change in this way an alternating sequence.

We want to show that this sequence is a zero sequence. According to the definition we have to show that for every $\varepsilon > 0$ there exists $n_0 \in \mathbb{N}$, such that we have the inequality:

$$|a_n| < \varepsilon$$

for every term $a_n$ where $n \ge n_0$.

Theorem: Characteristics of Zero sequences

Parts 1 and 2. If $(a_n)_n$ is a zero sequence, then according to the definition there is an index $n_0 \in \mathbb{N}$, such that $|a_n| < \varepsilon$ for every $n \ge n_0$ and an arbitrary $\varepsilon > 0$. But then we have $\bigl|\,|a_n|\,\bigr| = |{-a_n}| = |a_n| < \varepsilon$; this proves parts 1 and 2 are correct.

Part 3. If $c = 0$, then the result is trivial. Let $c \ne 0$ and choose $n_0$ such that

$$|a_n| < \frac{\varepsilon}{|c|}$$

for all $n \ge n_0$.

Rearranging we get:

$$|c\,a_n| = |c|\,|a_n| < \varepsilon.$$

Part 4.

Because $(a_n)_n$ is a zero sequence, by the definition there is an $n_0$ with $|a_n| < \frac{\varepsilon}{2}$ for all $n \ge n_0$. Analogously, for the zero sequence $(b_n)_n$ there is an $m_0$ with $|b_n| < \frac{\varepsilon}{2}$ for all $n \ge m_0$.

Then for all $n \ge \max\{n_0, m_0\}$ it follows (using the triangle inequality) that:

$$|a_n + b_n| \le |a_n| + |b_n| < \frac{\varepsilon}{2} + \frac{\varepsilon}{2} = \varepsilon.$$

Convergence, divergence

The concept of zero sequences can be expanded to give us the convergence of general sequences:

Example 4.

We consider the sequence  where

where

By plugging in large values of

By plugging in large values of  , we can see that for

, we can see that for

and therefore we can postulate that the limit is

and therefore we can postulate that the limit is  .

.

For a rigorous proof, we show that for every $\varepsilon > 0$ there exists an index $n_0 \in \mathbb{N}$, such that for every term $a_n$ with $n \ge n_0$ the following relationship holds:

$$|a_n - a| < \varepsilon,$$

where $a$ denotes the postulated limit.

Firstly we estimate the inequality:

Now, let  be an arbitrary constant. We then choose the index

be an arbitrary constant. We then choose the index  , such that

, such that

Finally from the above inequality we have:

Thus we have proven the claim and so by definition $a$ is the limit of the sequence.

If a sequence is convergent, then there is exactly one number which is the limit. This characteristic is called the uniqueness of convergence.

Assume $a \ne b$; choose $\varepsilon > 0$ with $\varepsilon < \frac{1}{2}|a - b|$. Then in particular $[a-\varepsilon, a+\varepsilon] \cap [b-\varepsilon, b+\varepsilon] = \emptyset$.

Because $(a_n)_n$ converges to $a$, there is, according to the definition of convergence, an index $n_0 \in \mathbb{N}$ with $|a_n - a| < \varepsilon$ for $n \ge n_0$. Furthermore, because $(a_n)_n$ converges to $b$ there is also an $m_0 \in \mathbb{N}$ with $|a_n - b| < \varepsilon$ for $n \ge m_0$. For $n \ge \max\{n_0, m_0\}$ we have:

$$|a - b| = |(a - a_n) + (a_n - b)| \le |a_n - a| + |a_n - b| < 2\varepsilon < |a - b|.$$

Consequently we have obtained $|a - b| < |a - b|$, which is a contradiction as no number is smaller than itself. Therefore the assumption must be wrong, so $a = b$.

Definition: Divergent, Limit

If, for a sequence $(a_n)_n$, there exists an $a \in \mathbb{R}$ to which the sequence converges, then the sequence is called convergent and $a$ is called the limit of the sequence, otherwise it is called divergent.

Notation. That $(a_n)_n$ is convergent to $a$ is also written:

$$a_n \to a \quad (n \to \infty) \qquad \text{or} \qquad \lim_{n\to\infty} a_n = a.$$

Such notation is allowed, as the limit of a sequence is always unique by the above Theorem (provided it exists).

Rules for convergent sequences

Theorem: Rules

Let $(a_n)_n$ and $(b_n)_n$ be sequences with $\lim_{n\to\infty} a_n = a$ and $\lim_{n\to\infty} b_n = b$. Then for $\lambda, \mu \in \mathbb{R}$ it follows that:

1. $\lim_{n\to\infty} (\lambda a_n + \mu b_n) = \lambda a + \mu b$,
2. $\lim_{n\to\infty} (a_n b_n) = a b$.

Informally: Sums, differences and products of convergent sequences are convergent.

Part 1. Let $\varepsilon > 0$. We must show that for all $n \ge n_0$ it follows that:

$$|\lambda a_n + \mu b_n - (\lambda a + \mu b)| < \varepsilon.$$

The left hand side we estimate using:

$$|\lambda a_n + \mu b_n - \lambda a - \mu b| = |\lambda (a_n - a) + \mu (b_n - b)| \le |\lambda|\,|a_n - a| + |\mu|\,|b_n - b|.$$

Because $(a_n)_n$ and $(b_n)_n$ converge, for each given $\varepsilon > 0$ it holds true that:

$$|a_n - a| < \varepsilon \quad \text{and} \quad |b_n - b| < \varepsilon \quad \text{for all } n \ge n_0.$$

Therefore

$$|\lambda|\,|a_n - a| + |\mu|\,|b_n - b| \le (|\lambda| + |\mu|)\,\varepsilon$$

for all numbers $n \ge n_0$. Therefore the sequence

$$\bigl(\lambda (a_n - a) + \mu (b_n - b)\bigr)_n$$

is a zero sequence and the desired inequality is shown.

Part 2. Let $\varepsilon > 0$. We have to show that for all $n \ge n_0$

$$|a_n b_n - a b| < \varepsilon.$$

Furthermore an estimation of the left hand side follows:

$$|a_n b_n - a b| = |a_n b_n - a b_n + a b_n - a b| \le |b_n|\,|a_n - a| + |a|\,|b_n - b|.$$

We choose a number $B > 0$, such that $|b_n| \le B$ for all $n$ and $|a| \le B$. Such a value of $B$ exists by the Theorem of convergent sequences being bounded. We can then use the estimation:

$$|a_n b_n - a b| \le B\,|a_n - a| + B\,|b_n - b|.$$

For all $n \ge n_0$ we have $|a_n - a| < \frac{\varepsilon}{2B}$ and $|b_n - b| < \frac{\varepsilon}{2B}$, and, putting everything together, the desired inequality is shown.
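The rules for sums and products of convergent sequences can be observed numerically. The sequences $a_n = 1 + 1/n$ and $b_n = 2 - 1/n^2$, with limits $1$ and $2$, and the factors $\lambda = 3$, $\mu = -2$ in the following Python sketch are arbitrary choices for this illustration.

```python
def a(n):
    """Example sequence with limit 1."""
    return 1 + 1 / n


def b(n):
    """Example sequence with limit 2."""
    return 2 - 1 / n**2


lam, mu = 3.0, -2.0
limit_a, limit_b = 1.0, 2.0


def linear_error(n):
    """Distance of lam*a_n + mu*b_n from lam*a + mu*b."""
    return abs(lam * a(n) + mu * b(n) - (lam * limit_a + mu * limit_b))


def product_error(n):
    """Distance of a_n*b_n from a*b."""
    return abs(a(n) * b(n) - limit_a * limit_b)


for n in (10, 1000, 100000):
    print(n, linear_error(n), product_error(n))
```

Both error columns shrink as $n$ grows, in line with the estimates used in the proof.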


2. Series


Convergence

If the sequence of partial sums $(s_n)$, $s_n = a_1 + a_2 + \cdots + a_n$, has a limit $s \in \mathbb{R}$, then the series of the sequence $(a_k)$ converges and its sum is $s$. This is denoted by

$$a_1 + a_2 + \cdots = \sum_{k=1}^{\infty} a_k = \lim_{n\to\infty} \sum_{k=1}^{n} a_k = s.$$

Divergence of a series

A series that does not converge is divergent. This can happen in three different ways:

- the partial sums tend to infinity

- the partial sums tend to minus infinity

- the sequence of partial sums oscillates so that there is no limit.

In the case of a divergent series the symbol $\sum_{k=1}^{\infty} a_k$ does not really mean anything (it isn't a number). We can then interpret it as the sequence of partial sums, which is always well-defined.

Basic results

Geometric series

A geometric series

$$\sum_{k=0}^{\infty} a q^k$$

converges if $|q| < 1$ (or $a = 0$), and then its sum is $\frac{a}{1-q}$. If $|q| \ge 1$ and $a \ne 0$, then the series diverges.
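The closed form $\frac{a}{1-q}$ for $|q| < 1$ can be compared with partial sums. In the following Python sketch the values $a = 2$, $q = 1/2$ and the function name `geometric_partial_sum` are assumptions chosen for the example.

```python
def geometric_partial_sum(a, q, n):
    """Partial sum a + a*q + ... + a*q**n of a geometric series."""
    return sum(a * q**k for k in range(n + 1))


a, q = 2.0, 0.5
closed_form = a / (1 - q)

for n in (5, 10, 20, 60):
    print(n, geometric_partial_sum(a, q, n), closed_form)

# For |q| >= 1 the partial sums do not settle down, e.g. q = 2:
print([geometric_partial_sum(1.0, 2.0, n) for n in range(6)])
```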

Rules of summation

Properties of convergent series:

Note: Compared to limits, there is no similar product-rule for series, because even for sums of two elements we have

$$(a_1 + a_2)(b_1 + b_2) \ne a_1 b_1 + a_2 b_2$$

in general. The correct generalization is the Cauchy product of two series, where also the cross terms are taken into account.

Note: The property $\lim_{k\to\infty} a_k = 0$ cannot be used to justify the convergence of a series; cf. the following examples. This is one of the most common elementary mistakes many people make when studying series!

Example

Explore the convergence of the series

Solution. The limit of the general term of the series is

As this is different from zero, the series diverges.

The divergence of the harmonic series $\sum_{k=1}^{\infty} \frac{1}{k}$ is a classical result first proven in the 14th century by Nicole Oresme, after which a number of proofs using different approaches have been published. Here we present two different approaches for comparison.

i) An elementary proof by contradiction. Suppose, for the sake of contradiction, that the harmonic series converges, i.e. there exists $s \in \mathbb{R}$ such that $s = \sum_{k=1}^{\infty} \frac{1}{k}$. In this case

$$s = \sum_{k=1}^{\infty} \frac{1}{2k-1} + \sum_{k=1}^{\infty} \frac{1}{2k} = \sum_{k=1}^{\infty} \frac{1}{2k-1} + \frac{s}{2}.$$

Now, by direct comparison we get

$$\frac{1}{2k-1} > \frac{1}{2k} > 0 \quad \text{for all } k \ge 1,$$

hence from the properties of summation it follows that

$$\sum_{k=1}^{\infty} \frac{1}{2k-1} > \sum_{k=1}^{\infty} \frac{1}{2k} = \frac{s}{2}.$$

But this implies that $s > \frac{s}{2} + \frac{s}{2} = s$, a contradiction. Therefore, the initial assumption that the harmonic series converges must be false and thus the series diverges.

ii) Proof using integral: Below a histogram with heights $\frac{1}{k}$ lies the graph of the function $f(x) = \frac{1}{x+1}$, so comparing areas we have

$$\sum_{k=1}^{n} \frac{1}{k} \ge \int_0^n \frac{dx}{x+1} = \ln(n+1) \to \infty$$

as $n \to \infty$.
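The slow growth of the harmonic partial sums can be seen numerically. The Python sketch below compares them with $\ln(n+1)$, the lower bound obtained from the standard integral comparison with $\frac{1}{x+1}$; the function name `harmonic_partial_sum` is chosen for this illustration.

```python
import math


def harmonic_partial_sum(n):
    """Partial sum 1 + 1/2 + ... + 1/n of the harmonic series."""
    return sum(1 / k for k in range(1, n + 1))


for n in (1, 10, 100, 10000):
    print(n, harmonic_partial_sum(n), math.log(n + 1))
```

The partial sums stay above $\ln(n+1)$ and therefore grow without bound, although very slowly. This also shows why numerical experiments alone can be misleading when deciding convergence.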

Positive series

Summing a series is often difficult or even impossible in closed form; sometimes only a numerical approximation can be calculated. The first goal then is to find out whether a series is convergent or divergent.

A series $\sum_{k=1}^{\infty} p_k$ is positive, if $p_k \ge 0$ for all $k$.

Convergence of positive series is quite straightforward:

Theorem 2.

A positive series converges if and only if the sequence of partial sums is bounded from above.

Why? Because the partial sums form an increasing sequence.

Example

Show that the partial sums of a superharmonic series

$$\sum_{k=1}^{\infty} \frac{1}{k^2}$$

satisfy $s_n < 2$ for all $n$, so the series converges.

Solution. This is based on the formula

$$\frac{1}{k^2} < \frac{1}{k(k-1)} = \frac{1}{k-1} - \frac{1}{k}$$

for $k \ge 2$, as it implies that

$$s_n \le 1 + \sum_{k=2}^{n} \left( \frac{1}{k-1} - \frac{1}{k} \right) = 2 - \frac{1}{n} < 2$$

for all $n \ge 1$.

This can also be proven with integrals.

Leonhard Euler found out in 1735 that the sum is actually $\frac{\pi^2}{6}$. His proof was based on comparison of the series and product expansion of the sine function.
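Both the bound $s_n \le 2 - \frac{1}{n}$ from the telescoping estimate and Euler's value $\frac{\pi^2}{6}$ can be checked against computed partial sums. The following Python sketch is only a numerical illustration; the function name `superharmonic_partial_sum` is an assumption.

```python
import math


def superharmonic_partial_sum(n):
    """Partial sum 1 + 1/4 + ... + 1/n^2."""
    return sum(1 / k**2 for k in range(1, n + 1))


for n in (1, 2, 10, 100, 100000):
    s_n = superharmonic_partial_sum(n)
    print(n, s_n, 2 - 1 / n)

print(math.pi**2 / 6)
```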

Absolute convergence

Theorem 3.

An absolutely convergent series converges (in the usual sense) and

$$\left| \sum_{k=1}^{\infty} a_k \right| \le \sum_{k=1}^{\infty} |a_k|.$$

This is a special case of the Comparison principle, see later.

Suppose that $\sum |a_k|$ converges. We study separately the positive and negative parts of $a_k$:

Let $b_k = \max(a_k, 0) \ge 0$ and $c_k = -\min(a_k, 0) \ge 0$. Since $b_k, c_k \le |a_k|$, the positive series $\sum b_k$ and $\sum c_k$ converge by Theorem 2.

Also, $a_k = b_k - c_k$, so $\sum a_k$ converges as a difference of two convergent series.

Example

Study the convergence of the alternating (= the signs alternate) series

$$\sum_{k=1}^{\infty} \frac{(-1)^{k+1}}{k^2} = 1 - \frac{1}{4} + \frac{1}{9} - \cdots$$

Solution. Since

$$\left| \frac{(-1)^{k+1}}{k^2} \right| = \frac{1}{k^2}$$

and the superharmonic series

$$\sum_{k=1}^{\infty} \frac{1}{k^2}$$

converges, then the original series is absolutely convergent. Therefore it also converges in the usual sense.

Alternating harmonic series

The usual convergence and absolute convergence are, however, different concepts:

Example

The alternating harmonic series

$$\sum_{k=1}^{\infty} \frac{(-1)^{k+1}}{k} = 1 - \frac{1}{2} + \frac{1}{3} - \frac{1}{4} + \cdots$$

converges, but not absolutely.

(Idea) Draw a graph of the partial sums $(s_n)$ to get the idea that even and odd index partial sums $s_{2n}$ and $s_{2n+1}$ are monotone and converge to the same limit.

The sum of this series is $\ln 2$, which can be derived by integrating the formula of a geometric series.

points are joined by line segments for visualization purposes
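The behaviour of the even and odd partial sums of the alternating harmonic series $1 - \frac12 + \frac13 - \cdots$ can be reproduced with a few lines of Python. This is a numerical sketch only; the function name and the number of terms (2000) are arbitrary choices.

```python
import math


def alternating_harmonic_partial_sums(count):
    """Partial sums s_1, ..., s_count of 1 - 1/2 + 1/3 - 1/4 + ..."""
    sums, total = [], 0.0
    for k in range(1, count + 1):
        total += (-1) ** (k + 1) / k
        sums.append(total)
    return sums


sums = alternating_harmonic_partial_sums(2000)
odd = sums[0::2]   # s_1, s_3, s_5, ...: decreasing
even = sums[1::2]  # s_2, s_4, s_6, ...: increasing

print(even[-1], math.log(2), odd[-1])
```

The even partial sums approach the limit from below and the odd ones from above, so the value $\ln 2$ is enclosed between them.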

Convergence tests

Comparison test

The preceding results generalize to the following:

Proof for Majorant. Since $a_k = |a_k| - (|a_k| - a_k)$ and

$$0 \le |a_k| - a_k \le 2|a_k|,$$

then $\sum a_k$ is convergent as a difference of two convergent positive series, $\sum |a_k|$ and $\sum (|a_k| - a_k)$, whose partial sums are bounded by means of the majorant. Here we use the elementary convergence property (Theorem 2.) for positive series; this is not circular reasoning!

Proof for Minorant. It follows from the assumptions that the partial sums of $\sum a_k$ tend to infinity, and the series is divergent.

Example

Solution. Since

for all $k$, the first series is convergent by the majorant principle.

On the other hand, the second series has a divergent harmonic series as a minorant. The latter series is thus divergent.

Ratio test

In practice, one of the best ways to study convergence/divergence of a series is the so-called ratio test, where the terms of the sequence are compared to a suitable geometric series:

Limit form of ratio test

(Idea) For a geometric series the ratio of two consecutive terms is exactly $q$. According to the ratio test, the convergence of some other series can also be investigated in a similar way, when the exact ratio is replaced by the limit $q = \lim_{k\to\infty} \left| \frac{a_{k+1}}{a_k} \right|$.

In the case $q < 1$, take $\varepsilon = \frac{1-q}{2} > 0$ in the formal definition of a limit. Thus starting from some index $k \ge k_0$ we have

$$\left| \frac{a_{k+1}}{a_k} \right| < q + \varepsilon = \frac{q+1}{2} < 1$$

and the claim follows from Theorem 4.

In the case $q > 1$ the general term of the series does not go to zero, so the series diverges.

The last case $q = 1$ does not give any information.

This case occurs for the harmonic series ($a_k = 1/k$, divergent!) and superharmonic ($a_k = 1/k^2$, convergent!) series. In these cases the convergence or divergence must be settled in some other way, as we did before.
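The limit of the ratios $|a_{k+1}/a_k|$ can be estimated numerically by evaluating the ratio at a large index. In the following Python sketch the helper `ratio` and the example series with terms $k/2^k$ are assumptions chosen for the illustration; the harmonic and superharmonic terms are the two inconclusive cases named above.

```python
def ratio(term, k):
    """Ratio |a_{k+1} / a_k| of two consecutive terms."""
    return abs(term(k + 1) / term(k))


examples = {
    "k / 2^k": lambda k: k / 2**k,   # ratio tends to 1/2: convergent
    "1 / k": lambda k: 1 / k,        # ratio tends to 1: test inconclusive
    "1 / k^2": lambda k: 1 / k**2,   # ratio tends to 1: test inconclusive
}

for name, term in examples.items():
    print(name, ratio(term, 1000))
```

A ratio evaluated at a single large index only suggests the value of the limit; it is a hint for which case of the test applies, not a proof.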

3. Continuity


In this section we define a limit of a function $f \colon S \to \mathbb{R}$ at a point $x_0$. It is assumed that the reader is already familiar with the limit of a sequence, the real line and the general concept of a function of one real variable.

Limit of a function

For a subset of real numbers, denoted by $S$, assume that $x_0 \in \mathbb{R}$ is such a point that there is a sequence of points $x_k \in S$ such that $x_k \to x_0$ as $k \to \infty$. Here the set $S$ is often the set of all real numbers, but sometimes an interval (open or closed).

Example 1.

Note that it is not necessary for $x_0$ to be in $S$. For example, the sequence $x_k = \frac{1}{k} \to 0$ as $k \to \infty$ in $S = ]0,2[$, and $x_k \in S$ for all $k = 1, 2, \ldots$ but $0$ is not in $S$.

Limit of a function

Example 3.

Example 5.

One-sided limits

An important property of limits is that they are always unique. That is, if $\lim_{x \to x_0} f(x) = a$ and $\lim_{x \to x_0} f(x) = b$, then $a = b$. Although a function may have only one limit at a given point, it is sometimes useful to study the behavior of the function when $x$ approaches the point $x_0$ from the left or the right side. These limits are called the left and the right limit of the function $f$ at $x_0$, respectively.

Definition 2: One-sided limits

Suppose $S$ is a set in $\mathbb{R}$ and $f$ is a function defined on the set $S \setminus \{x_0\}$. Then we say that $f$ has a left limit $y_0$ at $x_0$, and write

$$\lim_{x \to x_0-} f(x) = y_0$$

if $f(x_n) \to y_0$ as $n \to \infty$ for every sequence $(x_n)$ in the set ![S\cap ]-\infty,x_0[ =\{ x\in S : x < x_0 \} S\cap ]-\infty,x_0[ =\{ x\in S : x < x_0 \}](https://mycourses.aalto.fi/filter/tex/pix.php/c65f5d0e098970db4a09f85ad08fada6.gif) such that $x_n \to x_0$ as $n \to \infty$.

Similarly, we say that $f$ has a right limit $y_0$ at $x_0$, and write

$$\lim_{x \to x_0+} f(x) = y_0$$

if $f(x_n) \to y_0$ as $n \to \infty$ for every sequence $(x_n)$ in the set ![S\cap ]x_0,\infty[ =\{ x\in S : x_0 < x \} S\cap ]x_0,\infty[ =\{ x\in S : x_0 < x \}](https://mycourses.aalto.fi/filter/tex/pix.php/34e4590988ffbbcc84fe6f2077270c14.gif) such that $x_n \to x_0$ as $n \to \infty$.
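The sequence-based definition of one-sided limits can be explored numerically. The following Python sketch is only an illustration, not a proof: it evaluates a function along the sequences $x_0 - 1/n$ and $x_0 + 1/n$, which approach $x_0$ from the left and from the right. The names `one_sided_values` and `step` are hypothetical, and the step function is an assumed example.

```python
def one_sided_values(f, x0, side, count=6):
    """Evaluate f along x0 - 1/n (side='left') or x0 + 1/n (side='right')
    for n = 10, 100, ..., 10**count."""
    sign = -1.0 if side == "left" else 1.0
    return [f(x0 + sign / n) for n in (10 ** k for k in range(1, count + 1))]


def step(x):
    """Assumed example: value 0 for x < 0 and value 1 for x >= 0."""
    return 0.0 if x < 0 else 1.0


left = one_sided_values(step, 0.0, "left")    # values along x_n -> 0 from the left
right = one_sided_values(step, 0.0, "right")  # values along x_n -> 0 from the right
print(left[-1], right[-1])
```

Along these two sequences the values settle at different numbers, which suggests different left and right limits at $0$. The definition itself requires the same behavior for every admissible sequence, so a single sequence can only hint at the limit.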

Example 6.

Limit rules

The following limit rules are immediately obtained from the definition and basic algebra of real numbers.

Limits and continuity

In this section, we define continuity of a function. The intuitive idea behind continuity is that the graph of a continuous function is a connected curve. However, this is not sufficient as a mathematical definition for several reasons. For example, by using this definition, one cannot easily decide whether a given function is continuous or not.

Example 1.

Example 2.

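Let $x_0 \in \mathbb{R}$. We define a function $f : \mathbb{R} \to \mathbb{R}$ by

$$f(x) = \begin{cases} 2, & x < x_0, \\ 3, & x \geq x_0. \end{cases}$$

Then

$$\lim_{x \to x_0-} f(x) = 2 \quad \text{and} \quad \lim_{x \to x_0+} f(x) = 3 = f(x_0).$$

Therefore $f$ is not continuous at the point $x_0$.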

Some basic properties of continuous functions of one real variable are given next. From the limit rules (Theorem 2) we obtain:

Theorem 3.

The sum, the product and the difference of continuous functions are continuous. Then, in particular, polynomials are continuous functions. If $P$ and $Q$ are polynomials and $Q(x_0) \neq 0$, then $P/Q$ is continuous at a point $x_0$.

A composition of continuous functions is continuous if it is defined:

Theorem 4.

Let $f : \mathbb{R} \to \mathbb{R}$ and $g : \mathbb{R} \to \mathbb{R}$. Suppose that $f$ is continuous at a point $x_0$ and $g$ is continuous at $f(x_0)$. Then $g \circ f$ is continuous at a point $x_0$.

Note. If $f$ is continuous, then $|f|$ is continuous.

Why?

Note. If $f$ and $g$ are continuous, then $\max(f,g)$ and $\min(f,g)$ are continuous. (Here $\max(f,g)(x) = \max\{f(x), g(x)\}$.)

Why?
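One standard way to approach the maximum and minimum of two continuous functions is through the identities $\max\{a,b\} = (a + b + |a - b|)/2$ and $\min\{a,b\} = (a + b - |a - b|)/2$, which build them from sums, differences and absolute values. The Python sketch below only checks these identities at sample points. The function names are hypothetical, and $\sin$ and $\cos$ are assumed test functions.

```python
import math


def max_via_abs(a, b):
    """max(a, b) written with a sum and an absolute value."""
    return (a + b + abs(a - b)) / 2


def min_via_abs(a, b):
    """min(a, b) written with a sum and an absolute value."""
    return (a + b - abs(a - b)) / 2


def largest_mismatch(f, g, points):
    """Largest deviation of the two formulas from the built-in max and min."""
    worst = 0.0
    for x in points:
        a, b = f(x), g(x)
        worst = max(worst,
                    abs(max_via_abs(a, b) - max(a, b)),
                    abs(min_via_abs(a, b) - min(a, b)))
    return worst


points = [k / 10 for k in range(-50, 51)]
print(largest_mismatch(math.sin, math.cos, points))
```

Because the right-hand sides use only operations that preserve continuity (sum, difference, absolute value, multiplication by a constant), the identities reduce the question to rules already stated for continuous functions.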


Delta-epsilon definition

The so-called $(\varepsilon,\delta)$-definition for continuity is given next. The basic idea behind this test is that, for a function $f$ continuous at $x_0$, the values of $f(x)$ should get closer to $f(x_0)$ as $x$ gets closer to $x_0$.

This is the standard definition of continuity in mathematics, because it also works for more general classes of functions than the ones in this course, but it is not used in high-school mathematics. This important definition will be studied in depth in Analysis 1 / Mathematics 1.
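To make the idea concrete, the Python sketch below samples points within distance $\delta$ of $x_0$ and checks that the function values stay within $\varepsilon$ of $f(x_0)$. The linear function $f(x) = 4x$ and the choice $\delta = \varepsilon/4$ are assumptions made for this illustration, and the helper name `within_epsilon` is hypothetical. Sampling finitely many points can support the claim but does not prove it.

```python
def within_epsilon(f, x0, epsilon, delta, samples=1000):
    """Return True if |f(x) - f(x0)| < epsilon at all sampled x with |x - x0| < delta."""
    for k in range(1, samples):
        offset = delta * k / samples          # 0 < offset < delta
        for x in (x0 - offset, x0 + offset):  # one point on each side of x0
            if abs(f(x) - f(x0)) >= epsilon:
                return False
    return True


def f(x):
    """Assumed example function."""
    return 4 * x


epsilon = 0.1
print(within_epsilon(f, 0.5, epsilon, epsilon / 4))  # delta matched to epsilon
print(within_epsilon(f, 0.5, epsilon, 1.0))          # delta chosen too large
```

For this function $|f(x) - f(x_0)| = 4|x - x_0|$, so any $\delta \leq \varepsilon/4$ works, while a larger $\delta$ lets some sampled values escape the $\varepsilon$-band.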

Example 3.


Example 4.

Let $x_0 \in \mathbb{R}$. We define a function $f : \mathbb{R} \to \mathbb{R}$ by

$$f(x) = \begin{cases} 2, & x < x_0, \\ 3, & x \geq x_0. \end{cases}$$

In Example 2 we saw that this function is not continuous at the point $x_0$. To prove this using the $(\varepsilon,\delta)$-test, we need to find some $\varepsilon > 0$ and, for every $\delta > 0$, some $x_\delta \in \mathbb{R}$ such that $|x_\delta - x_0| < \delta$, but $|f(x_\delta) - f(x_0)| \geq \varepsilon$.

Proof. Let $\delta > 0$ and $\varepsilon = 1/2$. By choosing $x_\delta = x_0 - \delta/2$, we have

$$|x_\delta - x_0| = \frac{\delta}{2} < \delta$$

and

$$|f(x_\delta) - f(x_0)| = |2 - 3| = 1 \geq \varepsilon.$$

Therefore by Theorem 5 $f$ is not continuous at the point $x_0$.
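The argument can be checked numerically. The Python sketch below assumes a step function that takes the value $2$ to the left of $x_0$ and the value $3$ from $x_0$ onwards, and tests the witness point $x_0 - \delta/2$ for a range of shrinking $\delta$. The names `make_step` and `violates` are hypothetical, and $x_0 = 1$ is an arbitrary choice.

```python
def make_step(x0):
    """Assumed step function: 2 to the left of x0, 3 at x0 and to the right."""
    def f(x):
        return 2.0 if x < x0 else 3.0
    return f


def violates(f, x0, epsilon, delta):
    """Test the witness x = x0 - delta/2: it lies within delta of x0,
    yet f(x) differs from f(x0) by at least epsilon."""
    x = x0 - delta / 2
    return abs(x - x0) < delta and abs(f(x) - f(x0)) >= epsilon


x0 = 1.0
f = make_step(x0)
results = [violates(f, x0, 0.5, 10.0 ** (-k)) for k in range(12)]
print(all(results))
```

However small $\delta$ is made, the same $\varepsilon = 1/2$ is violated, which is exactly what the failure of the $(\varepsilon,\delta)$-test requires.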


Properties of continuous functions

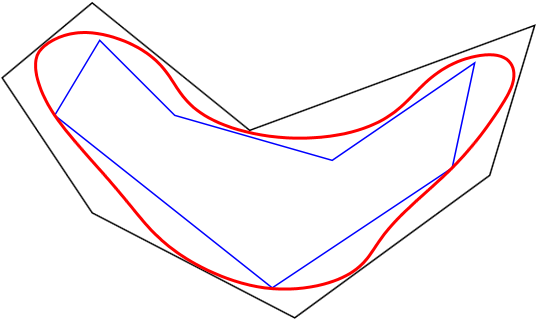

This section contains some fundamental properties of continuous functions. We start with the Intermediate Value Theorem for continuous functions, also known as Bolzano's Theorem. This theorem states that a function that is continuous on a given (closed) real interval attains all values between its values at the endpoints of the interval. Intuitively, this follows from the fact that the graph of a continuous function defined on a real interval is a connected curve.

The Intermediate Value Theorem.

Example 1.

Let function $f : \mathbb{R} \to \mathbb{R}$, where

$$f(x) = x^5 - 3x - 1.$$

Show that there is at least one $c \in \mathbb{R}$ such that $f(c) = 0$.

Solution. As a polynomial function, $f$ is continuous. And because

$$f(1) = 1^5 - 3 \cdot 1 - 1 = -3 < 0$$

and

$$f(-1) = (-1)^5 - 3 \cdot (-1) - 1 = 1 > 0,$$

by the Intermediate Value Theorem there is at least one ![c \in ]-1, 1[ c \in ]-1, 1[](https://mycourses.aalto.fi/filter/tex/pix.php/1d1e7e33b0ebb4086ca0cfea832f3789.gif) such that $f(c) = 0$.
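The Intermediate Value Theorem only guarantees that a zero exists. The bisection method turns the same sign-change argument into a way of locating one. The Python sketch below assumes the polynomial $f(x) = x^5 - 3x - 1$ on $[-1, 1]$. The function name `bisect` and the tolerance are hypothetical choices made for this illustration.

```python
def f(x):
    """Assumed polynomial for this example."""
    return x**5 - 3 * x - 1


def bisect(func, a, b, tol=1e-10):
    """Locate a zero of a continuous func on [a, b] with a sign change at the endpoints."""
    fa, fb = func(a), func(b)
    if fa == 0:
        return a
    if fb == 0:
        return b
    if fa * fb > 0:
        raise ValueError("func(a) and func(b) must have opposite signs")
    while b - a > tol:
        mid = (a + b) / 2
        fm = func(mid)
        if fm == 0:
            return mid
        if fa * fm < 0:      # sign change in the left half
            b, fb = mid, fm
        else:                # sign change in the right half
            a, fa = mid, fm
    return (a + b) / 2


c = bisect(f, -1.0, 1.0)
print(c, f(c))
```

Each step halves an interval on which the function changes sign, so the Intermediate Value Theorem applies again to the smaller interval.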

Example 2.

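Let $f(x) = x^3 - x$, whose only zeros are $x = -1$, $x = 0$ and $x = 1$. By the Intermediate Value Theorem, the continuous function $f$ cannot change sign between consecutive zeros, so we have $f(x) > 0$ for $-1 < x < 0$ or $x > 1$. Similarly, $f(x) < 0$ for $x < -1$ or $0 < x < 1$, because $f(-2) = -6$, $f(-1/2) = 3/8$, $f(1/2) = -3/8$ and $f(2) = 6$.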


Next we prove that a continuous function defined on a closed real interval is necessarily bounded. For this result, it is important that the interval is closed. A counterexample for an open interval is given after the next theorem.

Example 4.

Example 5.

Let ![f:[-1,2] \to \mathbb{R} f:[-1,2] \to \mathbb{R}](https://mycourses.aalto.fi/filter/tex/pix.php/46f6b5865e82341801d84f074d5275a9.gif), where

$$f(x) = -x^3 - x + 3.$$

The domain of the function is ![[-1,2] [-1,2]](https://mycourses.aalto.fi/filter/tex/pix.php/939b17134a44bb821e6c01efd044b32e.gif). To determine the range of the function, we first notice that the function is decreasing. We will now show this.

Because $x_1 < x_2$,

$$-x_1^3 > -x_2^3$$

and

$$-x_1 > -x_2.$$

Thus, if $x_1 < x_2$ then $f(x_1) > f(x_2)$, which means that the function $f$ is decreasing.

We know that a decreasing function attains its minimum value at the right endpoint of the interval. Thus, the minimum value of ![f:[-1,2] \to \mathbb{R} f:[-1,2] \to \mathbb{R}](https://mycourses.aalto.fi/filter/tex/pix.php/46f6b5865e82341801d84f074d5275a9.gif) is

$$f(2) = -7.$$

Respectively, a decreasing function attains its maximum value at the left endpoint of the interval, and so the maximum value of ![f:[-1,2] \to \mathbb{R} f:[-1,2] \to \mathbb{R}](https://mycourses.aalto.fi/filter/tex/pix.php/46f6b5865e82341801d84f074d5275a9.gif) is

$$f(-1) = 5.$$

As a polynomial function, $f$ is continuous and it therefore takes all the values between its minimum and maximum values. Hence, the range of $f$ is ![[-7, 5] [-7, 5]](https://mycourses.aalto.fi/filter/tex/pix.php/e8699196563455925f438ba7d4253294.gif).

Example 6.

Suppose that $P$ is a polynomial. Then $P$ is continuous on $\mathbb{R}$ and, by Theorem 7, $P$ is bounded on every closed interval ![[a,b] [a,b]](https://mycourses.aalto.fi/filter/tex/pix.php/2c3d331bc98b44e71cb2aae9edadca7e.gif), $a < b$. Furthermore, by Theorem 8, $P$ must have minimum and maximum values on ![[a,b] [a,b]](https://mycourses.aalto.fi/filter/tex/pix.php/2c3d331bc98b44e71cb2aae9edadca7e.gif).

Note. Theorem 8 is connected to the Intermediate Value Theorem in the following way:

4. Derivative

Derivative

The definition of the derivative of a function is given next. We start with an example illustrating the idea behind the formal definition.

Example 0.

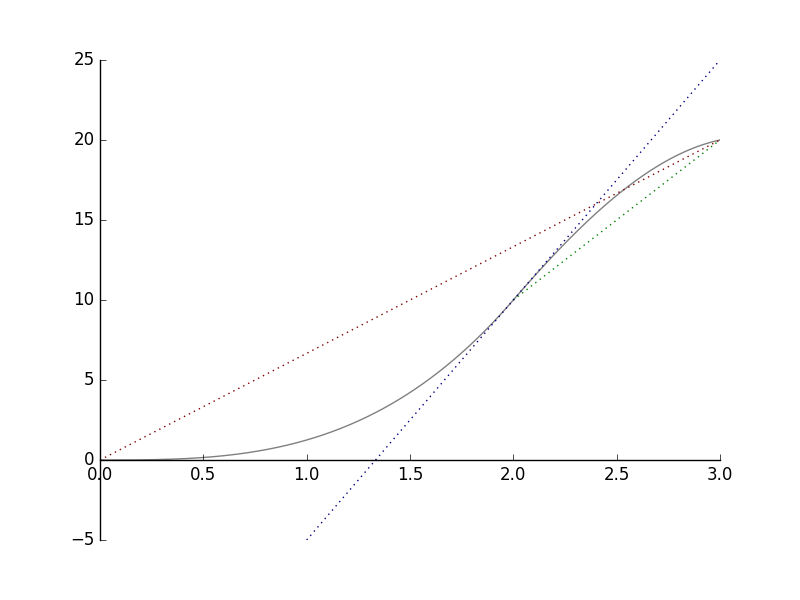

The graph below shows how far a cyclist gets from his starting point.

a) Look at the red line. It shows how far the cyclist moved in three hours. Dividing this distance (in km) by the three hours gives the average speed of the whole trip in km/h.

b) Now look at the green line. It shows how much further the cyclist moved during the third hour. Dividing this distance (in km) by one hour gives the average speed of that time interval in km/h.

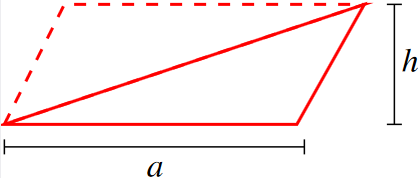

Notice that the slopes of the red line and the green line are the same values as the corresponding average speeds.

c) Look at the blue line. It is the tangent of the curve at the point two hours after the departure. Using the same principle as with average speeds, we conclude that the speed of the cyclist two hours after the departure is the slope of this tangent, in km/h.

Now we will proceed to the general definition:

Definition: Derivative

Let  . The derivative of function

. The derivative of function  at the point

at the point  is

is  If

If  exists, then

exists, then  is said to be differentiable at the point

is said to be differentiable at the point  .

.

Note: Since $x = x_0 + h$, then $h = x - x_0$, and thus the definition can also be written in the form

$$f'(x_0) = \lim_{x\to x_0} \frac{f(x)-f(x_0)}{x-x_0}.$$

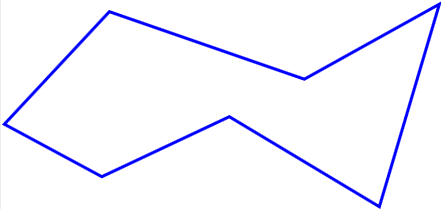

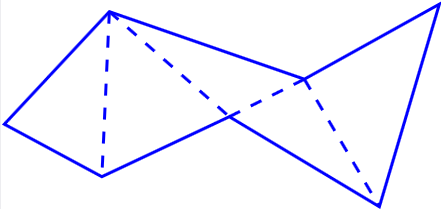

Interpretation. Consider the curve $y = f(x)$. Now if we draw a line through the points $(x_0, f(x_0))$ and $(x_0+h, f(x_0+h))$, we see that the slope of this line is

$$\frac{f(x_0+h)-f(x_0)}{h}.$$

When $h \to 0$, the second point approaches the first, and the limiting line touches the curve $y = f(x)$ at the point $(x_0, f(x_0))$. This line is the tangent of the curve $y = f(x)$ at the point $(x_0, f(x_0))$ and its slope is

$$\lim_{h\to 0} \frac{f(x_0+h)-f(x_0)}{h},$$

which is the derivative of the function $f$ at $x_0$. Hence, the tangent is given by the equation

$$y = f(x_0) + f'(x_0)(x - x_0).$$
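As a short worked illustration (the function $f(x) = x^2$ and the point $x_0 = 1$ are chosen here as an example and do not come from the text), take the tangent line in the standard form $y = f(x_0) + f'(x_0)(x - x_0)$. The derivative follows directly from the definition:

$$\begin{aligned} f'(1) &= \lim_{h\to 0} \frac{(1+h)^2 - 1^2}{h} = \lim_{h\to 0} \frac{2h + h^2}{h} = \lim_{h\to 0} (2 + h) = 2, \\ y &= f(1) + f'(1)(x - 1) = 1 + 2(x - 1) = 2x - 1. \end{aligned}$$

So the tangent of the parabola $y = x^2$ at the point $(1, 1)$ is the line $y = 2x - 1$.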


Example 2.

Let $f\colon \mathbb{R} \to \mathbb{R}$ be the function $f(x) = ax + b$. We find the derivative of $f$.

Immediately from the definition we get:

$$\begin{aligned} f'(x) &= \lim_{h\to 0} \frac{f(x+h)-f(x)}{h} \\ &= \lim_{h\to 0} \frac{[a(x+h)+b]-[ax+b]}{h} \\ &= \lim_{h\to 0} \frac{ah}{h} \\ &= \lim_{h\to 0} a \\ &= a. \end{aligned}$$

Here $a$ is the slope of the tangent line. Note that the derivative at $x$ does not depend on $x$ because $y = ax + b$ is the equation of a line.

Note. When $a = 0$, we get $f(x) = b$ and $f'(x) = 0$. The derivative of a constant function is zero.

Example 3.

Let $f\colon \mathbb{R} \to \mathbb{R}$ be the function $f(x) = |x|$. Does $f$ have a derivative at $x = 0$?

The graph $y = f(x)$ has no tangent at the point $(0,0)$:

$$\frac{f(0+h)-f(0)}{h} = \frac{|h|}{h} = \begin{cases} +1, & \text{when } h > 0, \\ -1, & \text{when } h < 0. \end{cases}$$

Thus $f'(0)$ does not exist.

Conclusion. The function $f$ is not differentiable at the point $x = 0$.
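The disagreement between the two one-sided limits can also be seen numerically. The sketch below is an illustration only. The helper name `one_sided_quotients` is hypothetical, and the absolute value function is used as the test case.

```python
def one_sided_quotients(f, x0, h):
    """Return the difference quotients of f at x0 for the steps +h and -h."""
    right = (f(x0 + h) - f(x0)) / h        # step to the right of x0
    left = (f(x0 - h) - f(x0)) / (-h)      # step to the left of x0
    return right, left


if __name__ == "__main__":
    # For the absolute value at 0 the two quotients stay at +1 and -1,
    # however small h is, so they cannot share a common limit.
    for h in (0.1, 0.01, 0.001):
        print(h, one_sided_quotients(abs, 0.0, h))
```

For a function that is differentiable at `x0`, both returned values would approach the same number as `h` shrinks.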

Remark. Let $f\colon\, ]a,b[\, \to \mathbb{R}$. If $f'(x)$ exists for every $x \in\, ]a,b[$, then we get a function $f'\colon\, ]a,b[\, \to \mathbb{R}$. We write:

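| (1) | $f(x)$ | $= f^{(0)}(x)$, | |
| (2) | $f'(x)$ | $= f^{(1)}(x)$ | $= \frac{d}{dx} f(x)$, |
| (3) | $f''(x)$ | $= f^{(2)}(x)$ | $= \frac{d^2}{dx^2} f(x)$, |
| (4) | $f'''(x)$ | $= f^{(3)}(x)$ | $= \frac{d^3}{dx^3} f(x)$, |
| ... | | | |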

Here $f''(x)$ is called the second derivative of $f$ at $x$, $f^{(3)}(x)$ is the third derivative, and so on.

We introduce the notation \begin{eqnarray} C^n\bigl( ]a,b[\bigr) =\{ f\colon \, ]a,b[\, \to \mathbb{R} & \mid & f \text{ is } n \text{ times differentiable on the interval } ]a,b[ \nonumber \\ & & \text{ and } f^{(n)} \text{ is continuous}\}. \nonumber \end{eqnarray} These functions are said to be n times continuously differentiable.

Example 4.

The distance moved by a cyclist (or a car) is given by $s(t)$. Then the speed at the moment $t$ is $s'(t)$ and the acceleration is $s''(t)$.
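The relation between distance, speed and acceleration can be checked numerically. The following is a sketch under stated assumptions: the distance function $s(t) = 5t^2$ is hypothetical, and the derivatives are approximated with central differences, a standard numerical technique that the text itself does not discuss.

```python
def first_derivative(s, t, h=1e-4):
    """Central-difference approximation of s'(t)."""
    return (s(t + h) - s(t - h)) / (2 * h)


def second_derivative(s, t, h=1e-3):
    """Central-difference approximation of s''(t)."""
    return (s(t + h) - 2 * s(t) + s(t - h)) / (h * h)


def distance(t):
    # Hypothetical distance function s(t) = 5 t^2 (an assumption for illustration).
    return 5.0 * t * t


if __name__ == "__main__":
    t = 2.0
    # Exact values for this s: speed s'(t) = 10 t, acceleration s''(t) = 10.
    print("speed:", first_derivative(distance, t))
    print("acceleration:", second_derivative(distance, t))
```

The step sizes are a compromise: a smaller `h` reduces the approximation error but amplifies rounding error, especially in the second derivative.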

Linearization and differential

When $x$ is close to $x_0$, the difference quotient is close to the derivative, which gives the approximation

$$f(x) \approx f(x_0) + f'(x_0)(x - x_0),$$

where the right-hand side is the linearization or the differential of $f$ at $x_0$. The differential is denoted by $df$. The graph of the linearization,

$$y = f(x_0) + f'(x_0)(x - x_0),$$

is the tangent line drawn on the graph of the function $f$ at the point $(x_0, f(x_0))$. Later, in multi-variable calculus, the true meaning of the differential becomes clear. For now, there is no need to worry about the details.
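To see how good the linearization $f(x_0) + f'(x_0)(x - x_0)$ is near $x_0$, one can compare it with the function itself. The sketch below is an illustration only: the helper name `linearization` is hypothetical, and the example $f(x) = \sqrt{x}$ at $x_0 = 4$ with $f'(4) = 1/4$ is an assumption, with the derivative value supplied by hand.

```python
import math


def linearization(f, dfx0, x0):
    """Return the function x -> f(x0) + f'(x0) * (x - x0).

    dfx0 is the value f'(x0), supplied by the caller.
    """
    fx0 = f(x0)
    return lambda x: fx0 + dfx0 * (x - x0)


if __name__ == "__main__":
    # Example (assumed): f(x) = sqrt(x) at x0 = 4, where f'(4) = 1/4.
    approx = linearization(math.sqrt, 0.25, 4.0)
    for x in (4.1, 4.5, 6.0):
        # The gap between the two values grows as x moves away from x0.
        print(x, math.sqrt(x), approx(x))
```

The approximation is only local: it is accurate close to $x_0$ and degrades as $x$ moves away.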

Properties of derivative

Next we give some useful properties of the derivative. These properties allow us to find derivatives for some familiar classes of functions such as polynomials and rational functions.

Continuity and derivative

If $f$ is differentiable at the point $x_0$, then $f$ is continuous at the point $x_0$:

$$\lim_{h\to 0} f(x_0+h) = f(x_0).$$

Why? Because if $f$ is differentiable, then we get

$$f(x_0+h) = f(x_0) + h\,\frac{f(x_0+h)-f(x_0)}{h} \to f(x_0) + 0\cdot f'(x_0) = f(x_0)$$

as $h \to 0$.

Note. If a function is continuous at the point $x_0$, it doesn't have to be differentiable at that point. For example, the function $f(x) = |x|$ is continuous, but not differentiable at the point $x = 0$.

Differentiation Rules

For $n \in \mathbb{N}$ we repeatedly apply the product rule, and obtain

$$\frac{d}{dx} x^n = \frac{d}{dx}\bigl(x \cdot x^{n-1}\bigr) = x^{n-1} + x\,\frac{d}{dx} x^{n-1} = \cdots = n x^{n-1}.$$

The case of negative $n$ is obtained from this and the product rule applied to the identity $x^{n} \cdot x^{-n} = 1$.

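From the power rule we obtain a formula for the derivative of a polynomial. Let

$$P(x) = a_n x^n + a_{n-1} x^{n-1} + \dots + a_1 x + a_0,$$

where $a_0, a_1, \dots, a_n \in \mathbb{R}$. Then

$$P'(x) = n a_n x^{n-1} + (n-1) a_{n-1} x^{n-2} + \dots + 2 a_2 x + a_1.$$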
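Differentiating a polynomial term by term with the power rule is mechanical enough to express in a few lines of code. The sketch below is an illustration, not part of the course material. The function names and the convention that `coeffs[k]` holds the coefficient of $x^k$ are assumptions made here.

```python
def poly_derivative(coeffs):
    """Differentiate a polynomial given by its coefficients.

    coeffs[k] is the coefficient of x**k. Each term a_k x^k becomes
    k * a_k x^(k-1), and the constant term drops out.
    """
    return [k * a for k, a in enumerate(coeffs)][1:]


def poly_eval(coeffs, x):
    """Evaluate the polynomial at x using Horner's scheme."""
    result = 0
    for a in reversed(coeffs):
        result = result * x + a
    return result


if __name__ == "__main__":
    p = [1, 2, 3]              # P(x) = 1 + 2x + 3x^2
    dp = poly_derivative(p)    # P'(x) = 2 + 6x
    print(dp, poly_eval(dp, 2))
```

With this convention the derivative of a constant polynomial is the empty list, which `poly_eval` evaluates to 0, in line with the fact that the derivative of a constant function is zero.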

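Suppose that $f$ is differentiable at $x$ and $f(x) \neq 0$. We determine $\dfrac{d}{dx}\dfrac{1}{f(x)}$.

From the definition we obtain:

$$\frac{d}{dx}\frac{1}{f(x)} = \lim_{h\to 0} \frac{\frac{1}{f(x+h)} - \frac{1}{f(x)}}{h} = \lim_{h\to 0} \frac{f(x) - f(x+h)}{h} \cdot \frac{1}{f(x)\,f(x+h)} = -\frac{f'(x)}{f(x)^2}.$$

In the last step we used the fact that $f$ is continuous at $x$, so $f(x+h) \to f(x)$ as $h \to 0$.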

Example 3.

The one-sided limits of the difference quotient have different signs at a local extremum. For example, for a local maximum it holds that

$$\begin{aligned} \frac{f(x_0+h)-f(x_0)}{h} &= \frac{\text{negative}}{\text{positive}} \le 0, \text{ when } h>0, \\ \frac{f(x_0+h)-f(x_0)}{h} &= \frac{\text{negative}}{\text{negative}} \ge 0, \text{ when } h<0, \end{aligned}$$

and $|h|$ is so small that $f(x_0)$ is a maximum on the interval $[x_0-|h|, x_0+|h|]$.

Derivatives of Trigonometric Functions

In this section, we give differentiation formulas for trigonometric functions $\sin$, $\cos$ and $\tan$.

The Chain Rule

In this section we learn a formula for finding the derivative of a composite function. This important formula is known as the Chain Rule.

The Chain Rule.
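As a numerical sanity check, the sketch below assumes the Chain Rule in its standard form $(f \circ g)'(x) = f'(g(x))\,g'(x)$ and the standard derivatives $\sin' = \cos$ and $(x^2)' = 2x$. The choice $f = \sin$, $g(x) = x^2$ and the helper names are illustrative assumptions. The derivative of the composite is approximated with a central difference and compared with the value predicted by the rule.

```python
import math


def central_difference(F, x, h=1e-6):
    """Central-difference approximation of F'(x)."""
    return (F(x + h) - F(x - h)) / (2 * h)


def composite(x):
    # (f o g)(x) with f = sin and g(x) = x^2 (illustrative choice).
    return math.sin(x * x)


def chain_rule_value(x):
    # f'(g(x)) * g'(x) = cos(x^2) * 2x
    return math.cos(x * x) * 2 * x


if __name__ == "__main__":
    for x in (0.5, 1.3, 2.0):
        # The two printed values should agree to several decimal places.
        print(x, central_difference(composite, x), chain_rule_value(x))
```

Agreement at a few sample points is of course not a proof, only a quick way to catch a wrongly applied rule.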

Proof.

Example 1.

Example 2.

Example 3.

Extremal Value Problems

We will discuss the Intermediate Value Theorem for differentiable functions, and its connections to extremal value problems.

Definition: Local Maxima and Minima

A function $f\colon A \to \mathbb{R}$ has a local maximum at the point $x_0 \in A$, if for some $h > 0$ and for all $x \in A$ such that $|x - x_0| < h$, we have $f(x) \le f(x_0)$.

Similarly, a function $f\colon A \to \mathbb{R}$ has a local minimum at the point $x_0 \in A$, if for some $h > 0$ and for all $x \in A$ such that $|x - x_0| < h$, we have $f(x) \ge f(x_0)$.

A local extremum is a local maximum or a local minimum.

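Remark. If $f(x_0)$ is a local maximum value and $f'(x_0)$ exists, then

$$f'(x_0) = \lim_{h\to 0^+} \frac{f(x_0+h)-f(x_0)}{h} \le 0 \quad\text{and}\quad f'(x_0) = \lim_{h\to 0^-} \frac{f(x_0+h)-f(x_0)}{h} \ge 0.$$

Hence $f'(x_0) = 0$.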
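A small worked illustration of this remark (the function $f(x) = 2x - x^2$ is chosen here as an example and is not from the text): the derivative of this polynomial is $f'(x) = 2 - 2x$, which vanishes only at $x_0 = 1$, and completing the square confirms that this point is a local maximum:

$$\begin{aligned} f'(x) &= 2 - 2x = 0 \iff x = 1, \\ f(x) &= 1 - (x-1)^2 \le 1 = f(1) \quad \text{for all } x \in \mathbb{R}. \end{aligned}$$

Note that the condition $f'(x_0) = 0$ alone does not guarantee an extremum. For instance, $f(x) = x^3$ has $f'(0) = 0$ but no local extremum at $0$.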

We get:

Example 1.

Let $f$ be defined by

Then

and we can see that at the points  and  the local maximum and minimum of $f$ are obtained,

Finding the global extrema