ELEC-E5510 - Speech Recognition D, Lecture, 26.10.2022-9.12.2022

This course space end date is set to 09.12.2022.

Overview

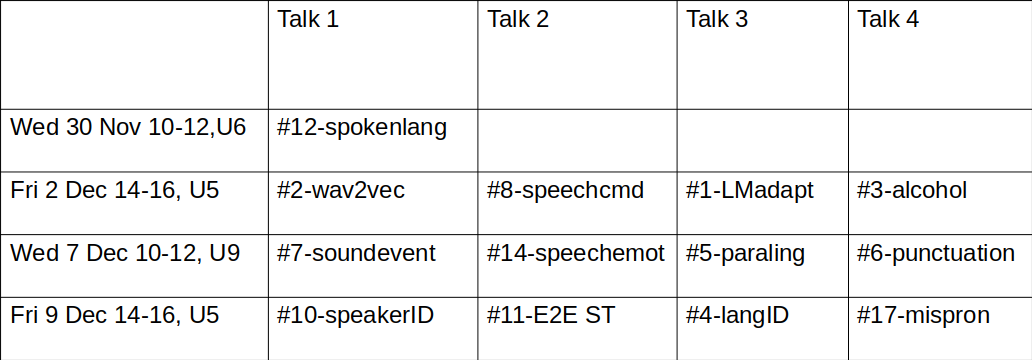

In this list, the 2021 slides will be replaced by the 2022 versions, at the latest after each lecture has been given. The titles may be identical, but the contents are improved each year based on feedback. The project works and their schedule change each year.

For practicalities, e.g. regarding the Lecture Quizzes and Exercises, check MyCourses > Course Practicalities.

Lecture exercise 4: Token passing decoder (Assignment)

Fill in the last column with the final probabilities of the tokens, select the best token, and output the corresponding state sequence!

The goal is to verify that you have learned the idea of the Token passing decoder. The extremely simplified HMM system is almost the same as in the 2B Viterbi algorithm exercise. The observed "sounds" are simply quantized to either "A" or "B", with given probabilities in states S0 and S1. The task now is to find the most likely state sequence that can produce the sound sequence A, A, B when a simple language model (LM) is applied. The toy LM used here is a look-up table that gives the probabilities of different state sequences, such as (0,1) and (0,0,1), for n-grams up to 3-grams.
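To make the procedure concrete, here is a minimal Python sketch of token passing for a two-state system of this kind. It is not the exercise solution. All probabilities in `EMISSION` and `LM` are made-up placeholders, not the values from the exercise sheet. The names `Token`, `lm_prob` and `decode` are hypothetical. The backoff to a shorter history when a 3-gram is missing from the table is also an assumption of this sketch. Each token carries its accumulated probability and its state path, and only the best token per LM history (the last two states) is kept.

```python
from typing import NamedTuple, Tuple

# Placeholder emission probabilities P(sound | state); NOT the exercise values.
EMISSION = {
    0: {"A": 0.8, "B": 0.2},
    1: {"A": 0.3, "B": 0.7},
}

# Placeholder toy LM: P(next state | history of up to two previous states).
# Histories (1, 0) and (1, 1) are left out on purpose to show the backoff.
LM = {
    (): {0: 0.6, 1: 0.4},
    (0,): {0: 0.5, 1: 0.5},
    (1,): {0: 0.4, 1: 0.6},
    (0, 0): {0: 0.3, 1: 0.7},
    (0, 1): {0: 0.2, 1: 0.8},
}


class Token(NamedTuple):
    prob: float              # accumulated probability of the path
    path: Tuple[int, ...]    # state sequence visited so far


def lm_prob(history, state):
    """Look up P(state | history) using the longest history in the table."""
    for n in (2, 1, 0):
        context = tuple(history[-n:]) if n else ()
        if len(context) == n and context in LM:
            return LM[context][state]
    raise KeyError(f"no LM entry for history {history}")


def decode(observations):
    """Pass tokens through all frames and return the best final token."""
    tokens = {(): Token(1.0, ())}
    for sound in observations:
        new_tokens = {}
        for token in tokens.values():
            for state in EMISSION:
                prob = (token.prob
                        * lm_prob(token.path, state)
                        * EMISSION[state][sound])
                candidate = Token(prob, token.path + (state,))
                # Tokens sharing the last two states have the same LM
                # future, so only the most probable one needs to survive.
                key = candidate.path[-2:]
                if key not in new_tokens or prob > new_tokens[key].prob:
                    new_tokens[key] = candidate
        tokens = new_tokens
    return max(tokens.values(), key=lambda t: t.prob)


if __name__ == "__main__":
    best = decode(["A", "A", "B"])
    print("best state sequence:", best.path)
    print("probability:", best.prob)
```

With these placeholder numbers the best token for A, A, B carries the path (0, 0, 1). The numbers on the exercise sheet may lead to a different result.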

Hint: You can upload an edited source document, a PDF file, a photo of your notes, or a text file with the numbers, whichever is easiest for you. The answer does not have to be correct to earn the activity point.