ELEC-E5431 - Large scale data analysis D, Lecture, 10.1.2023-15.2.2023



Tuesday 14.2: Guest lecture by Filip Elvander on "Optimal mass transport in signal processing".

Wednesday 15.2: Guest lecture by Hassan Naseri on "Quantum Computing 101".

Participation in the guest lectures is mandatory. If you cannot attend, please email us the reason for your absence.

Basic information

- Credit: 5 ECTS

- Level: M.Sc., also suitable for doctoral studies.

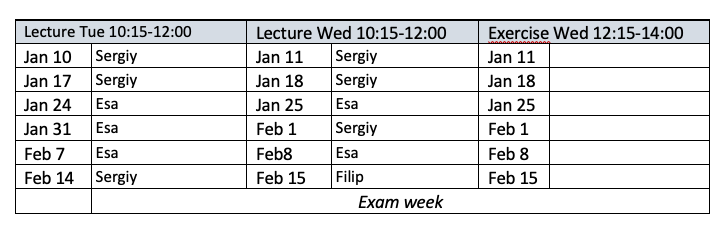

- Teaching: period III (12 x 2h lectures), 10.1.2023 – 15.2.2023

- Exercise sessions: period III (6 x 2h sessions)

- Language: English.

News: a Teams channel has been created for the course. Please join it and post your questions about the course or the homework there.

Course personnel

- Prof. Sergiy Vorobyov

- Associate Prof. Esa Ollila

- Teaching assistant (TA): Xinjue Wang

- Guest Lecturer: Assistant professor Filip Elvander

You may contact us by email using the format firstname.lastname@aalto.fi.

Objectives:

- to give students the tools and training to recognize the problems of processing large scale data that arise in engineering and computer science

- to present the basic theory of such problems, concentrating on results that are useful in computation

- to give students a thorough understanding of how such problems are thought of, modeled and addressed, and some experience in solving them

- to give students the background required to use the methods in their own research work

- to give students sufficient background information in linear algebra, statistical and machine learning tools, optimization, and sparse reconstruction for processing large scale data

- to give students a number of examples of successful application of the techniques for signal processing of large scale data.

Materials and textbooks

- Course slides, lecture and exercise session notes, videos, and code

Several useful textbooks are available as online PDFs, such as:

- Hastie, T., Tibshirani, R., & Friedman, J. (2009). The elements of statistical learning: data mining, inference, and prediction. Springer, New York, USA. [ESL] https://web.stanford.edu/~hastie/ElemStatLearn/printings/ESLII_print12.pdf

- Hastie, T., Tibshirani, R., & Wainwright, M. (2015). Statistical learning with sparsity: the lasso and generalizations. CRC Press. https://web.stanford.edu/~hastie/StatLearnSparsity_files/SLS.pdf

- Y. Nesterov, Introductory Lectures on Convex Optimization: A Basic Course

Assessment and grading

- Three homework assignments in total. These are computer programming assignments, preferably done in Python or MATLAB.

A homework assignment is announced almost every week. The homework will be made available on MyCourses and submitted via MyCourses (follow the instructions for each homework).

Grading will be based on homework assignments.

Bonus points may be awarded for active participation in lectures and exercise sessions.

Contents (tentative, subject to change):

Sergiy:

- Optimization Methods for Large Scale Data Analysis (First-Order Accelerated Methods for Smooth Functions, Extension to Non-Smooth Functions - Sub-gradient Methods)

- Optimization Methods for Huge Scale Data Analysis (Stochastic Gradient Methods, Proximal Gradient Method, Mirror Descent, Frank-Wolfe, ADMM, Block-Coordinate Descent)

- Applications to Data Analysis with Structured Sparsity and Machine Learning

Esa:

- Classification and regression tasks, basic principles

- Lasso and its generalisations (including Fused Lasso, square-root Lasso, elastic net). Efficient computation of Lasso.

- Decision Trees, Bagging, Random Forests.

- Boosting and its variants

Guest lecturer (Filip): TBA